This blog examines the reasons why migrating from Oracle to Snowflake is a good idea, the advantages and the challenges you could face in moving from Oracle to Snowflake. It also discusses the strategy, stages, and steps of the Oracle to Snowflake migration process and how BryteFlow as an Oracle to Snowflake migration tool can make the process smoother, faster, and completely no-code.

Quick Links

- About Oracle

- About Snowflake

- Why Choose Snowflake Over Oracle?

- Snowflake Migration Best Practices

- Oracle to Snowflake Mapping – Data Types and Other Objects

- Oracle to Snowflake Migration Steps

- Why you should use BryteFlow to get your Oracle Data to Snowflake

- How to migrate data from Oracle to Snowflake Step by Step with BryteFlow

About Oracle

Oracle or Oracle DB as it is also called, is a proprietary Relational Database Management System (RDBMS) owned by the Oracle Corporation. The Oracle DB is based on a framework where data objects can be accessed with SQL directly by users or through an application front end. Oracle can communicate across networks, enabled by its proprietary network component. Learn about Oracle to Postgres Migration

The Oracle database is largely used for online transaction processing (OLTP), data warehousing, and storage and retrieval of information. Oracle was a pioneer in the use of enterprise grid computing (EGC). Enterprise grid computing refers to operations carried out by groups of computers joined on a network. It enables users to pull data and access applications from any computer that is part of the network.

Oracle DB is versatile and can be run across multiple platforms, including Windows, Linux, UNIX, and macOS, not to mention open platforms like GNU/Linux. Oracle can be run on-premise and on the Cloud on platforms such as AWS, Azure and GCP. About BryteFlow CDC for Oracle

About Snowflake

Snowflake is a highly popular, fully managed data platform that works as a Cloud Data Warehouse. Snowflake is highly scalable and powerful, can handle huge volumes of data, and operate at high speed. It is available as a Software-as-a-Service (SaaS) solution and provides a unified data platform that allows for separation of storage and compute, data sharing, scalable compute, cloning of data etc. It enables easy use of third-party tools for the modern-day data requirements of global enterprises. Build a Snowflake Data Warehouse

One of the biggest benefits of Snowflake is that users are spared the nitty-gritty of managing the infrastructure including backend tasks like server installation and maintenance, installing updates etc. Snowflake democratizes data by allowing users easy access to data at unlimited scale and allowing for secure data sharing. It provides flexible, and user-friendly options for data processing, storage, and analytics. Snowflake is Cloud-agnostic and can be built on AWS (Amazon Web Services), Microsoft Azure, and GCP (Google Cloud Platform). It lends itself to multiple use cases like data lakes, data warehousing, data science objectives, data engineering and data sharing, and adheres to the ANSI SQL standard, supporting both- organized and semi-structured data like JSON, Parquet, and XML. SQL Server to Snowflake in 4 Easy Steps

Why Choose Snowflake Over Oracle?

Oracle is a leading database system used for various data operations. Oracle Exadata, Oracle’s data warehouse product has limitations in scalability, high costs for licensing, maintenance, and hardware, and requires dedicated expert resources for management. Snowflake Data Cloud offers unlimited scalability, separates compute from storage to reduce costs, and scales instantly using a multi-cluster architecture to deal with increased workloads. It allows for a virtually unlimited number of users, ensures performance, and charges only for actual usage without licensing costs or upgrade fees. Oracle to Teradata, Migrate in 5 Easy Steps

Many businesses are shifting from Oracle to Snowflake due to its superior scalability, reduced total cost of ownership, minimal maintenance, and unique Cloud features. Snowflake is designed for the Cloud, enabling easy interfacing without infrastructure concerns, offers a pay-as-you-go model, isolating computer workloads, and reducing costs of analytical operations. Snowflake can be deployed on major Cloud platforms, like AWS, Azure and GCP, and operates globally, supporting extensive data operations with minimal maintenance and is based on SQL, ensuring ease of use and a fail-safe structure. SQL Server to Snowflake in 4 Easy Steps

Snowflake Migration Best Practices

While acknowledging the advantages of transitioning from Oracle to Snowflake, it’s advisable to follow recommended best practices offered by experts. These practices can guarantee a secure data migration and optimize the use of Snowflake’s many features for organizations. Compare Databricks to Snowflake

- Which data from Oracle must be moved to Snowflake?

If you are moving data from on-premise Oracle to Snowflake, please think about which data you can leave behind, and which data you actually need to move for operations. Efficiently balancing data storage between Oracle on-premise and Snowflake will help save on costs.

- Estimate the volumes to be migrated from Oracle to Snowflake

Plan for the amount of data that will be migrated upfront. How many small (< 10 GB), medium (10GB- 100GB) and large (> 100 GB) tables are there to be migrated? The large tables will need special effort, as the migration process should be completed in a timely manner and not across weeks, as fresh data will be continually added to these tables till cutover. Consider investing in a tool like BryteFlow Ingest that can handle multi-threaded parallel extraction techniques. Snowflake CDC With Streams and a Better CDC Method

- Oracle CDC should be part of the process

When migrating data, remember that at cutover, Oracle CDC using log-based change data capture will be required to sync data with changes at source, so that there is a smooth transition, no data is lost, and the process is seamless. Oracle CDC (Change Data Capture): 13 things to know

- Ongoing Data Cataloging in the Oracle to Snowflake journey

Ensure automated data cataloging happens throughout the migration process. This solution should provide a continuous and scalable data flow for analytics that can be audited. Oracle to Snowflake with BryteFlow

- Securing End-to-End Encrypted Connections

All connections between Oracle data sources and the Snowflake data cloud must be secured using end-to-end encryption. This step is needed to ensure data does not leak and it remains safe during the migration. Learn about Oracle CDC to Kafka

- Effective Cost Planning and Management for Oracle to Snowflake Migration

Snowflake’s pay-as-you-go model reduces infrastructure costs by migrating data to the Cloud and enables efficient capital allocation. However, for this to happen you need to work out the precise resources required each month and the approximate pricing based on estimated usage. Planning teams should assess costs before initiating the Oracle to Snowflake migration. Compare BryteFlow with Matillion and Fivetran for loading to Snowflake

- How much Data Storage will you need?

Estimate the volume of data/storage and the duration necessary for Oracle to Snowflake migration. When dealing with over 50 TB of storage and time constraints, consider using physical storage devices to move data. Oracle Replication in Real-Time

- Be Realistic about Migration Timelines

A lot of factors play a role in determining practical migration schedules. For instance, fixed deadlines for offloading on-premise Oracle database data may complicate the Snowflake migration due to potential unforeseeable issues like network glitches or equipment breakdowns. It’s always wise to incorporate a buffer when planning timelines. Connect Oracle to Databricks and Load Data the Easy Way

- Define Role-based Data Access Requirements

Identify the users who would need data access, who they are, roles and responsibilities, their access frequency, and the speed at which they require data access. Make sure they understand the per-query pricing model and the effect on costs. SQL Server to Snowflake in 4 Easy Steps

- New ELT Approach for migrating from Oracle to Snowflake

Consider using ELT (Extract Load Transform) rather than ETL (Extract Transform Load). ETL conducts transformations before loading data to the data cloud, leading to a more complex, time-consuming, and costly migration process. Conversely, ELT performs data transformations after data is loaded into the Cloud, enabling organizations to transform raw data as per requirement and convenience, making for a smooth data loading process and conservation of resources. ELT works well for cloud-native data warehouses like Snowflake since data transformation occurs within the target destination. BryteFlow for ELT in Snowflake

- Evaluate Your Network Speed to move from Oracle to Snowflake

Assess the bandwidth and connectivity between your Oracle server and Snowflake, taking into account source and target region/location, etc. This assessment will determine the time required for actual Cloud data migration.

- Implement Dynamic Sensitive Data Masking

Ensure that your technology stack incorporates dynamic data masking features to secure sensitive data during transit. This serves as an added layer of protection against unauthorized access to sensitive data. As an aside, BryteFlow provides automated data masking as an option.

Oracle to Snowflake Mapping – Data Types and Other Objects

When transferring data from Oracle to Snowflake, data may require transformation to align with specific business requirements. Apart from these tailored adjustments, there are some noteworthy points for seamless Oracle to Snowflake migration. Source to Target Mapping Guide

- Issues may arise due to character set disparities between the data source and target in Oracle and Snowflake. Notably, Snowflake accommodates various character sets, including UTF-8 and UTF-16. A comprehensive list can be accessed here.

- Snowflake views closely resemble Oracle views, provided that the Oracle view doesn’t rely on proprietary syntax or functions exclusive to Oracle. The latter may require substitution in Snowflake during migration. About Source to Target Mapping

- Migration from existing Oracle functions and procedures to Snowflake is not straightforward due to the more Java-oriented nature of Snowflake functions and procedures. Details on Snowflake functions can be found here.

- Maintaining a materialized view in Oracle involves additional overhead, unlike in Snowflake where it operates transparently. Although Snowflake incurs maintenance charges in the form of credits, the administration part is alleviated for users.

- Snowflake’s Stored Procedures enable the expansion of Snowflake SQL through the integration of JavaScript, allowing the inclusion of programming features like branching and looping. Specific details regarding Snowflake Stored Procedures can be found here.

- For those transitioning from an Oracle background, familiarity with the renowned DUAL table is essential. Snowflake supports querying on the DUAL table. Consequently, any logic reliant on the DUAL table in Oracle can be readily compiled in Snowflake without requiring alterations.

- Snowflake lacks support for packages, unlike Oracle. Thus, if your Oracle source database contains packages, the logic from those packages must be transitioned into Snowflake procedures (JavaScript) and functions (JavaScript), or the logic needs to be relocated to the application layer.

- For those who have utilized Oracle’s ROWID in their code, an alternative must be employed, as Snowflake does not support ROWID functionality.

- Unlike Oracle, Snowflake does not incorporate the concept of indexes. In Oracle, indexes are commonly used to address performance issues, often created on tables for various reasons. In the Oracle world, indexes are a widely utilized tool for performance tuning, a practice seen in almost every project.

- In Snowflake, the conventional method of deducting two dates using the minus operator in Oracle is not applicable. Instead, Snowflake requires the use of the DATEDIFF built-in function to achieve the same result.

- The use of Oracle HINTs in Snowflake SQL is not supported. Thus, any Oracle HINTs present in the SQL must be removed during migration to Snowflake. Snowflake’s automatic handling of most optimization processes eliminates the need for manual tuning.

- Date and time formats demand careful attention in creating data pipelines. Snowflake offers flexibility in this realm as well. If a custom format is employed for dates or times in the file being inserted into a table, it can be explicitly specified using the “File Format Option.” The complete list of date and time formats supported can be found here

- Snowflake’s comprehensive type system encompasses a wide range of basic and sophisticated data types, incorporating complex nested structures such as structs and arrays.

- During the transfer of data from Oracle to big data systems, maintaining data integrity can often be challenging due to the absence of support for SQL constraints. However, Snowflake alleviates this concern by fully supporting various SQL constraints such as UNIQUE, PRIMARY KEY, FOREIGN KEY, and NOT NULL constraints. This robust support ensures that data migration occurs as intended. . Learn about Oracle to SQL Server Migration

The table below outlines the Oracle data types and their respective equivalents in Snowflake. Summary of the same can be found here.

| Oracle Data Type | Snowflake Data Type |

| number | BYTEINT, SMALLINT, INTEGER, BIGINT, DECIMAL (except precision and scale cannot be specified.) |

| float | float |

| number | numeric, decimal |

| float | char(Up to 16MB) |

| varchar2/nvarchar2 | varchar(Up to 16MB) |

| char(n) | char varying(n) |

| float | real |

| date | date |

| timestamp(Only HH:MI:SS) | time |

| timestamp | timestamp_ntz(0) |

| timestamp with local tz | timestamp_ltz(0) |

| timestamp with tz | timestamp_tz(0) |

| binary_float/binary_double | varbinary |

| clob/ varchar2 | variant |

| clob/ varchar2 | array |

| binary_double | binary(Up to 8M) |

| binary_float | binary(Up to 8M) |

| blob | binary |

| char(s byte), 1 <= size <= 2000 | varchar(4) |

| char(s char), 1 <= size <= 2000 | varchar(4) |

| clob | varchar |

| date | timestamp_ntz(0) |

| float(precision), 1 <= p <= 126 | varchar(255) |

| integer | varchar(255) |

| long raw | binary |

| long(2147483648 bytes) | varchar(65535) |

| nchar(s char), 1 <= size <= 2000 | varchar(4) |

| nclob | varchar |

| number(*,s), -84 <= s <= 127 | char(255) |

| number(p,s), 1 <= p <= 38, -37 <= s <= 37 | number(p,s), 1 <= p <= 38, 0 <= s <= 37 |

| number(p,s), 1 <= p <= 38, -84 <= s <= 127 | char(size), 40 <= size <= 130 |

| nvarchar2(s char), 1 <= size <= 4000 | varchar(4) |

| raw(size), 1 <= size <= 2000 | binary(size), 1 <= size <= 2000 |

| rowid | varchar(18) |

| timestamp(precision) with time zone, 0 <= p <= 9 | timestamp_tz(precision), 0 <= p <= 9 |

| timestamp(precision), 1 <= p <= 9 | timestamp_ntz(precision), 1 <= p <= 9 |

| varchar2(s byte), 1 <= size <= 4000 | varchar(size), 4 <= size <= 4000 |

| varchar2(s char), 1 <= size <= 4000 | varchar(size), 4 <= size <= 16000 |

| months_between(dt1, dt2) | months_between(dt1, dt2) |

| sysdate | current_timestamp(0) |

| to_char(datetime, format) | to_char(datetime, format) |

| to_date(str, format) | to_timestamp(str, format) |

| trunc(datetime) | trunc(datetime) |

Oracle to Snowflake Migration Steps

These are the steps in the migration process from Oracle Database to Snowflake.

- Why are you moving from Oracle to Snowflake – Defining Migration Objectives

Before the Oracle to Snowflake migration begins, you must define the goals and objectives. This involves recognizing the primary drivers for the Oracle to Snowflake migration, such as cost reduction, enhanced performance, scalability, or improved security. These goals can be categorized into business and technical objectives. Evaluate your aims and collaborate with key stakeholders to establish these objectives. They should be specific, measurable, achievable, relevant, and time-bound and communicated to all stakeholders directly or indirectly affected by the migration.

- Evaluate your current Oracle environment before moving to Snowflake

Thoroughly analyze your current data setup to understand the extent and complexity involved in migrating. Know your various data sources such as databases, data warehouses, files, and applications. Determine the size and growth rate of the data, assess the existing data processing and analytical capabilities, evaluate performance, review security measures, recognize limitations or challenges, address business requirements, and identify any compliance or dependencies. Connect Oracle to Databricks and Load Data the Easy Way

- Oracle Data Inventory Assessment

Assess your current Oracle database, considering data volumes, schemas, tables, stored procedures, views, and interdependencies. Prior to commencing Oracle to Snowflake data migration, it’s essential for you to document the data and information slated for migration.

- Data Selection and Segmentation

Transferring data from Oracle sources to Snowflake Cloud can be streamlined by adhering to a structured process, leveraging advanced tools, and making use of valuable resources. Enterprises should initiate the process by selecting and segmenting data using tools like ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform). ELT for Snowflake

- Oracle to Snowflake Schema and Data Mapping

Develop a Snowflake schema that aligns with your Oracle schema, accommodating both normalized and denormalized data models. Examine and adapt your data so it conforms to the Snowflake structure, ensuring alignment of data types, formatting, and schema compatibility.

- Define your Oracle to Snowflake migration approach

The next step involves defining a strategy for migration. This entails outlining which data will be migrated, selecting suitable migration tools and procedures, and establishing a timeline for the migration process. Multiple migration strategies exist for Snowflake, such as lift and shift migration, parallel migration, phased migration, hybrid migration, and cloud-only migration. The most appropriate strategy will depend on your organization’s existing data environment, business requirements, and available resources. About Cloud Migration Challenges

- Creating a plan for Oracle Snowflake integration

The findings from the assessment phase will help in forming a comprehensive plan for implementing the Oracle to Snowflake migration. This includes determining the optimal data architecture, configuring the data warehouse, and strategizing data ingestion and processing. These requirements will provide insights into initial deployment considerations for Snowflake, such as the preferred region and edition to use. Moreover, this plan will offer a better understanding of security protocols, developer usability, and ongoing support needs. By the end of this phase, you should have a ready-to-migrate Snowflake platform or a template-based code structure that can be deployed in a tailored Snowflake environment, that is built to your specifications.

- Establish a Snowflake Account

If you don’t have one, register for a Snowflake account. Choose the edition and size that aligns with your specific requirements.

- Networking Setup for moving from Oracle to Snowflake

Establish network access and connectivity between your existing on-premise systems and Snowflake. Set up Virtual Private Cloud (VPC) peering if necessary.

- Prepare for your Oracle to Snowflake migration

Once your migration strategy is established, the subsequent step is to plan the migration of data, ETL workflows, application cutover, and more. This includes strategies for migrating existing data from the current data warehouse platform, direct data ingestion from the source, data processing, testing, validation, application migration, and cutover planning.

- Initiate Pilot Phase for migrating Oracle data to Snowflake

Now you need to validate the strategy. Recognizing that transitioning Oracle to Snowflake is a resource-intensive task, the logical next step is to initiate a pilot so you can test the strategy before committing full time. This phase involves testing Snowflake in a controlled environment to ensure it aligns with your requirements. The testing process encompasses identifying a small data or application subset for migration, setting up a test environment, utilizing migration tools like BryteFlow, migrating data, testing performance, evaluating functionality, collecting feedback, and adjusting the plan if necessary based on results.

- Transition to Production for Oracle to Snowflake migration

Upon successful completion of the trial phase, proceed with the actual Oracle migration to Snowflake.

- Extracting data for Oracle to Snowflake ELT

Now you need to extract data from your Oracle database, utilizing tools such as SQL*Plus, Oracle Data Pump, or third-party migration tools like BryteFlow. About BryteFlow Ingest

- Data Staging for loading data from Oracle to Snowflake

Prepare the extracted data in formats compatible with Snowflake, such as CSV, JSON, or Parquet. Data can be held in external stages or Snowflake’s internal stages. When staging it’s better for the data to be partitioned into logical paths that have details about geographical location, date of writing and other source identifiers.

- Data Loading for Oracle to Snowflake migration

Utilize Snowflake’s data loading tools like SnowSQL, Snowpipe, or third-party migration tools to transfer the staged data from Oracle into Snowflake tables. Compare BryteFlow with Matillion and Fivetran for loading to Snowflake

- Code Adaptation for Oracle to Snowflake migration

Reconfigure any Oracle-specific SQL, PL/SQL code, stored procedures, and functions for compatibility with Snowflake’s SQL.

- Testing the code for moving data from Oracle to Snowflake

Examine the converted code to ensure proper functioning within Snowflake.

- Data Validation and Functional Testing

Verify the consistency of data in Snowflake against the data in Oracle. Conduct data quality checks and validation procedures. Test the migrated applications in Snowflake to confirm their proper operation. Automated Data Reconciliation with BryteFlow TruData

- Query Optimization and Indexing

Review and optimize SQL queries to leverage Snowflake’s unique architecture and features. Adjust indexing strategies to align with Snowflake’s indexing capabilities. With Snowflake you can get automatic indexing and do not need to do manual index management. A combination of metadata, clustering keys, and automatic indexing is used to enhance query performance.

- Final Data Synchronization in Oracle to Snowflake Migration

Perform a final data synchronization to make sure any changes made in Oracle during the migration, are synced in Snowflake. Then direct your applications and users to begin using Snowflake as the primary data source. Oracle CDC: 13 Things to Know

- Monitoring and Optimization of the Snowflake environment

Continuously monitor and optimize your Snowflake environment for high performance. This includes managing query performance and usage, optimizing the data warehouse, and processing costs.

- Backup and Disaster Recovery for Data in Snowflake

Establish backup and disaster recovery systems within Snowflake.

Why you should use BryteFlow to get your Oracle Data to Snowflake

If you need to efficiently migrate Oracle data to Snowflake, choosing BryteFlow as your Oracle replication tool can automate your entire process. It can help cut down on time and cost and spare your DBA’s time since BryteFlow is self-service, with a point-and-click interface. In cases where your tables exceed 100 GB, BryteFlow XL Ingest can be used to do the initial full refresh of data. It uses parallel, multi-thread loading and smart configurable partitioning to ingest even the heaviest datasets in minutes.

- BryteFlow uses log-based CDC replication with minimal impact on the source, low latency, and very high throughput of 1,000,000 rows in 30 seconds approx. (6x faster than Oracle GoldenGate). GoldenGate CDC Alternative

- BryteFlow delivers data in real-time using Oracle CDC from an Oracle sources to popular destinations like Snowflake, SQL Server, Azure Synapse, ADLS Gen2, Redshift, S3, BigQuery, Oracle, Databricks, Postgres, Teradata and Kafka for effective and easy consumption of data.

- BryteFlow XL Ingest effortlessly manages large data volumes through parallel, multi-thread loading and automated partitioning mechanisms designed for high-speed performance.

- BryteFlow automates every process including data extraction, CDC, merging, masking, and SCD Type-2 history. Automating Change Data Capture

- BryteFlow’s data replication minimizes Snowflake data costs by employing very low compute resources. How to load data to Snowflake fast

- With BryteFlow, data is time-stamped, and incremental changes are merged with existing data automatically.

- BryteFlow TruData provides automated data reconciliation with row counts and columns checksum, so there is no incomplete or missing data.

- As a data replication tool, BryteFlow provides high availability out-of-the-box.

- You get ready-to use data on Snowflake with automated data type conversion, no tedious data prep required. Snowflake CDC With Streams and a Better CDC Method

- BryteFlow can be set up in a matter of hours and you can get delivery of data in just 2 weeks.

- BryteFlow offers comprehensive replication support for all Oracle versions, covering Oracle 12c, 19c, 21c, and upcoming releases for long-term compatibility. BryteFlow for Oracle CDC

How to migrate data from Oracle to Snowflake Step by Step with BryteFlow

Prerequisites: Please ensure that all prerequisites have been met and all security and firewalls have been opened between all the components.

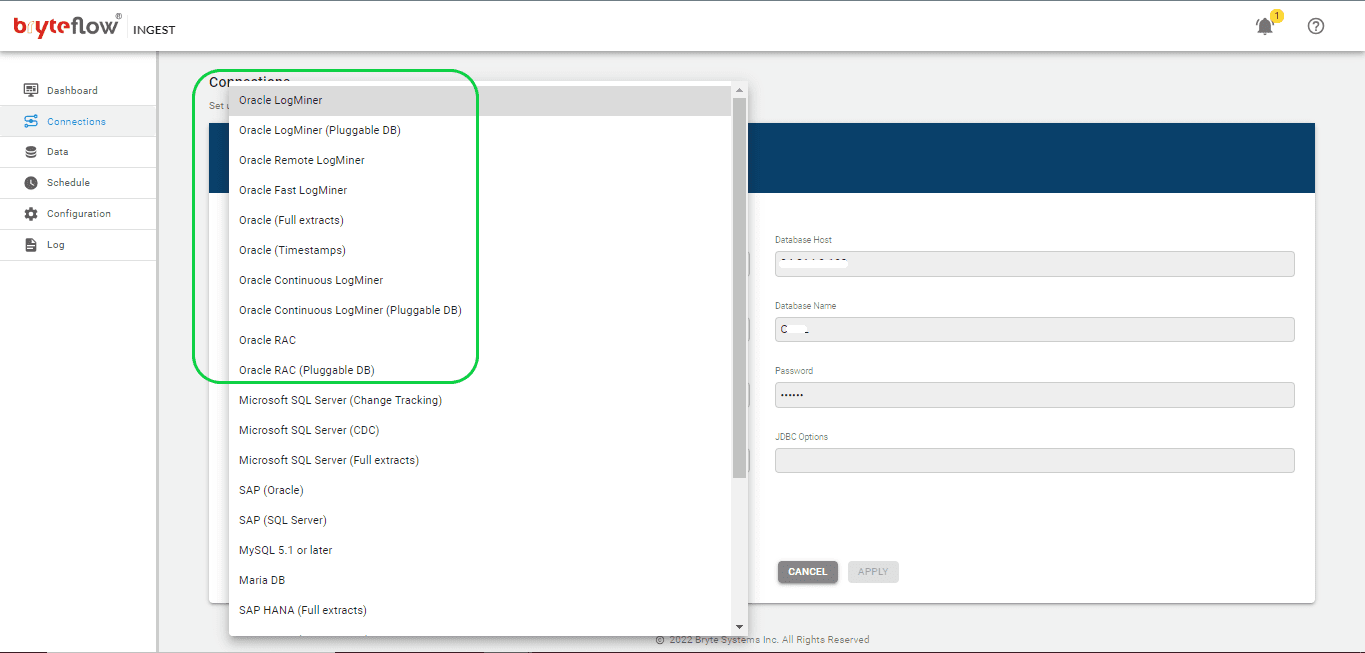

Step 1:Once the BryteFlow Ingest software is installed, set up Oracle as the source connection under “Connection Tab” and test the connection.

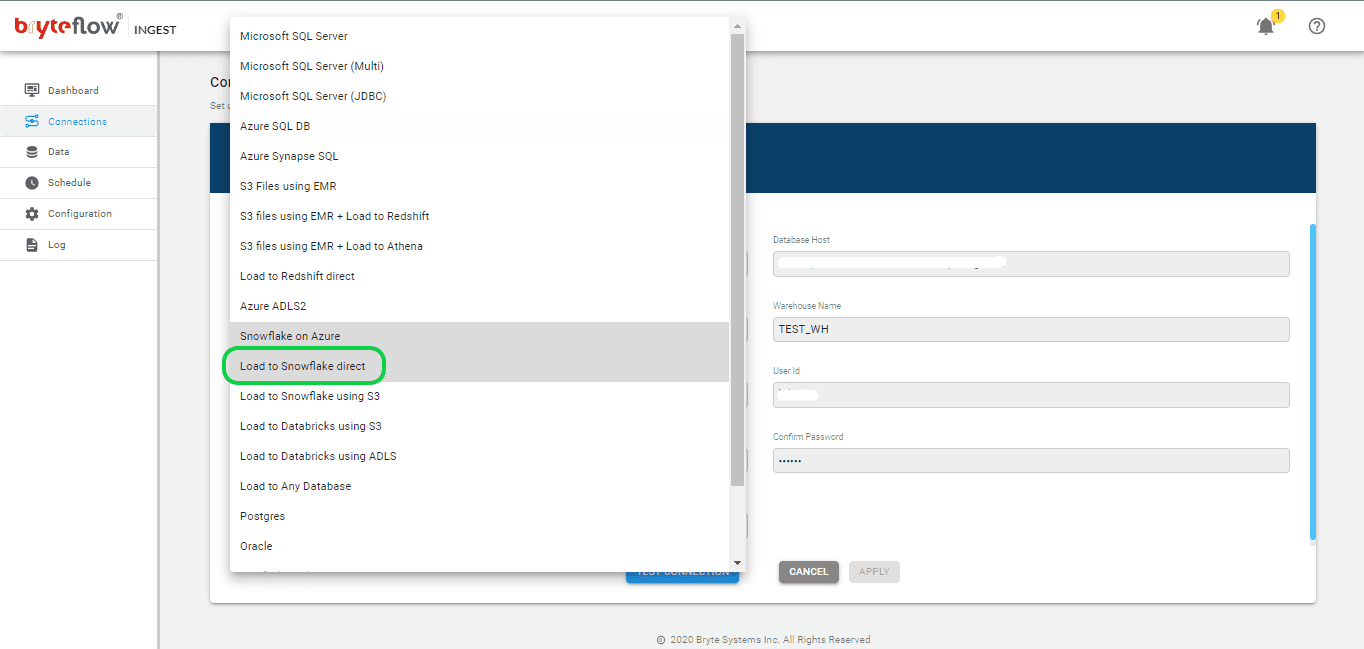

Step 2: Set up destination as Snowflake under “Connection Tab” and test the connection,

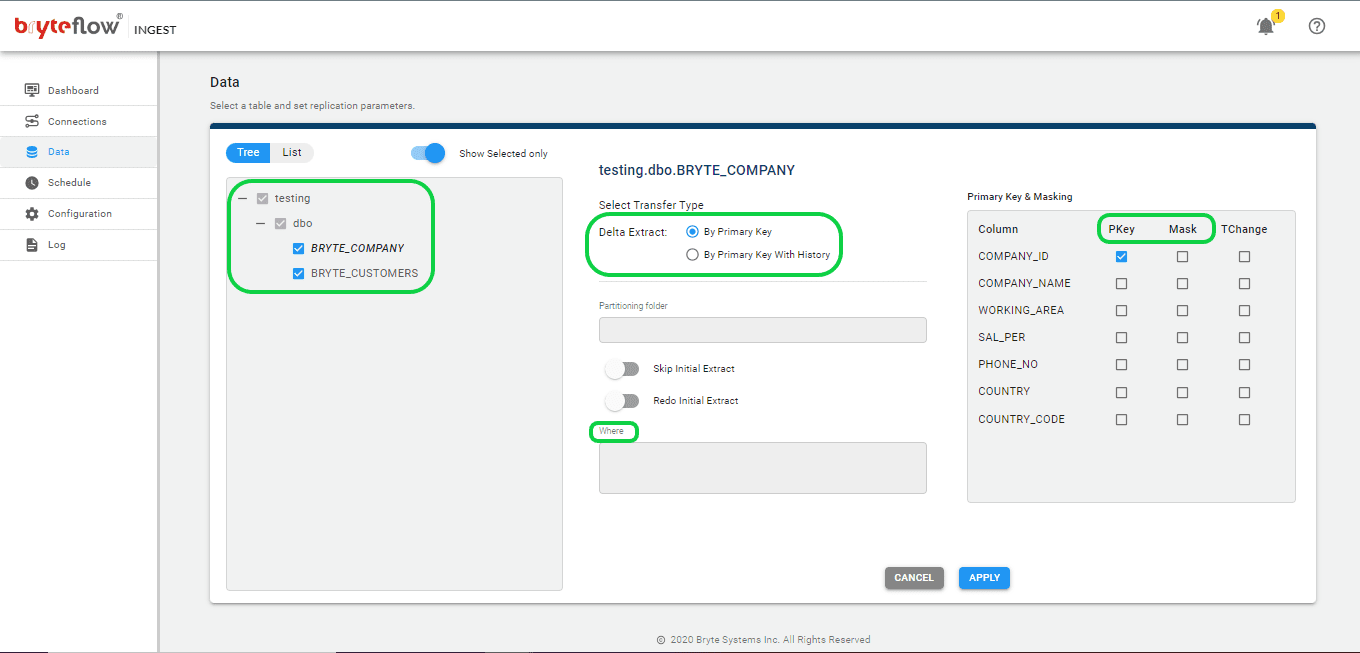

Set up the source table(s) to be replicated, select the primary key and the transfer type for each table. ‘By Primary key with History’ automatically keeps SCD Type2 history on Snowflake. ‘By Primary Key’ transfer type keeps a mirror of the source, without history. Additionally, you can filter out the unwanted data using the “Where” option and Byteflow Ingest also gives you the option to “mask” the sensitive data in the column.

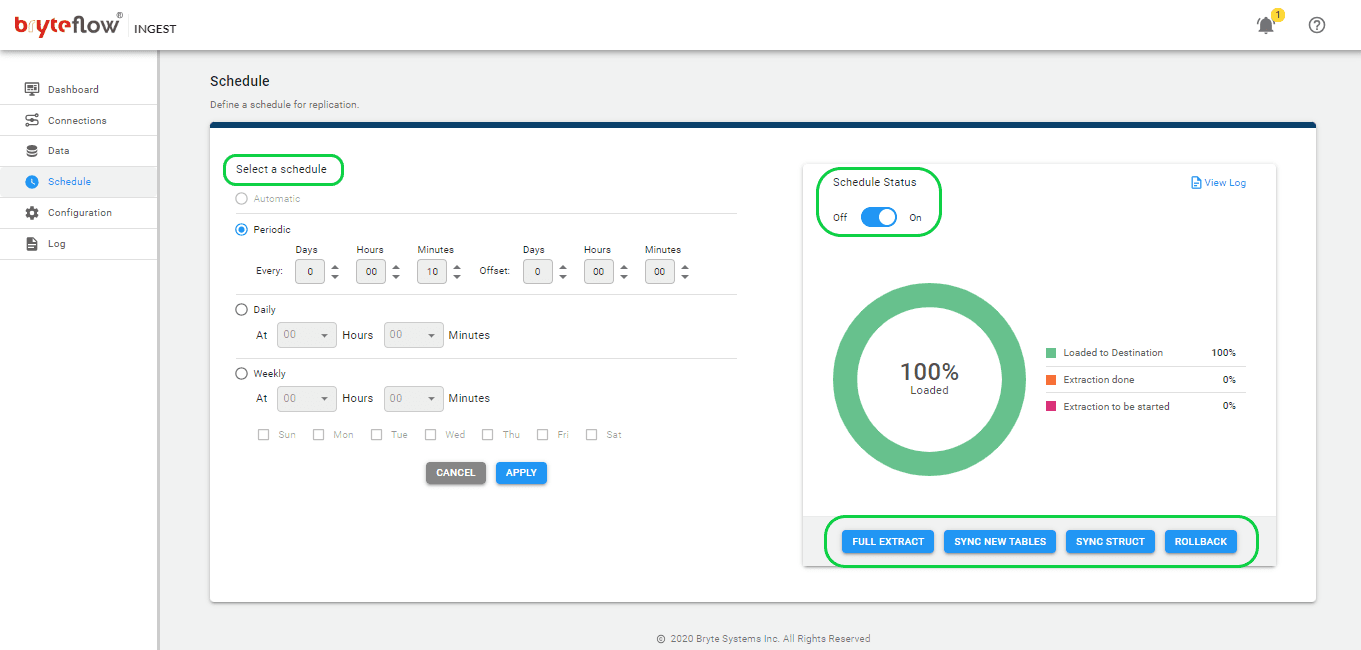

Once the tables are selected, then just schedule your data replication time and get your data flowing to Snowflake in near real-time. Here you have the option to select “Periodic”, “Daily” and “Weekly”. The “Full extract” option is for initial load (For very large tables, XL Ingest is the best option). The “Sync New Tables” is for adding new tables after replication is enabled for other tables, the “Sync Struct” is for replicating any structure change of the existing table and “Rollback” is to go back into any successful previous run (mainly used when replication has stopped due to an outage).

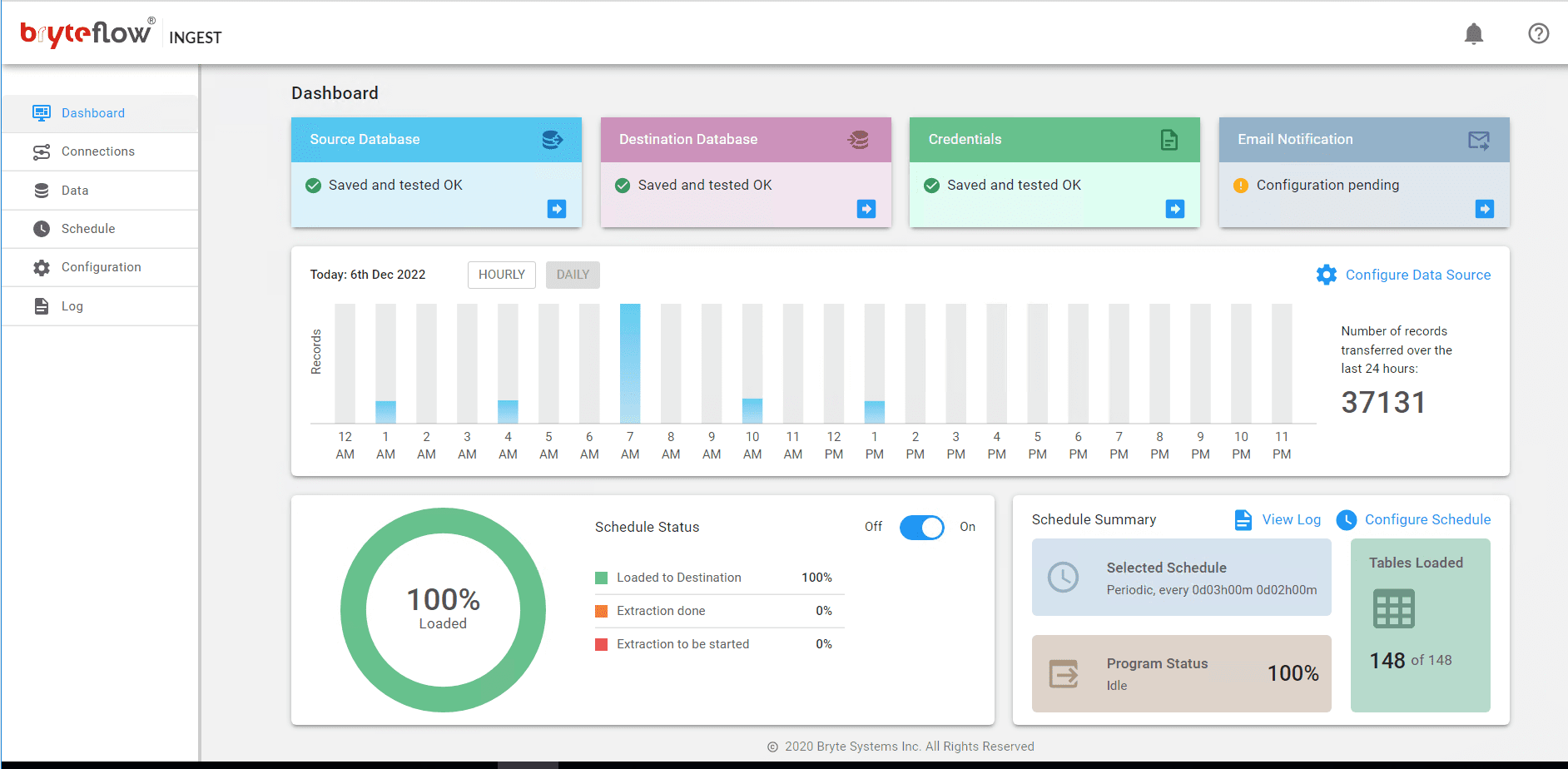

Monitor the progress in the “Dashboard”. The Dashboard gives you a detailed overview of what is happening in BryteFlow Ingest. The details of the total records loaded, and number of tables loaded, whether the Ingest is currently loading or not, status of the connection etc.

We have discussed the advantages and challenges of migrating from Oracle to Snowflake along with the strategies and steps involved, and reasons why BryteFlow is the ideal no-code Oracle to Snowflake replication tool. Do contact us for a Demo