BryteFlow SAP Data Lake Builder for real-time SAP ETL

Modernize your SAP Business Warehouse with an enterprise-wide SAP Data Lake

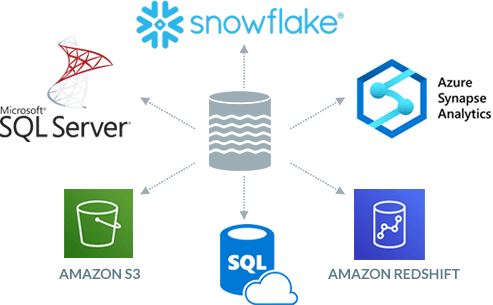

Are you looking to modernize your SAP Business Warehouse and integrate SAP data on scalable platforms like Snowflake, Redshift, S3, Azure Synapse, ADLS Gen2, Azure SQL Database, Google BigQuery, Postgres, Databricks, SingleStore or SQL Server? Do you need to merge SAP data with data from heterogeneous sources in real time for analytics? An SAP cloud data lake handles both storage and analytics and helps you get the most from your SAP ERP data. Compared with a traditional on-premise SAP BW, an SAP cloud data lake or SAP cloud data warehouse is far more agile, flexible, scalable and cost-effective.

Please note: SAP OSS Note 3255746 informed SAP customers that using SAP RFCs to extract ABAP data from applications external to SAP (on-premise and cloud) is banned, both for customers and for third-party tools.

Build an SAP Data Warehouse. Replicate SAP ERP data at application level in real-time, with business logic intact

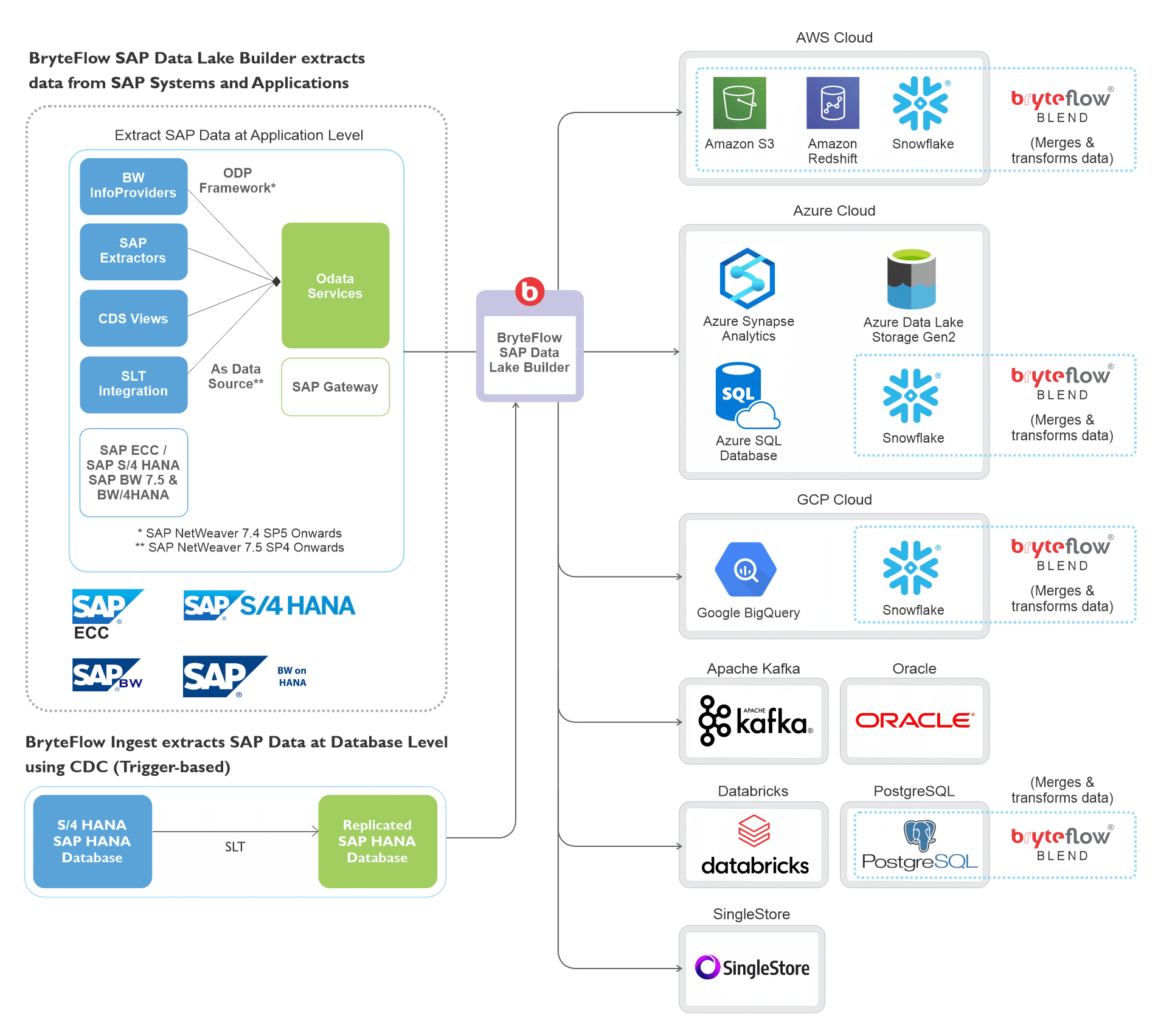

If you need SAP data integration or SAP replication without hassle, BryteFlow has developed an SAP ETL tool for it: the BryteFlow SAP Data Lake Builder. It extracts data from SAP ERP applications like SAP ECC, S/4HANA, SAP BW and SAP HANA at the application level, using the Operational Data Provisioning (ODP) framework and OData services, and replicates it with business logic intact to your SAP Data Lake or SAP Data Warehouse. Setup of data extraction and analysis of the SAP source application are completely automated. Your data is ready to use on the target for various use cases, including Analytics and Machine Learning.

Option to ETL SAP data at database level with BryteFlow Ingest

You can also extract SAP data at the database level using CDC with BryteFlow Ingest. Extract Pool and Cluster tables with just a few clicks to your SAP Data Warehouse.

SAP ETL Tool Highlights

- The SAP ETL tool automates data extraction, using OData services to extract both the initial load and incremental changes (deltas). It can connect to SAP data extractors or CDS views to get the data.

- If access to the underlying database is available, it can also perform log-based Change Data Capture of ERP data from the database using transaction logs. It can extract data from Pool and Cluster tables to the SAP Data Warehouse or Data Lake.

- BryteFlow enables automated upserts to keep the SAP data lake on Snowflake, Redshift, Amazon S3, Google BigQuery, Databricks, Azure Synapse, ADLS Gen2 or Azure SQL DB (or SQL Server) continually updated for real-time SAP ETL.

- Automated SCD Type 2 history on the SAP data warehouse or data lake preserves the history of every transaction.

- Zero coding is required for any process, including data extraction, merging, mapping, masking, or type 2 history. No integration with third-party tools like Apache Hudi is required.

- Data in your SAP Data Lake or SAP Data Warehouse is analytics-ready, with best practices applied for high-performance integration into Snowflake, Redshift, S3, Azure Synapse, Azure SQL DB and SQL Server.

- For S3, an enterprise-grade Data Preparation Workbench with a point-and-click interface lets you easily separate and categorize raw data from enriched or curated data.

- BryteFlow SAP Data Lake Builder provides easy configuration of ERP data for partitioning, file types and compression.

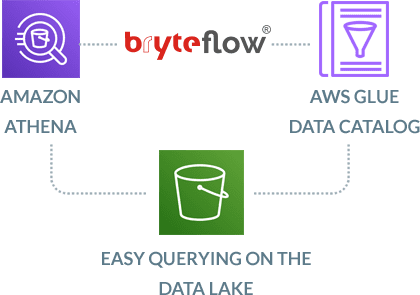

- Automated integration with Amazon Athena and the Glue Data Catalog (at API level) for an SAP Data Lake on AWS.

- Automated upserts and transformation on Amazon S3 with BryteFlow Blend enables ready-to-use data models for Machine Learning, AI, and Analytics.

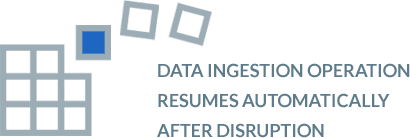

- Built-in resiliency with automatic network catch-up.

BryteFlow SAP Data Lake Builder Technical Architecture

Automate extraction of SAP application data via OData services and build an SAP Data Lake or SAP Data Warehouse without coding.

The SAP ETL tool that builds your SAP Data Lake or SAP Data Warehouse without coding

BryteFlow SAP Data Lake Builder extracts and replicates SAP ERP data at application level, keeping business logic intact

The BryteFlow SAP Data Lake Builder connects to SAP at the application level. It gets data exposed as OData services via SAP BW ODP Extractors or CDS Views to build the SAP Data Lake or SAP Data Warehouse. It replicates data from SAP systems like ECC, HANA, S/4HANA and SAP Data Services. SAP application logic, including aggregations, joins and tables, is carried over and does not have to be rebuilt, saving effort and time. It can also connect at the database level and perform log-based Change Data Capture to build the SAP Data Lake or SAP Data Warehouse. A combination of the two approaches can also be used.
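
As a rough illustration of the OData side of this, the sketch below parses an OData V2 JSON payload of the kind SAP Gateway returns for an ODP-based service. It assumes the common V2 layout (`d.results` for rows, `__next` for paging, `__delta` for a delta link carrying a `!deltatoken`); the service URL and field names in the example are hypothetical, and this is a minimal sketch of the general technique, not BryteFlow's implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Optional
from urllib.parse import unquote


@dataclass
class ODataPage:
    """One page of an OData V2 response, split into its useful parts."""
    records: list[dict[str, Any]] = field(default_factory=list)
    next_link: Optional[str] = None   # more pages of the same load follow
    delta_link: Optional[str] = None  # URL to request the next delta


def parse_odata_v2_page(payload: dict[str, Any]) -> ODataPage:
    """Extract records, the paging link and the delta link from a V2 JSON body."""
    body = payload.get("d", {})
    # Drop the per-record __metadata block and keep only business fields.
    records = [
        {k: v for k, v in row.items() if k != "__metadata"}
        for row in body.get("results", [])
    ]
    return ODataPage(records, body.get("__next"), body.get("__delta"))


def delta_token(delta_link: str) -> Optional[str]:
    """Pull the value of !deltatoken='...' out of a delta link, if present."""
    marker = "!deltatoken="
    if marker not in delta_link:
        return None
    # Take the text after the marker up to the next query option, URL-decode it,
    # then strip the surrounding single quotes.
    raw = unquote(delta_link.split(marker, 1)[1].split("&", 1)[0])
    return raw.strip("'")
```

The saved delta link (or token) is what a later run would request to receive only the changes since the previous extraction.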

No-Code, Real-time, Continuous ERP Data Integration

Get automated upserts to keep your SAP Data Lake or SAP Data Warehouse continually updated in real time. No third-party tool like Apache Hudi is required, and there is zero coding for any process, including data extraction, merging, masking, or type 2 history. DDL creation is automated too, with automated data type mapping and recommended keys.
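
The merge step behind automated upserts can be pictured as applying a batch of change records, in order, to a table keyed on its primary key. The minimal sketch below assumes each change carries a hypothetical operation flag (`'I'` insert, `'U'` update, `'D'` delete) in an `op` column; the flag values and the SAP field names in the example are chosen for illustration only.

```python
from typing import Any

Row = dict[str, Any]


def apply_changes(
    table: dict[tuple, Row],
    changes: list[Row],
    key_cols: tuple[str, ...],
    op_col: str = "op",
) -> dict[tuple, Row]:
    """Upsert or delete each change, in order, into a table keyed by key_cols."""
    for change in changes:
        key = tuple(change[c] for c in key_cols)
        op = change[op_col]
        data = {k: v for k, v in change.items() if k != op_col}
        if op == "D":
            table.pop(key, None)  # deleting a key that is not present is a no-op
        elif op in ("I", "U"):
            # Inserts and updates are treated alike: later values overwrite earlier ones.
            merged = dict(table.get(key, {}))
            merged.update(data)
            table[key] = merged
        else:
            raise ValueError(f"unknown operation flag: {op!r}")
    return table
```

Treating inserts and updates the same way makes the merge idempotent for replayed inserts, which is useful when a batch has to be re-applied after a failure.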

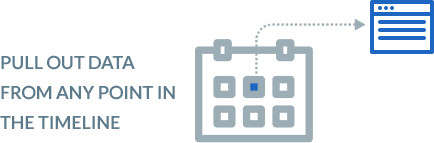

Time-series data with Automated SCD Type 2 History

Maintains the full history of every transaction with options for automated data archiving. You can go back and retrieve data from any point on the timeline. This versioning feature is invaluable for historical and predictive trend analysis.
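
SCD Type 2 keeps every version of a row together with a validity window, which is what makes point-in-time retrieval possible. The sketch below is a minimal in-memory illustration that assumes integer timestamps and hypothetical `valid_from`/`valid_to` fields; it shows the general technique, not BryteFlow's storage format.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Version:
    key: str
    attrs: dict[str, Any]
    valid_from: int
    valid_to: Optional[int] = None  # None marks the current (open) version


def scd2_apply(history: list[Version], key: str, attrs: dict[str, Any], ts: int) -> None:
    """Close the current version of `key` (if it changed) and append a new one."""
    current = next((v for v in history if v.key == key and v.valid_to is None), None)
    if current is not None:
        if current.attrs == attrs:
            return              # no real change, so keep the open version
        current.valid_to = ts   # end the old version where the new one starts
    history.append(Version(key, dict(attrs), ts))


def as_of(history: list[Version], key: str, ts: int) -> Optional[dict[str, Any]]:
    """Return the attributes of `key` that were valid at time `ts`, if any."""
    for v in history:
        if v.key == key and v.valid_from <= ts and (v.valid_to is None or ts < v.valid_to):
            return v.attrs
    return None
```

Because each window is half-open (`valid_from <= ts < valid_to`), exactly one version matches any timestamp after the first load.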

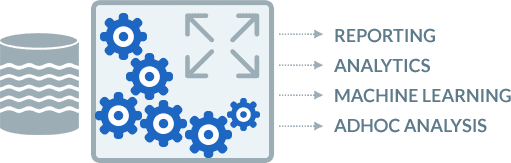

Automated data transformation – access ready data in your SAP Data Lake for Machine Learning, AI and Analytics

Transform, remodel, schedule, and merge data on your AWS data lake from multiple sources in real-time with BryteFlow Blend, our data transformation tool, and get ready-to-use data models for Machine Learning, AI and Analytics. No coding required.

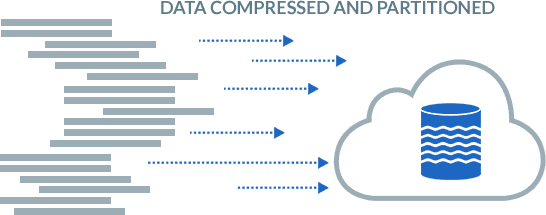

Smart Partitioning and Compression of Data

BryteFlow SAP Data Lake Builder ingests data into the SAP Data Lake or SAP Data Warehouse and provides an intuitive, point-and-click interface for configuration of partitioning, file types and compression. This delivers faster query performance.
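
Partitioning on a data lake usually means writing files under Hive-style `column=value` prefixes so that query engines can skip whole folders. The sketch below builds such an S3 object key from a record's date; the folder layout, the use of the SAP `ERDAT` (created-on, YYYYMMDD) field and the file-name suffixes per compression codec are all assumptions for illustration.

```python
from datetime import datetime

# Hypothetical mapping of compression codec to the file-name suffix used.
SUFFIXES = {"snappy": ".snappy.parquet", "gzip": ".gz.parquet", "none": ".parquet"}


def partition_key(prefix: str, table: str, sap_date: str, part_no: int,
                  compression: str = "snappy") -> str:
    """Build an S3 object key partitioned by year/month/day of an SAP DATS value."""
    day = datetime.strptime(sap_date, "%Y%m%d")  # DATS values are stored as YYYYMMDD
    suffix = SUFFIXES[compression]
    return (f"{prefix.rstrip('/')}/{table.lower()}/"
            f"year={day:%Y}/month={day:%m}/day={day:%d}/"
            f"part-{part_no:05d}{suffix}")
```

For example, a `VBAK` row with `ERDAT = "20240315"` would land under `.../vbak/year=2024/month=03/day=15/`, so a query filtered on March 2024 only reads that month's folders.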

Automated Integration with Glue Data Catalog and Amazon Athena enables easy querying on the data lake

For an AWS data lake, the SAP ETL tool makes the data available on the data lake to Amazon Athena, so as the data is ingested, you can query it in Athena. The integration with Glue Data Catalog is at the API level, so there is no need to wait or schedule crawlers. Run ad hoc queries on the data lake with Amazon Athena or with Redshift Spectrum, if Amazon Redshift is part of your stack.
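
Registering partitions through the AWS Glue API instead of a crawler amounts to sending one `PartitionInput` per new folder. The helper below only builds that request structure for Parquet data, so it runs without AWS credentials; the S3 location and column list in the example are hypothetical, and this is a sketch of the general technique, not how BryteFlow calls the API.

```python
from typing import Any

# Standard Hive SerDe and input/output formats for Parquet files.
PARQUET_SERDE = {
    "SerializationLibrary": "org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe",
}


def parquet_partition_input(values: list[str], location: str,
                            columns: list[dict[str, str]]) -> dict[str, Any]:
    """Build a Glue PartitionInput for a Parquet folder such as .../year=2024/month=03/."""
    return {
        "Values": values,  # one value per partition key, in partition-key order
        "StorageDescriptor": {
            "Columns": columns,
            "Location": location,
            "InputFormat": "org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat",
            "OutputFormat": "org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat",
            "SerdeInfo": dict(PARQUET_SERDE),  # copy so callers cannot mutate the shared dict
        },
    }
```

With credentials in place, a list of these could be passed to `boto3.client("glue").batch_create_partition(DatabaseName=..., TableName=..., PartitionInputList=[...])`, after which Athena can see the new partition without waiting for a crawler run.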

Deep integration with Cloud services for SAP ETL

BryteFlow SAP Data Lake Builder uses cloud services as required and automates SAP ETL. It is embedded in the cloud and delivers high performance with low latency. It replicates data with application logic intact to SAP Data Lakes and SAP Data Warehouses on Snowflake, S3, Redshift, Azure Synapse, Azure SQL Database and SQL Server. We also replicate SAP data to Kafka.

Modernize your SAP BW with a Cloud SAP Data Lake

Prepare and store SAP data on your data lake of choice and get SAP out of its silo. Access raw SAP data without limits on your data lake and make it your workhorse, putting it to uses including Reporting, Analytics, Machine Learning, sharing with other applications, ad hoc business analysis to uncover unexpected insights, and easy joining with non-SAP data.

Get built-in resiliency with automatic network catch-up

The BryteFlow SAP Data Lake Builder has an automatic network catch-up mode. After a power outage or system shutdown, it resumes where it left off once normal conditions are restored.
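
Resuming where a run left off generally relies on a durable checkpoint: the extraction position (for example, the last OData delta token) is saved only after a batch has been written to the target. The sketch below shows that ordering with a JSON file; the file name and the `fetch`/`write` callables are hypothetical stand-ins, not BryteFlow components.

```python
import json
import os
from pathlib import Path
from typing import Callable, Optional


def load_checkpoint(path: Path) -> Optional[str]:
    """Return the last committed position, or None on the very first run."""
    if not path.exists():
        return None
    return json.loads(path.read_text())["position"]


def save_checkpoint(path: Path, position: str) -> None:
    """Write to a temp file, then rename it over the old checkpoint."""
    tmp = path.with_suffix(".tmp")
    tmp.write_text(json.dumps({"position": position}))
    os.replace(tmp, path)  # a single rename, atomic on POSIX filesystems


def run_once(path: Path,
             fetch: Callable[[Optional[str]], tuple[list, str]],
             write: Callable[[list], None]) -> None:
    """Fetch changes after the saved position, write them, then commit the new position."""
    start = load_checkpoint(path)
    batch, next_position = fetch(start)
    write(batch)                          # if this raises, the checkpoint is untouched
    save_checkpoint(path, next_position)  # commit only after a successful write
```

If the process dies between `write` and `save_checkpoint`, the next run re-fetches the same batch, which is why this pattern pairs naturally with an idempotent upsert on the target.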

Consolidate ERP Data with BryteFlow SAP Data Lake Builder

Fast SAP ETL: No need to rebuild business logic at destination

The BryteFlow SAP Data Lake Builder extracts SAP data directly from SAP applications (using SAP BW ODP Extractors or CDS Views as OData Services), so the data arrives with application logic intact, which is a huge time-saver.

Avoid SAP Licensing Issues when creating your SAP Data Lake

Some users cannot connect to the underlying database due to SAP licensing restrictions; the BryteFlow SAP Data Lake Builder allows access to the data directly without licensing issues. For database access, BryteFlow Ingest can be used for database log-based Change Data Capture.

Run queries on the SAP Data Lake itself

The BryteFlow SAP Data Lake Builder prepares, transforms, and merges your data on your data lake. Enrich the data as required or build curated data assets that the entire organisation can access as a single source of truth.

Automated, No-code and Real-Time SAP ETL without tech expertise

SAP data replication and integration is no-code and real-time with BryteFlow SAP Data Lake Builder. There is continuous data integration as changes in application data are merged on the destination in real-time. The point-and-click interface means you don’t have to rely on technical experts for SAP ETL processes and can save on resources.

A Scalable SAP Data Lake and a Distributed Data Approach to save on costs

Your SAP Data Lake is highly scalable, flexible, and agile. Because cloud storage is cheap, you can keep your SAP data on the data lake and move only the data you need for analytics to your data warehouse. This can significantly bring down SAP ETL costs.

Fast deployment and fast time to delivery

Set up your SAP data lake or SAP data warehouse at least 25x faster than with other tools and get delivery of data within just 2 weeks. No third-party tools are needed and configuration is very easy.