This blog covers database replication: what it is, the different types, the benefits and challenges, and the 6 best database replication tools on the market.

Quick Links

- What is Database Replication?

- Database Replication Types

- Benefits of Database Replication

- Database Replication Challenges

- Types of Database Replication Tools

- What should you look for in a Database Replication Tool?

- 6 Popular Database Replication Tools

What is Database Replication?

Database Replication is simply the copying of a primary database to one or more replica databases to keep data identical and consistent across all of them. Database replication keeps the data easily accessible, trustworthy and reliable. It creates copies of the data to act as fault-tolerant, resilient backups, should the system face some adversity like a natural calamity, virus attack or hacking. Usually, data replication is a continuous process that happens in real-time as data gets created, updated or deleted in the main database, but it can also be a one-time event or a batch replication scheduled at regular intervals.

Synchronous and Asynchronous Database Replication

Within database replication there are two basic patterns – Synchronous and Asynchronous Replication.

Synchronous replication: The Synchronous replication pattern writes data to the primary database and the replica database concurrently, so a write is only treated as complete once both copies have it.

Asynchronous replication: The Asynchronous replication pattern copies data to the replica only after the data updates have been done in the primary database. The replica can therefore lag behind the primary: changes may be shipped continuously with a short delay, or in scheduled batches.
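The difference between the two patterns can be sketched in a few lines of Python. This is a minimal in-memory illustration, not how any particular database implements replication; the `Primary` and `Replica` classes and the `flush` method are hypothetical names chosen for the example.

```python
from collections import deque


class Replica:
    def __init__(self):
        self.data = {}

    def apply(self, key, value):
        self.data[key] = value


class Primary:
    def __init__(self, replica, synchronous):
        self.data = {}
        self.replica = replica
        self.synchronous = synchronous
        self.pending = deque()  # changes not yet shipped (asynchronous mode only)

    def write(self, key, value):
        self.data[key] = value
        if self.synchronous:
            # Synchronous: the write is not complete until the replica has it.
            self.replica.apply(key, value)
        else:
            # Asynchronous: acknowledge now, ship the change later.
            self.pending.append((key, value))

    def flush(self):
        # Ship queued changes in the order they were made.
        while self.pending:
            self.replica.apply(*self.pending.popleft())


sync_replica = Replica()
sync_primary = Primary(sync_replica, synchronous=True)
sync_primary.write("order:1", "paid")

async_replica = Replica()
async_primary = Primary(async_replica, synchronous=False)
async_primary.write("order:1", "paid")
lag_before_flush = "order:1" not in async_replica.data  # replica is behind
async_primary.flush()  # replica catches up
```

In the asynchronous case the replica only sees the change after `flush()` runs, which is the replication lag that the synchronous pattern avoids at the cost of slower writes.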

Database Replication Types

Transactional Replication

In this method of data replication, a full copy of the database is made first. After that, incremental data updates are treated as data changes. Data changes are replicated in real-time from the main database to the replica database in the same sequence as they happen, so transactional consistency is maintained. Transactional replication is used in server-to-server environments; it captures every change and replicates it consistently.

Snapshot Replication

Snapshot Replication is generally used where data updates do not happen frequently. Snapshot replication delivers the complete data (snapshot) present in the database at any moment and does not monitor further changes to data. It is slower than transactional replication since it copies multiple records (comprising the whole database) from source to replica. Snapshot replication is ideal for the initial sync between publisher and subscriber, but you cannot rely on it for subsequent updates.

Merge Replication

Merge replication is the process by which data from two or more databases is aggregated into one database. It is a complex replication pattern, since the publisher and subscriber can both make changes independently to the database, and it is usually used in client-server environments. This method starts by distributing a snapshot of the data to replicas and then keeps the data in sync across the system. Each node can independently make changes to data, but all updates are combined into a unified entity.
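To make the "combined into a unified entity" step concrete, here is a minimal sketch of merging two copies that were changed independently. It assumes a last-writer-wins policy based on a timestamp stored with each value; that policy and the `merge` function are assumptions for illustration, and real merge replication systems offer other conflict resolution rules.

```python
def merge(publisher, subscriber):
    """Combine two copies; for each key keep the version with the newer timestamp."""
    merged = dict(publisher)
    for key, (value, ts) in subscriber.items():
        if key not in merged or ts > merged[key][1]:
            merged[key] = (value, ts)
    return merged


# Each value is stored as (data, last_modified_timestamp).
publisher = {"cust:1": ("Ann", 10), "cust:2": ("Bob", 12)}
subscriber = {"cust:2": ("Robert", 15), "cust:3": ("Cy", 11)}

merged = merge(publisher, subscriber)
```

Here `cust:2` was changed on both sides, so the later change from the subscriber is kept, while rows that exist on only one side are carried over unchanged.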

Key-Based Replication

Key-based data replication uses a replication key to earmark, search and modify only specific data that has changed after the last update. This reduces load and speeds up the backup process, since only the changes in the isolated information are to be updated. Though key-based replication is quick to refresh new data, a drawback is that it cannot replicate deletes in data.
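The following sketch shows why key-based replication is light on the source but misses deletes. It assumes an `updated_at` column serves as the replication key; the column name and the `sync` function are hypothetical.

```python
def sync(source_rows, replica, last_key):
    """Copy rows whose replication key is newer than the last one seen."""
    changed = [r for r in source_rows if r["updated_at"] > last_key]
    for row in changed:
        replica[row["id"]] = dict(row)
    # Remember the highest key value so the next run starts from there.
    return max((r["updated_at"] for r in changed), default=last_key)


source = [
    {"id": 1, "name": "Ann", "updated_at": 100},
    {"id": 2, "name": "Bob", "updated_at": 105},
]
replica = {}
last_key = sync(source, replica, 0)  # first run copies both rows

# Row 2 is updated and row 1 is deleted at the source.
source = [{"id": 2, "name": "Bobby", "updated_at": 110}]
last_key = sync(source, replica, last_key)
```

After the second run the replica has the new name for row 2, but row 1 is still present: a deleted row has no key value to compare, so the delete is never seen.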

Peer-to-Peer Replication

Peer-to-peer replication, also called Active-Active Geo-Distribution, depends on continual transactional data using nodes. In the Active-Active mode all nodes constantly transmit data to one another to sync the database. All nodes are writable – anybody over the global network can modify data and all the other nodes will reflect the changes irrespective of geographical location, enabling real-time consistency of data.

Log-based Incremental Replication

Log-based incremental replication is based on reading changes from the database's transaction log (for example, the binary log in MySQL), which records changes made to the primary database including inserts, updates and deletes. Many modern databases support log-based replication including MySQL, PostgreSQL, Oracle and SQL Server. This is one of the most efficient forms of database replication since it has minimal impact on source systems.
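As a rough illustration of the idea, the sketch below replays a list of log entries onto a replica and remembers how far it has read. The entry layout (`lsn`, `op`, `key`, `row`) is made up for the example and does not match the log format of any specific database.

```python
change_log = [
    {"lsn": 1, "op": "insert", "key": 1, "row": {"name": "Ann"}},
    {"lsn": 2, "op": "insert", "key": 2, "row": {"name": "Bob"}},
    {"lsn": 3, "op": "update", "key": 2, "row": {"name": "Bobby"}},
    {"lsn": 4, "op": "delete", "key": 1, "row": None},
]


def apply_log(log, replica, last_lsn):
    """Replay entries newer than last_lsn, in log order; return the new position."""
    for entry in log:
        if entry["lsn"] <= last_lsn:
            continue  # already applied in an earlier run
        if entry["op"] == "delete":
            replica.pop(entry["key"], None)
        else:  # insert or update
            replica[entry["key"]] = entry["row"]
        last_lsn = entry["lsn"]
    return last_lsn


replica = {}
position = apply_log(change_log[:2], replica, 0)     # first run: two inserts
position = apply_log(change_log, replica, position)  # later run: only new entries
```

Because the log carries deletes as well as inserts and updates, the replica ends up matching the source without the source tables having to be queried.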

Benefits of Database Replication

- Database Replication provides trustworthy data for Analytics

Database replication provides a single source of truth for businesses by delivering data from different sources to a data lake or data warehouse where it can be subjected to analytics using BI tools. This enables you to derive valuable, actionable insights from trustworthy data to enhance business outcomes.

- Database Replication ensures higher availability of data through backups

Data replication stores copies of the database in different locations so even if one system fails, the backups are still available for access, ensuring business continuity and minimizing data loss.

- Database Replication provides scalability and performance

Database replication distributes data processing across multiple nodes, reducing load on the servers. This enhances system performance and scalability, ensuring optimal performance during peak times. It allows multiple users to access data concurrently without impacting query performance.

- Database Replication enables faster access to data and reduces latency

By replicating data closer to users’ locations, latency is reduced and access speed is improved, especially for users located in different geographic locations.

- Database Replication improves application performance

Database replication enhances scalability, performance and availability of database-dependent applications since it allows you to run analytics and BI tools on non-production systems.

- Database Replication helps to ensure data integrity

Data replication processes and updates data in target systems so it is the same as in the source, which ensures integrity and data consistency.

- Database Replication delivers data redundancy

Through database replication you can achieve redundancy to protect the read performance and availability of important operational databases and to maintain business continuity.

- Database Replication can reduce movement of data

Database replication enables a distributed database system, allowing replicas to be saved in proximity to the point of transaction or where the data is entered.

Database Replication Challenges

Database replication is great, but it does come with its own set of challenges.

Database replication can be complex

For businesses, replication systems can be complex to set up and maintain. This complexity can grow sharply depending on the number of databases involved, the volume of data and the replication structure.

Database replication can be challenged by inconsistent data

If data needs to be synchronized across multiple locations in a distributed system, especially with multi-master replication setups, data inconsistencies and conflicts may result. Ensuring that all replicas are consistently updated requires careful planning and management.

Database replication can increase resource costs

In database replication you need to store multiple copies of data across locations, which can incur additional storage and processing expenses.

Data loss might be an issue in Database Replication

You may lose data if database configurations or primary keys used for validating data integrity in replicas are inaccurate.

Database Replication can involve a lot of administrative effort

It takes a lot of effort from IT resources to manage and maintain the data replication process, constant synchronization and failover scenarios. Multiple databases must be monitored and maintained, all of which can burden your IT resources.

Database Replication can be affected by Latency Issues

In case of replication between sites in different geographic locations, high latency may affect the ‘real-timeliness’ of the data adversely, especially if the data is being used for real-time applications and business insights that need the most current data.

Types of Database Replication Tools

There are different types of database replication tools and software available in the market.

Built-In Database Replication Tools

Modern databases like PostgreSQL, MySQL, Oracle, SQL Server etc. are bundled with built-in tools and functionalities for data replication.

Specialized Data Replication Tools

Intended specifically for database replication, these tools offer features like data compression, real-time replication, and automated failover. Oracle GoldenGate and Fivetran are examples.

ETL (Extract Transform Load) Tools

ETL tools can also be used as data replication software. Tools like SSIS (SQL Server Integration Services) and Informatica are usually used to extract data, transform it to a consumable format and load it into the destination database.

CDC (Change Data Capture) Tools

CDC tools capture the changes that happen at the source and carry them out on the target database. They are one of the most efficient forms of data replication since they only transmit the deltas or changed data. Oracle GoldenGate, Qlik Replicate and our very own BryteFlow are some of them. For the record, BryteFlow can also be used as a data migration, data integration and ETL tool besides a tool for real-time data replication.

Data Integration Tools

Besides database replication, data integration tools can also combine data from different sources, carry out data quality checks and synchronize data. They include tools like Informatica, SnapLogic and IBM InfoSphere.

Cloud-based Replication Services

Many Cloud vendors like AWS and Azure offer their own database replication services to customers. For example, AWS provides AWS DMS (AWS Database Migration Service) and Azure offers Azure Database Migration Service.

Streaming CDC Replication with Message Brokers

Message brokers like Apache Kafka, RabbitMQ, Redis, Amazon SQS, ActiveMQ, and others can carry real-time streaming replication, which also serves as a CDC mechanism. Apache Kafka is distributed messaging software and, as with other message queues, Kafka reads and writes messages asynchronously. Kafka CDC transforms databases into data streaming sources. Debezium connectors get data from source databases into Kafka in real-time, while Kafka connectors stream the data from Kafka to various targets, again in real-time, with Kafka acting as the message broker.

What should you look for in a Database Replication Tool?

The Database Replication Tool should be Cloud-Native

It should be designed from the ground up for Cloud data warehouses, since most organizations need to get their data to the Cloud for analytics or machine learning purposes. Such Cloud-native tools use the services available in the Cloud to optimize performance and to deliver cost-efficiency and better data management.

The Database Replication Tool should be compatible with a wide range of sources and targets

The data replication tool needs to be compatible with and support your source databases and destination platforms (e.g., data warehouses, Cloud services). The tool should integrate seamlessly for efficient data transfer and to enhance operational performance.

The Database Replication Tool should have good ETL capabilities

A good database replication tool needs to deliver some degree of ETL. It should be capable of extracting data from sources efficiently, transforming it to a consumable format and loading it into the data repository with low latency. This keeps data accessible, consistent and always current.

The Database Replication Tool should be highly scalable to handle increasing loads

You need to select a database replication tool that can handle your current data volume and velocity while also being scalable to accommodate future growth without compromising performance.

The Database Replication Tool should deliver data in real-time

Look for a database replication tool that can provide real-time data replication to ensure synchronization across systems, especially if immediate data availability is critical. It should also be capable of batch replication if needed.

The Database Replication Tool should be easy to deploy and use

Focus on getting a database replication tool with a user-friendly interface that is easy to configure, use and maintain. Avoid tools that require extensive training to use and expert technical resources to maintain them.

Can the Database Replication Tool handle schema evolution?

The database replication tool should be capable of handling schema evolution, that is, changes to the database schema (such as new columns) that must be carried over to the target while preserving existing data and ensuring compatibility with the previous schema.
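One simple form of schema evolution, a new column appearing at the source, can be sketched with Python's built-in `sqlite3` module. This is only an illustration under the assumption that both sides are SQLite databases; the `sync_columns` function and the `customers` table are hypothetical, and real tools also have to deal with renamed, dropped and retyped columns.

```python
import sqlite3


def sync_columns(source, target, table):
    """Add to the target any column that exists in the source but not in the target."""
    def columns(conn):
        # PRAGMA table_info returns (cid, name, type, notnull, dflt_value, pk)
        return {row[1]: row[2] for row in conn.execute(f"PRAGMA table_info({table})")}

    src_cols, tgt_cols = columns(source), columns(target)
    added = []
    for name, col_type in src_cols.items():
        if name not in tgt_cols:
            target.execute(f"ALTER TABLE {table} ADD COLUMN {name} {col_type}")
            added.append(name)
    return added


source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
source.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
target.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
target.execute("INSERT INTO customers VALUES (1, 'Ann')")

# The source schema changes: a new column is added.
source.execute("ALTER TABLE customers ADD COLUMN email TEXT")

added = sync_columns(source, target, "customers")
rows = target.execute("SELECT id, name, email FROM customers").fetchall()
```

The existing row in the target is preserved and simply has no value yet for the new `email` column, so queries written against the previous schema keep working.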

Does the Database Replication Tool provide Change Data Capture?

Change Data Capture is a much-needed component of the replication process for low-impact, high-performance, reliable replication. Make sure your database replication tool provides it.

The Database Replication Tool must integrate with your existing ecosystem

Ensure the data replication tool integrates smoothly with your current data management ecosystem, including ETL processes, analytics platforms, and data governance tools, to avoid complications in your data environment.

The Database Replication Tool should provide customization and automation

The database replication tool should have advanced customization options to accommodate your requirements. It should also have a high degree of automation, so your team can focus on productive tasks rather than the time-consuming chores of replication like data prep and data cleansing.

The Database Replication Tool should have support for a wide range of APIs

The database replication tool should have extensive API support for easy integration with various system architectures.

The Database Replication Tool should have security features in place

Select a database replication tool with robust encryption, strict access controls, and compliance with data protection standards like GDPR or HIPAA to safeguard your sensitive data.

Is the Database Replication Tool reliable and fault-tolerant?

The database replication tool should have strong recovery features, such as checkpointing, automatic retries, automated network catchup, and transaction consistency to ensure data integrity and minimize data loss.
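Checkpointing and automatic retries can be sketched as follows. This is a simplified illustration, not the recovery logic of any particular tool: the `replicate` function, the use of `ConnectionError` as the transient failure, and the in-memory checkpoint are all assumptions, and a real implementation would persist the checkpoint and wait between retries.

```python
def replicate(changes, apply, checkpoint=0, max_retries=3):
    """Apply changes after `checkpoint`, retrying transient failures.

    Returns the new checkpoint: the number of changes applied so far.
    """
    for index in range(checkpoint, len(changes)):
        for attempt in range(1, max_retries + 1):
            try:
                apply(changes[index])
                break
            except ConnectionError:
                if attempt == max_retries:
                    return index  # give up for now; resume from here later
        checkpoint = index + 1
    return checkpoint


# A target that fails twice on change "c2" before recovering.
applied = []
failures = {"remaining": 2}


def flaky_apply(change):
    if change == "c2" and failures["remaining"] > 0:
        failures["remaining"] -= 1
        raise ConnectionError("network down")
    applied.append(change)


changes = ["c1", "c2", "c3"]
first_checkpoint = replicate(changes, flaky_apply, max_retries=2)
final_checkpoint = replicate(changes, flaky_apply, first_checkpoint, max_retries=2)
```

The first run stops at the failing change and reports how far it got; the second run resumes from that point, so nothing is skipped and nothing is applied twice.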

Does the Database Replication Tool provide a good ROI?

Evaluate the pricing model (e.g., subscription, volume-based, perpetual license) of the database replication tool against your budget, considering both initial and long-term costs, including maintenance and support.

The Database Replication Tool must be high performance with high throughput and low latency

Make sure the database replication tool delivers high performance with various features like parallelism, partitioning and compression. It should be capable of delivering high volumes of data within seconds to provide data in near real-time.
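How these three features work together can be sketched with the Python standard library: split the rows into partitions, compress each partition, and process the partitions in parallel. The partition size, worker count and JSON encoding are arbitrary choices for the example, not recommendations.

```python
import json
import zlib
from concurrent.futures import ThreadPoolExecutor


def partition(rows, size):
    """Split rows into consecutive chunks of at most `size` rows."""
    return [rows[i:i + size] for i in range(0, len(rows), size)]


def pack(chunk):
    """Serialize and compress one partition for transfer."""
    return zlib.compress(json.dumps(chunk).encode("utf-8"))


def unpack(blob):
    """Reverse of pack: decompress and deserialize one partition."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


rows = [{"id": i, "status": "shipped"} for i in range(1000)]
chunks = partition(rows, 250)

# Compress the partitions in parallel; map() keeps them in their original order.
with ThreadPoolExecutor(max_workers=4) as pool:
    blobs = list(pool.map(pack, chunks))

restored = [row for blob in blobs for row in unpack(blob)]
compressed_size = sum(len(blob) for blob in blobs)
raw_size = len(json.dumps(rows).encode("utf-8"))
```

Smaller payloads cut transfer time, and independent partitions mean a slow or failed chunk can be retried without restarting the whole load.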

The Database Replication Tool must have advanced monitoring and management features

Make sure the database replication tool has advanced monitoring and management features for comprehensive oversight and control.

6 Popular Database Replication Tools

Here we present some of the premier database replication tools in the market with varying capabilities and strengths. Of course we may be biased, but we do believe our BryteFlow deserves its place at the top!

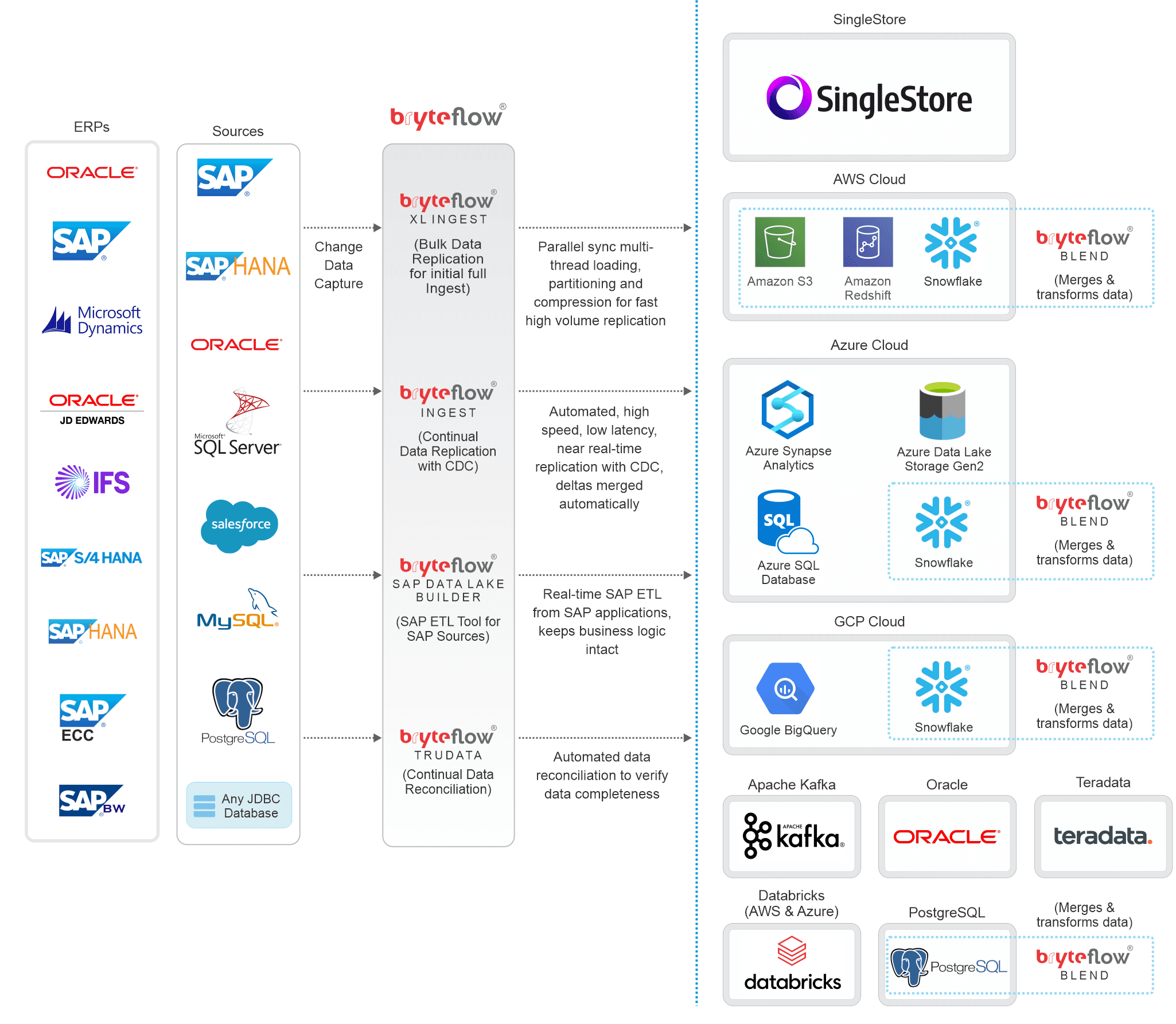

BryteFlow

BryteFlow is a data replication and data integration tool that is completely no-code and easy to use. BryteFlow’s flagship products BryteFlow Ingest and XL Ingest work seamlessly to deliver enterprise-scale data in real-time from transactional databases like SAP, Oracle, SQL Server, MySQL and PostgreSQL to popular platforms like AWS, Azure, SQL Server, BigQuery, PostgreSQL, Snowflake, SingleStore, Teradata, Databricks and Kafka, providing ready-for-consumption data on the destination. BryteFlow replicates data to on-premise and Cloud destinations, is quick to deploy, and you can start receiving data in as little as 2 weeks. It supports both real-time and batch replication as per your requirement.

Features of the BryteFlow Database Replication Tool

- Supports rapid ingestion of very heavy datasets with parallel, multithread loading, smart configurable partitioning, and compression.

- BryteFlow XL Ingest performs the initial full ingest (>50GB), followed by BryteFlow Ingest to sync incremental data and deltas with the source in real-time, using log-based CDC. It supports a variety of CDC options for SQL Server including CDC for Multi-Tenant Databases.

- Very high throughput of 1,000,000 rows in 30 seconds – 6x faster than Oracle GoldenGate.

- Completely no-code replication tool – automates every process including schema and table creation, data extraction, Change Data Capture, merging, masking, data mapping, DDL and SCD-Type2 history.

- BryteFlow’s optimized in-memory engine merges inserts, updates, and deletes automatically with existing data so your data always stays updated.

- Automates data type conversions (e.g. Parquet-snappy, ORC) so data delivered is immediately ready for consumption. Enables configuration of custom business logic to collect data from multiple applications or modules into AI and Machine Learning ready inputs.

- Has a user-friendly, point-and-click interface that any business user can use.

- Supports data versioning: data is saved as time-series data, including archived data.

- Your data is secure since it is under your control and never leaves your network. BryteFlow Ingest encrypts the data at rest and in transit. It uses SSL to connect to data warehouses and databases.

- Provides special support for ETL from SAP applications and databases with the BryteFlow SAP Data Lake Builder. It extracts data from SAP systems with business logic intact and automates table creation on target.

- Has an automated network catchup feature that resumes replication automatically from the point of stoppage after normal conditions are restored.

- Seamlessly integrates with BryteFlow TruData to verify data completeness using row counts and columns checksum.
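The idea of verifying completeness with row counts and column checksums, mentioned in the last feature above, can be sketched generically as follows. This is not BryteFlow TruData's implementation; the `table_fingerprint` function and the XOR-of-hashes checksum are assumptions chosen to keep the example short.

```python
import hashlib


def table_fingerprint(rows, columns):
    """Return (row_count, per-column checksum), independent of row order."""
    checksums = {}
    for col in columns:
        digest = 0
        for row in rows:
            h = hashlib.sha256(repr(row[col]).encode("utf-8")).digest()
            # XOR makes the result order-independent; a production check
            # would use a combiner that does not cancel out duplicate values.
            digest ^= int.from_bytes(h[:8], "big")
        checksums[col] = digest
    return len(rows), checksums


source_rows = [{"id": 1, "amount": 10}, {"id": 2, "amount": 20}]
target_ok = [{"id": 2, "amount": 20}, {"id": 1, "amount": 10}]   # same data, other order
target_bad = [{"id": 1, "amount": 10}, {"id": 2, "amount": 25}]  # one value differs

columns = ["id", "amount"]
matches = table_fingerprint(source_rows, columns) == table_fingerprint(target_ok, columns)
mismatch = table_fingerprint(source_rows, columns) != table_fingerprint(target_bad, columns)
```

Comparing fingerprints is much cheaper than comparing tables row by row, and the per-column checksums also point to which column has drifted.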

Pricing

Pricing information can be found on our pricing page.

Diagram to illustrate BryteFlow Data Replication

Informatica

Informatica provides data integration capabilities with both on-premise and Cloud deployment options. It combines advanced hybrid integration and data governance features with an intuitive self-service interface for analytical tasks. Worth mentioning is Informatica’s CLAIRE Engine, which is metadata-driven and uses machine learning to process data. However, this comprehensive, enterprise-level data integration solution is complex, expensive and takes time to deploy.

Features of the Informatica Database Replication Tool

- Replicates changes and metadata in real-time over LAN or WAN using batched or continuous replication methods.

- Replicates data over diverse platforms and databases with consistency.

- Supports low-impact CDC techniques.

- Ensures efficient data loading with InitialSync and Informatica Fast Clone. This includes Oracle replication and data streaming for targets like Greenplum and Teradata.

- Scales to accommodate data distribution, migration, and auditing needs.

- Provides encryption both in-transit and at-rest.

Pricing

Has a consumption-based pricing model. More info on pricing page.

Qlik Replicate

Qlik Replicate is a robust and user-friendly data replication solution that simplifies and accelerates the process of replicating, ingesting and streaming data across operational stores and data warehouses. It creates copies of production endpoints and distributes data across different systems. Its web-based console and replication server enable real-time data updates through high-performance change data capture (CDC) technology. It supports a wide range of data sources, including Oracle, Microsoft SQL Server, and IBM DB2.

Features of the Qlik Replicate Database Replication Tool

- Real-Time Data Replication: Replicates data in real-time using log-based CDC.

- Wide Range of Source and Target Support: Connects with a diverse array of data sources and targets for flexible integration.

- Supports big data loads with parallel threading.

- Automatically generates target schemas based on source metadata.

- Secure Data Transfer: Ensures data is transferred securely with built-in encryption protocols.

- Supports movement of SAP data to any major database, data warehouse or Hadoop, on-premise or in the Cloud.

Pricing

Qlik offers many products that are bundled together. For pricing details, you will have to contact their team.

Fivetran

Fivetran is a SaaS-based automated data integration platform that specializes in syncing data from diverse sources like databases, Cloud applications and logs. It supports efficient movement of data with minimal latency. Its powerful sync capability can update data even when schemas and APIs evolve.

Features of the Fivetran Database Replication Tool

- Provides a variety of CDC options including low-impact log-based CDC.

- Has over 400 ready-to-use source connectors, so no coding is required to extract data from sources like Salesforce, Facebook, AWS, Azure, Google Analytics, and Oracle. Users can also create custom APIs or request additional connectors if needed.

- Has high-volume agents for replicating large data volumes in real-time using log-based CDC.

- Log-free replication is possible with Teleport Sync, a specialized database replication technique that combines snapshot detail with log-based system speed.

- Fivetran is highly automated, simplifying data extraction, cleaning, and transformation with minimal coding. It provides numerous connectors, automation tools, and synchronization options to streamline data management for databases and data warehouses.

Pricing

With Fivetran you pay for monthly active rows (MAR), which are rows inserted, updated or deleted by the connectors (not total rows). The MARs are counted once per month, regardless of the number of changes. More info on pricing page.
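The "counted once per month" rule can be illustrated with a small calculation. This sketch only shows the counting idea described above, under the assumption that a row is identified by its table and primary key; it is not Fivetran's billing logic.

```python
# One month of change events: (table, primary_key, operation)
events = [
    ("orders", 1, "insert"),
    ("orders", 1, "update"),
    ("orders", 1, "update"),
    ("orders", 2, "insert"),
    ("customers", 1, "update"),
]

# A row counts once, no matter how many times it changed during the month.
active_rows = {(table, pk) for table, pk, _ in events}
monthly_active_rows = len(active_rows)
```

Five change events touch only three distinct rows here, so the month's count is three rather than five.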

Matillion

Matillion is an ETL / ELT tool that ingests data from sources into Cloud platforms like AWS, Azure, GCP and Snowflake. Besides ingestion, it makes data analytics-ready, so it can be consumed by analytics and BI tools. It uses the capabilities of Cloud platforms to load, transform, sync and manage data, to deliver data for business insights fast. Matillion is Cloud-native and has the capability to integrate and transform data from a variety of sources, including flat files, databases and Cloud applications.

Features of the Matillion Database Replication Tool

- Users can use the low-code intuitive interface to build workflows.

- Cloud-native tool, scalable and optimized to perform efficiently with Cloud providers like Google Cloud, AWS and Azure.

- Potential to use unstructured data in analytics and build AI pipelines for new GenAI use cases without coding.

- Both real-time and batch replication are possible for different kinds of data processing.

- Has several pre-built connectors to support replication from different kinds of data sources.

- Has native integration with Cloud data warehouses so vendor portability is easy.

- Provides native data transformation with the use of SQL and Python scripting.

Pricing

Matillion offers 3 pricing models as per your requirement. More info on pricing page.

Hevo

Hevo is an ETL tool that offers zero-maintenance data pipelines to sync data from 150+ sources covering SQL, NoSQL, and SaaS applications. It provides 100 pre-built native integrations customized to specific source APIs. With Hevo you can choose how data lands in your repository by performing on-the-fly actions such as cleaning, formatting, and filtering that will not impact load performance.

Features of the Hevo Database Replication Tool

- Provides pipelines to more than 150 data sources in minutes.

- No manual maintenance needed for changes in source data or APIs.

- Formats data on the fly with pre-load transformations and automatically detects and replicates schema changes.

- Processes and enriches raw data without coding.

- Intuitive dashboards for monitoring pipeline health and real-time views of data flows.

- Hevo offers end-to-end encryption for data security and compliance with major security certifications.

- Has a fault-tolerant architecture that scales seamlessly, ensuring zero data loss and low latency.

Pricing

Hevo offers a 14-day Free Trial and 3 basic pricing plans. More info on pricing page.

Conclusion

In this blog we have looked at what database replication is all about, why you need it, its benefits and challenges, and the different types of data replication. We have also looked at the different kinds of tools that can be used to perform data replication and at 6 top data replication tools on the market. If you would like to see a demo of BryteFlow, contact us.