Considering options for data migration to AWS?

There are a number of tools you can consider for migrating data to AWS. Here we take a quick look at the AWS Database Migration Service, better known as AWS DMS: how it works, the components it needs, and its pros and cons.

What is AWS DMS or AWS Database Migration Service?

AWS DMS is an AWS cloud service created to migrate data to the AWS cloud from on-premises or cloud-hosted data stores. It can migrate many kinds of data stores, including relational databases, data warehouses, and NoSQL databases. It is quite versatile and can handle a one-time data migration or perform continual data replication of ongoing changes, keeping the source and target in sync. Here’s a comparison between AWS DMS and our very own BryteFlow.

Benefits of AWS DMS

The AWS Database Migration Service handles many of the tedious, time-consuming tasks involved in data migration:

- AWS DMS is a managed service that automatically deploys, manages and monitors the hardware and software needed for migration, so you can avoid conventional tasks like capacity analysis, procurement of hardware and software, system installation and administration, and testing and debugging of systems. Your migration can start within minutes of configuring AWS DMS.

- AWS DMS allows you to scale migration resources up or down as required. If you need more capacity, you can increase the storage allocation easily and restart the migration in minutes. Alternatively, if you find you have configured excess capacity, you can downsize to match your reduced workload.

- With AWS DMS you pay only for the resources you use, since it has a pay-as-you-go model, unlike traditional licensing plans with up-front purchase costs and ongoing maintenance fees.

- AWS DMS provides automated management of the infrastructure associated with your migration server. This covers hardware, software, software patching, and error reporting.

- AWS DMS offers automatic failover. If the main replication server fails, a backup replication server takes over with hardly any interruption of service.

- AWS DMS can help you change over to a database engine that is modern and makes more financial sense, like the managed database services provided by Amazon RDS or Amazon Aurora. AWS DMS can also enable moving to a managed data warehouse like Amazon Redshift, NoSQL platforms like Amazon DynamoDB, or low-cost storage platforms like Amazon S3. If you need to keep the same database engine on modern infrastructure, that is supported too.

- Most widely used DBMS engines, including Oracle, Microsoft SQL Server, MySQL, MariaDB, PostgreSQL, Db2 LUW, SAP ASE, MongoDB, and Amazon Aurora, are supported as sources by AWS DMS.

- AWS DMS covers a wide range of targets including Oracle, Microsoft SQL Server, PostgreSQL, MySQL, Amazon Redshift, SAP ASE, Amazon S3, and Amazon DynamoDB.

- AWS DMS enables heterogeneous data migration from any supported data source to any supported target.

- Security is built in with an AWS DMS migration. Data at rest is encrypted with AWS KMS (AWS Key Management Service). During migration, SSL (Secure Sockets Layer) encrypts your in-flight data as it moves from source to target.

In a nutshell, database migration with AWS DMS is secure, fast and affordable. It is quick to set up and you pay only for the compute you use. You can even use AWS DMS free for six months when migrating databases to Amazon Aurora, Amazon Redshift, Amazon DynamoDB or Amazon DocumentDB (with MongoDB compatibility). You can migrate data from almost any of the popular open-source or commercial databases to any supported target engine.

How AWS DMS works

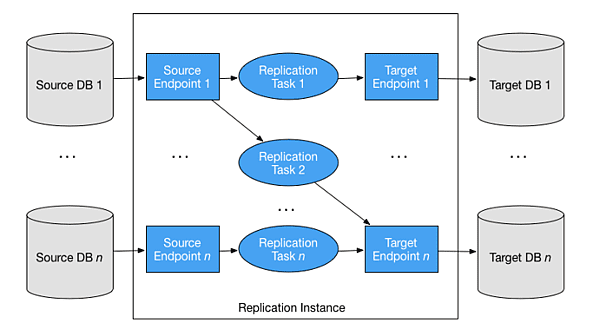

Think of AWS DMS as a server in the AWS cloud ecosystem that runs replication software. You define a source connection and a target connection so AWS DMS knows where to extract the data from and where to move it to. You can then schedule a task that runs on the server to migrate your data. AWS DMS will even create the tables and associated primary keys on the destination if they aren’t present. You also have the option to pre-create the target tables yourself. The AWS SCT (AWS Schema Conversion Tool) can be enlisted to create the target tables, indexes, views, triggers, etc.

Components used in a data migration with AWS DMS

When using the AWS Database Migration Service there are some components that you should be familiar with.

Replication instance

A replication instance refers to a managed EC2 instance that hosts replication tasks. Replication instances are of various types:

- T2/T3: These are created for configuring, developing, and testing database migration processes. These instances can also be used for periodic migration tasks.

- C4: These instances are optimized for performance and suitable for compute-intensive workloads. These instances are appropriate for heterogeneous migrations.

- R4/R5: These are memory-optimized instances and should be used for ongoing migrations and high-throughput transactions.

Endpoints

AWS DMS uses an endpoint to connect to a source or target database and transfer data. The exact settings depend on the database type, but all endpoints generally need the same details: endpoint type, engine type, encryption protocol, server name, port number, and credentials.
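As a rough illustration, the details listed above map onto the keyword arguments of the `create_endpoint` call in boto3's DMS client. The sketch below only assembles those arguments as a dictionary and does not contact AWS. The helper name `build_endpoint_args`, the host name, the identifiers and the credentials are all placeholders invented for this example.

```python
def build_endpoint_args(identifier, endpoint_type, engine, server, port,
                        username, password, database, ssl_mode="require"):
    """Assemble keyword arguments in the shape boto3's DMS create_endpoint expects."""
    if endpoint_type not in ("source", "target"):
        raise ValueError("endpoint_type must be 'source' or 'target'")
    return {
        "EndpointIdentifier": identifier,
        "EndpointType": endpoint_type,   # source or target
        "EngineName": engine,            # e.g. "postgres", "mysql", "oracle"
        "ServerName": server,
        "Port": port,
        "Username": username,
        "Password": password,
        "DatabaseName": database,
        "SslMode": ssl_mode,             # encryption in transit
    }


# Hypothetical PostgreSQL source; every value here is a placeholder.
source_args = build_endpoint_args(
    "example-postgres-source", "source", "postgres",
    "db.example.internal", 5432, "dms_user", "change-me", "appdb",
)

# With AWS credentials configured, these could be passed on as:
#   boto3.client("dms").create_endpoint(**source_args)
```

In practice the password would come from a secrets store rather than being written into the script.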

Database replication tasks

A replication task defines what data is transferred between source and target, and when. When creating a replication task you specify the source and target endpoints, the replication instance, and the migration type.
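The "what data" part of a task is expressed as a table-mapping document in JSON. The following is a minimal sketch of how such a document could be assembled with selection rules; the helper names `selection_rule` and `build_table_mappings` and the `sales` schema are assumptions made for illustration only.

```python
import json


def selection_rule(rule_id, schema, table="%", action="include"):
    """Build one DMS selection rule; '%' acts as a wildcard."""
    return {
        "rule-type": "selection",
        "rule-id": str(rule_id),
        "rule-name": str(rule_id),
        "object-locator": {"schema-name": schema, "table-name": table},
        "rule-action": action,
    }


def build_table_mappings(rules):
    """Serialize the rules into the JSON string a replication task takes."""
    return json.dumps({"rules": rules})


# Hypothetical example: migrate every table in "sales" except audit tables.
mappings = build_table_mappings([
    selection_rule(1, "sales"),
    selection_rule(2, "sales", table="audit_%", action="exclude"),
])
print(mappings)
```

The resulting string is what would be supplied as the table mappings when the task is created, alongside the endpoints and the replication instance.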

AWS DMS Migration Type Options

AWS DMS provides 3 migration type options:

Full Load

Full Load with AWS DMS migrates all the data in your database as it exists at that point; it does not replicate subsequent changes. This is a good option for a one-time migration when you do not need to capture ongoing changes.

Full Load + CDC

Full Load + CDC migrates all your data at the start and then replicates subsequent changes at the source too. AWS DMS monitors your database while the task is in progress. This is especially useful when you have very large databases and do not want to pause workloads.

CDC only

CDC only with AWS DMS replicates only the changes that have happened in the database, not the initial full load of data. This option is suitable when you are using some other method to transfer your database but still need to sync ongoing changes at the source.
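The choice between the three options comes down to two questions: does the existing data need copying, and do ongoing changes need replicating? A small sketch of that decision is shown here. The function name `choose_migration_type` is hypothetical; the returned strings are the migration type values accepted when creating a replication task through the DMS API.

```python
def choose_migration_type(copy_existing_data, replicate_ongoing_changes):
    """Map the two migration questions onto a DMS migration type value."""
    if copy_existing_data and replicate_ongoing_changes:
        return "full-load-and-cdc"   # Full Load + CDC
    if copy_existing_data:
        return "full-load"           # Full Load
    if replicate_ongoing_changes:
        return "cdc"                 # CDC only
    raise ValueError("nothing to migrate: both options are disabled")


# One-time migration with no need to capture later changes.
print(choose_migration_type(True, False))
# Data was bulk-copied by another method; only ongoing changes are needed.
print(choose_migration_type(False, True))
```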

Some AWS DMS Limitations

AWS DMS Schema migration and conversion issues

AWS DMS doesn’t usually do schema or code conversion. In a homogeneous migration, AWS DMS tries to create a target schema at the destination, but this isn’t always possible, as in the case of Oracle databases, and you may need third-party tools to create the schema. In a heterogeneous migration, AWS DMS cannot convert the schema itself, so additional effort is needed with the AWS Schema Conversion Tool (SCT). The SCT can automatically convert your source schema to a format suitable for your target; however, not all formats are supported. If the tool does not support your format, the conversion will need to be coded manually. This is one of the biggest AWS DMS limitations.

Coding requirements for incremental capture of data

AWS DMS needs a fair bit of coding for Change Data Capture of incremental loads, which can be time-consuming and effort-intensive. For example, with S3 as the target, the changes are delivered as separate records and need to be merged into the existing data by custom code.
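To give a sense of what that custom merge involves, here is a minimal in-memory sketch. It assumes each change record carries an `Op` field of `I`, `U` or `D` (mirroring the insert, update and delete indicators in DMS change files for S3), that changes arrive in commit order, and that the table has a single primary key column called `id`. The function name `apply_cdc` and the sample rows are invented for the example; a real pipeline would read and write files on S3 rather than Python lists.

```python
def apply_cdc(snapshot, changes, key="id"):
    """Apply ordered insert/update/delete records to a full-load snapshot."""
    merged = {row[key]: dict(row) for row in snapshot}
    for change in changes:
        op = change["Op"]
        row = {k: v for k, v in change.items() if k != "Op"}
        if op in ("I", "U"):
            merged[row[key]] = row          # insert or overwrite by key
        elif op == "D":
            merged.pop(row[key], None)      # delete if present
        else:
            raise ValueError(f"unknown operation: {op!r}")
    return sorted(merged.values(), key=lambda r: r[key])


snapshot = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}]
changes = [
    {"Op": "U", "id": 2, "name": "Robert"},
    {"Op": "I", "id": 3, "name": "Cy"},
    {"Op": "D", "id": 1, "name": "Ann"},
]
current = apply_cdc(snapshot, changes)
print(current)
# [{'id': 2, 'name': 'Robert'}, {'id': 3, 'name': 'Cy'}]
```

Even this simple version has to handle ordering and keys correctly; at scale the same logic must also deal with partitioning, late-arriving files and reruns.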

Ongoing replication issues

During ongoing replication, AWS DMS replicates only a limited subset of data definition language (DDL) changes. It cannot process items such as indexes, users, privileges, stored procedures, and other database changes not directly related to table data. Also, maintaining AWS DMS on an ongoing basis can be challenging at times, with daily intervention required in some environments, especially when records go missing in the replication.

AWS DMS may slow down source systems with large database migrations

If you have a large amount of data to migrate, it may prove heavy going with AWS DMS. During a full load, AWS DMS uses resources on your source database: it performs a full table scan of each source table it processes in parallel. In addition, every task created for the migration queries the source for changes as part of the CDC process, which may impact the source.

Performance

By default, AWS DMS loads 8 tables in parallel. Increasing this number may help performance to a degree when you use a very large replication server. However, if the source generates high-throughput data, replication may still lag behind.
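The parallel table count is controlled by the `MaxFullLoadSubTasks` value in the task settings JSON. The sketch below builds just that fragment. The helper name `full_load_settings` is hypothetical, and the upper bound of 49 is an assumption based on the AWS documentation and should be checked against the current docs before relying on it.

```python
import json

DEFAULT_PARALLEL_TABLES = 8
MAX_PARALLEL_TABLES = 49  # assumed documented maximum; verify before use


def full_load_settings(max_parallel_tables=DEFAULT_PARALLEL_TABLES):
    """Return a task-settings JSON fragment that sets parallel table loads."""
    if not 1 <= max_parallel_tables <= MAX_PARALLEL_TABLES:
        raise ValueError(
            f"max_parallel_tables must be between 1 and {MAX_PARALLEL_TABLES}"
        )
    return json.dumps(
        {"FullLoadSettings": {"MaxFullLoadSubTasks": max_parallel_tables}}
    )


# Example: load 16 tables at a time on a larger replication instance.
settings = full_load_settings(16)
print(settings)
```

A higher value also increases the load on the source database and the replication instance, so it is worth raising it gradually.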

BryteFlow for seamless data migrations to AWS

If you would like faster replication to AWS and completely automated ETL for AWS, we would urge you to consider BryteFlow. You can compare AWS DMS with BryteFlow. BryteFlow replicates terabytes of data in minutes using automated partitioning and parallel multi-thread loading, and creates tables automatically on the destination. Incremental data is replicated continually in near real-time to AWS, using log-based Change Data Capture. Automated data reconciliation is built in, and you get alerts and notifications in the case of missing or incomplete data. If you use S3 in your data integration, you can get seamless merges of multi-source data and transformation on S3 with BryteFlow.