This blog looks at SAP Snowflake migration: the benefits of moving data from SAP to Snowflake, a checklist to follow for a successful migration, and the tools you could use to transfer data from SAP to Snowflake. We also discuss why the fully automated BryteFlow SAP Data Lake Builder could be the easiest way to move your data from SAP to Snowflake.

Quick Links

- About SAP

- Integrated SAP ERP Modules

- SAP ERP Module Customization and Data Integration Challenges

- About Snowflake

- SAP to Snowflake Migration Benefits

- SAP to Snowflake Migration Checklist

- SAP to Snowflake Migration / Replication Tools

- How BryteFlow SAP Data Lake Builder makes SAP Snowflake integration easy

- BryteFlow SAP Data Lake Builder as a Snowflake SAP Connector

About SAP

What is SAP? The abbreviation stands for Systems, Applications, and Products in Data Processing. SAP is a market leader in ERP software and is used by 93% of the Forbes Global 2000 companies. With a market share of 27.5% (according to SAP’s annual report), SAP is used by 440,000 customers in over 180 countries. It is used by organizations big and small that are focused on improving operational efficiency and their bottom line. SAP is used in a variety of verticals including manufacturing, retail, healthcare, finance, and more.

Please note: SAP OSS Note 3255746 has notified SAP customers that the use of SAP RFCs for extraction of ABAP data from sources external to SAP (on-premise and cloud) is not permitted for customers and third-party tools.

Integrated SAP ERP Modules

A great advantage of SAP software is that it has a lot of integrated ERP modules, each dealing with a particular aspect of business:

- Financial Accounting (FI): Managing financial transactions, financial reporting, and analysis.

- Controlling (CO): Management accounting and reporting, profitability analysis.

- Sales and Distribution (SD): Managing sales processes like order processing, pricing, and billing.

- Materials Management (MM): Managing procurement processes such as purchasing and inventory.

- Production Planning (PP): Managing production processes such as production scheduling and material requirements.

- Quality Management (QM): Managing quality processes such as quality control and inspection.

- Plant Maintenance (PM): Managing plant maintenance processes such as preventive maintenance.

- Human Capital Management (HCM): Managing human resource processes such as talent management and payroll.

- Project System (PS): Managing project planning, execution, monitoring, and resource allocation.

- Business Warehouse (BW): Data warehousing and business intelligence capabilities.

Apart from these SAP ERP modules, there could be other add-on modules for specific industries and business operations. As you can see, having an SAP implementation can be instrumental in providing a holistic 360-degree view of data. Every department has access to a unified accurate view of data and can query the data leading to actionable, effective insights. However, the very advantage that SAP has in providing different ERP modules and the ability to customize them is also the factor that adds to its complexity.

SAP ERP Module Customization and Data Integration Challenges

When you customize SAP modules, you may add new fields and tables for specific requirements, which can increase the complexity of the data model. It could also create interdependencies within the SAP system. For example, making changes in one module might require modifications in another module, or creating a new report might need inputs from more data sources. Another issue could arise if a company has customized SAP to use a non-standard data format or structure; this could prove difficult to integrate with systems that expect a standard format. Upgrading SAP to a new version may also be problematic if customizations have been done previously. An upgrade may call for changes to custom code or data structures and can involve a lot of effort to make the customizations work with the new version. One way out of these challenges could be extracting, replicating, and integrating SAP data and non-SAP data on a scalable, flexible Cloud platform like Snowflake.

About Snowflake

Snowflake is a cloud data warehousing platform that is highly scalable and secure. It enables users to store, manage, maintain, and analyze huge volumes of data. Snowflake is flexible and is available on multiple cloud providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Snowflake enables organizations to run multiple workloads for use cases like Analytics, IoT, Machine Learning and Business Intelligence. It also supports a variety of data types, including structured, semi-structured, and unstructured data.

SAP to Snowflake Migration Benefits

Migrate SAP data to Snowflake and avoid installation of hardware or software

Snowflake is Cloud-native and a fully managed service. With Snowflake there is no hardware or software to be installed, configured, or managed. Ongoing maintenance, upgrades, and management are handled by Snowflake itself, which frees up your resources for more productive tasks than managing infrastructure.

Integrate SAP data on Snowflake easily with data from other sources

On Snowflake you can easily integrate SAP data from areas like Finance, HR, Purchasing, Sales, Accounts Receivable, and Accounts Payable with data sets from other sources. Snowflake can ingest semi-structured data such as JSON, Avro, Parquet, and XML easily, and the data can be queried immediately, which is not the case with SAP. A big benefit of Snowflake is that it can handle a range of data types, including structured, semi-structured, and unstructured data.

Unlock SAP data on Snowflake for different use cases

SAP data is diverse and can be present in different formats depending on customizations. Taking it to Snowflake and integrating it with data from other SAP systems, non-SAP data, and even machine-generated data brings all your data into one place, where it can be used for multiple use cases like BI, reporting, Analytics, Machine Learning, and IoT. This can help enhance ROI on your data and increase profitability and operational performance.

Migrating from SAP to Snowflake means you can separate compute and storage

Snowflake’s architecture separates compute and storage, allowing users to scale each independently based on their needs. This allows for a more efficient use of resources and reduces costs. Snowflake uses virtual compute instances for compute needs and a storage service for persistent storage of data. The Snowflake storage repository is centralized and accessible from all compute nodes. Queries, however, are processed using MPP (Massively Parallel Processing) compute clusters in which each node stores a part of the complete data set locally. Clusters can be spun up or down as required to scale with the size of the workload, so very large volumes of data can be analyzed without workloads slowing each other down. This is the best of both worlds – a shared-disk architecture, but with the high performance and scalability of a shared-nothing architecture.

Sensitive SAP data can benefit from Snowflake security controls

Snowflake security is also strong, which helps if your SAP data is sensitive and must comply with regulatory and policy requirements. Snowflake provides encryption of data, role-based access, and audit trails. It also provides automatic backup and disaster recovery capabilities.

Loading SAP data to Snowflake can lower data costs

Snowflake provides a pay-as-you-go pricing model. This means you pay only for resources you actually use. When not in use, the resources can be turned off. Snowflake compresses stored data, so storing large volumes is cost-effective. Moving data from SAP to Snowflake also means organizations are spared the cost of managing and maintaining the required data infrastructure.

Besides usage-based, per-second pricing that is billed in arrears, you can also get discounts by paying in advance with pre-purchased credits under Snowflake capacity options.
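To make per-second, usage-based pricing concrete, here is a minimal sketch of how the compute cost of a single warehouse run could be estimated. It assumes standard warehouses, where credits per hour double with each size and each start or resume is billed for at least 60 seconds. The function name and the price per credit are illustrative assumptions; actual rates depend on your edition, cloud provider, and region.

```python
# Credits consumed per hour by standard warehouse size (doubles with each size).
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}

# Each warehouse start or resume is billed for at least one minute.
MINIMUM_BILLED_SECONDS = 60


def warehouse_cost(size: str, run_seconds: int, price_per_credit: float) -> float:
    """Estimate the compute cost of one warehouse run.

    price_per_credit is an assumed figure supplied by the caller;
    it is not a published Snowflake rate.
    """
    billed_seconds = max(run_seconds, MINIMUM_BILLED_SECONDS)
    credits = CREDITS_PER_HOUR[size] * billed_seconds / 3600
    return round(credits * price_per_credit, 4)


if __name__ == "__main__":
    # A Medium warehouse running a 15-minute load at an assumed 3.00 per credit.
    print(warehouse_cost("MEDIUM", 15 * 60, 3.00))
```

Because billing stops when a warehouse is suspended, short scheduled loads on a small warehouse can cost very little compared with keeping infrastructure running all day.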

SAP to Snowflake Migration Checklist

Before migrating from SAP to Snowflake, you need to consider some important factors for a smooth transition. Think of this as a quick checklist for your SAP to Snowflake migration.

Identifying data that needs to be moved from SAP to Snowflake

You will need to identify and prioritize the data in SAP systems that needs to be moved to Snowflake, and make sure it is compatible with Snowflake integration and storage capabilities. For this you will need to learn about Snowflake architecture and how it manages and stores data.
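One practical part of checking compatibility is deciding how SAP field types will land in Snowflake. The sketch below shows one possible mapping from ABAP Dictionary types to Snowflake column types and uses it to render a table definition. The mapping, the function names, and the target table name are assumptions for illustration, not a mapping prescribed by SAP, Snowflake, or BryteFlow.

```python
# Assumed mapping from ABAP Dictionary types to Snowflake column types.
ABAP_TO_SNOWFLAKE = {
    "CHAR": "VARCHAR({length})",
    "NUMC": "VARCHAR({length})",  # numeric text: kept as text to preserve leading zeros
    "DATS": "DATE",
    "TIMS": "TIME",
    "DEC": "NUMBER({length},{decimals})",
    "CURR": "NUMBER({length},{decimals})",
    "QUAN": "NUMBER({length},{decimals})",
    "INT4": "INTEGER",
    "FLTP": "FLOAT",
}


def snowflake_type(abap_type: str, length: int = 0, decimals: int = 0) -> str:
    """Return the Snowflake column type for an ABAP type under the assumed mapping."""
    template = ABAP_TO_SNOWFLAKE.get(abap_type)
    if template is None:
        raise ValueError(f"no mapping defined for ABAP type {abap_type}")
    return template.format(length=length, decimals=decimals)


def create_table_sql(table: str, fields: list) -> str:
    """Render CREATE TABLE from (name, abap_type, length, decimals) tuples."""
    columns = ",\n  ".join(
        f"{name} {snowflake_type(abap_type, length, decimals)}"
        for name, abap_type, length, decimals in fields
    )
    return f"CREATE TABLE {table} (\n  {columns}\n);"


if __name__ == "__main__":
    # Hypothetical subset of a material master extract.
    fields = [("MATNR", "CHAR", 18, 0), ("ERSDA", "DATS", 8, 0), ("BRGEW", "QUAN", 13, 3)]
    print(create_table_sql("sap_raw.mara", fields))
```

Raising an error for unmapped types is deliberate: it surfaces custom or unusual fields early, while you are still deciding what to move.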

Reviewing SAP integration and customizations

If SAP data is integrated with other applications like BI or analytics tools, you may need to modify these integrations for a hassle-free SAP to Snowflake migration. You may need to re-implement earlier SAP customizations or even replace them for use on Snowflake.

Cleansing and Transformation before SAP to Snowflake migration

Review the source data to see if it requires cleansing or transformation before loading it from SAP to Snowflake, then devise a plan to manage the process.
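As an illustration of the kind of cleansing that SAP extracts often need, the sketch below converts SAP date strings (stored as YYYYMMDD, with 00000000 as the empty value) into real dates and removes zero padding from numeric keys. The row layout and function names are assumptions; your own extract will have different fields and rules.

```python
from datetime import date, datetime
from typing import Optional


def parse_sap_date(value: str) -> Optional[date]:
    """Convert a YYYYMMDD string to a date; blank or 00000000 becomes None."""
    value = value.strip()
    if not value or value == "00000000":
        return None
    return datetime.strptime(value, "%Y%m%d").date()


def strip_leading_zeros(value: str) -> str:
    """Remove zero padding from purely numeric keys; leave alphanumeric keys alone."""
    value = value.strip()
    if value.isdigit():
        return value.lstrip("0") or "0"
    return value


def cleanse_row(row: dict) -> dict:
    """Cleanse one extracted row (hypothetical layout: MATNR, ERSDA, MAKTX)."""
    return {
        "material": strip_leading_zeros(row["MATNR"]),
        "created_on": parse_sap_date(row["ERSDA"]),
        "description": row["MAKTX"].strip(),
    }


if __name__ == "__main__":
    raw = {"MATNR": "000000000000001234", "ERSDA": "20230115", "MAKTX": " Steel bolt "}
    print(cleanse_row(raw))
```

Whether to strip leading zeros at all is a design decision: do it only if downstream joins do not rely on the padded form.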

Assessing SAP to Snowflake migration tools

What will you use to connect and migrate from SAP to Snowflake? Options include Snowflake’s built-in tools like Snowpipe (which ingests data from a stage), SAP Data Services (SAP’s data integration and transformation tool), custom scripts, or a third-party SAP connector like our very own BryteFlow SAP Data Lake Builder, which is a fully automated tool.

Testing the SAP to Snowflake process

Before the SAP to Snowflake migration, conduct thorough testing of the process to see whether the implementation is working as it should. This helps to prevent expensive issues that could impact production data in the future.

Give some thought to data governance when bringing data across from SAP to Snowflake

Do you have data governance policies in place? Having data governance means putting scalable access policies in place for users, ensuring data is accurate, complete, and consistent, keeping data secure and auditable, and ensuring the organization complies with regulations like GDPR and HIPAA. Good data governance sets up policies for managing data, including data creation, retention, and deletion.

SAP to Snowflake migration – plan training for users

When getting data across from SAP to Snowflake, users will probably need training on how to use Snowflake and time to familiarize themselves with its features, operations, and processes. A training plan will minimize disruptions and make for a hassle-free transition.

SAP to Snowflake post-migration Testing and Validation

Once the migration is done, conduct comprehensive testing to ensure that data is accurate and not lost. The number of records from the database should be as anticipated and the table structures, application folders and directories should be updated correctly in the Snowflake database. Verify that mapping has not changed, including field types, values, and constraints, and that the system is functioning correctly. The application should be stable and business operations should not face any disruption.
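The record-count and field-type checks described above can be automated. Here is a minimal sketch that compares metadata collected from the source with metadata collected from Snowflake and reports discrepancies. It assumes you have already gathered row counts and expected column types into plain dictionaries; how you collect them, and the dictionary layout itself, are assumptions for illustration.

```python
from typing import Dict, List


def compare_tables(source: Dict[str, dict], target: Dict[str, dict]) -> List[str]:
    """Return a list of discrepancies between source and target metadata.

    Each dict maps a table name to {"rows": int, "columns": {name: type}},
    where source column types are already expressed as expected target types.
    """
    issues = []
    for table, src in source.items():
        tgt = target.get(table)
        if tgt is None:
            issues.append(f"{table}: missing in target")
            continue
        if src["rows"] != tgt["rows"]:
            issues.append(f"{table}: row count {src['rows']} != {tgt['rows']}")
        for column, col_type in src["columns"].items():
            tgt_type = tgt["columns"].get(column)
            if tgt_type is None:
                issues.append(f"{table}.{column}: missing in target")
            elif tgt_type != col_type:
                issues.append(f"{table}.{column}: type {col_type} != {tgt_type}")
    return issues


if __name__ == "__main__":
    source = {"MARA": {"rows": 1000, "columns": {"MATNR": "VARCHAR", "ERSDA": "DATE"}}}
    target = {"MARA": {"rows": 998, "columns": {"MATNR": "VARCHAR", "ERSDA": "VARCHAR"}}}
    for issue in compare_tables(source, target):
        print(issue)
```

An empty result means the checked tables match on counts and types; it does not prove that individual values are correct, so spot checks on values are still worthwhile.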

Establish data management processes after SAP to Snowflake migration

You will need to set up data monitoring and management procedures after your SAP to Snowflake migration or replication. These will include data quality checks, maintaining backups, and security protocols. Also work out a process for troubleshooting, updates, and upgrades.

SAP to Snowflake Migration / Replication Tools

The SAP to Snowflake replication tools and mechanisms discussed here can be used for data migration as well as replication. Below are some common tools that could be used to replicate or migrate SAP data to Snowflake.

Azure Data Factory for replicating SAP data to Snowflake on Azure

Azure Data Factory or ADF is a cloud-based data integration solution that enables you to create and manage data integration pipelines. It has built-in connectors for various data sources and destinations, including SAP systems and Snowflake. The process involves using ADF’s dedicated SAP connector to extract SAP data and a Snowflake connector to load data to Snowflake. The connectors need to be configured with the required credentials and connection details.

After this, a data integration pipeline can be created to extract data from SAP and load it to Snowflake. ADF has a visual interface to drag and drop components like source and destination connectors, transformations, and data flows. After the data pipeline is created, it can be scheduled to run at a specific time or interval. Pipeline performance can then be monitored using ADF’s monitoring and logging features.

Amazon AppFlow for replicating SAP data to Snowflake on AWS

Amazon AppFlow is a fully managed AWS integration service that allows you to transfer data from SaaS applications like SAP, Salesforce, Zendesk, and ServiceNow to AWS services like Amazon S3 and Redshift. It can be used for SAP Snowflake integration. AppFlow enables you to run data flows at scale and on a schedule of your choice. Data transformation can be configured, including filtering and validation, and data being transferred is automatically encrypted for security.

For moving data from SAP to Snowflake, Amazon S3 is integrated with the AppFlow SAP OData Connector and SAP data can be configured and extracted to an S3 bucket through a simple user interface. The data can be further processed with native AWS services or third-party tools and delivered to Snowflake via Snowpipe. When data is being moved from SAP to your S3 bucket, the AppFlow SAP OData Connector supports AWS PrivateLink – this ensures the traffic is confined to the AWS network and is not exposed to the risks of the public internet.

SAP Data Services or BODS for SAP Data Integration on Snowflake

SAP Data Services, also called SAP BODS (SAP BusinessObjects Data Services), is an enterprise-grade ETL tool that combines capabilities for data integration, transformation, data quality, data profiling, and text data processing. It extracts data from a range of sources including databases, flat files, XML, SAP systems, and web services, and loads it into a data warehouse, database, or SAP application, in this case from SAP to Snowflake.

SAP Data Services can map and transform data using the Designer, one of its components. It prepares data using various methods such as mapping, aggregation, filtering, pivoting, and cleansing. It standardizes and cleanses data using data quality functions such as address validation, data enrichment, and data profiling. A big advantage is that SAP Data Services provides a graphical interface for modeling data integration jobs. The interface has a drag-and-drop UI for designing data flows, data mappings, and data transformations.

SAP Data Services provides data lineage and supports real-time data integration from SAP to Snowflake using Change Data Capture (CDC) and message queueing. SAP Data Services integrates with SAP Landscape Transformation Replication Server (SAP SLT) to add delta capturing capability (through Triggers) for SAP and non-SAP source tables. This enables deltas to be captured and delivered to the target.

How BryteFlow SAP Data Lake Builder makes SAP Snowflake Integration Easy

Delivers data directly for SAP Snowflake integration (without staging)

BryteFlow SAP Data Lake Builder delivers data directly from SAP to Snowflake without landing it on an external staging platform, unlike the tools we mentioned earlier. It uses Snowflake’s internal stage and follows Snowflake best practices. The whole process is much faster without intermediate stops.

SAP Snowflake integration with complete automation

The tools discussed earlier deliver data to a staging area on Cloud storage platforms. After that you need Snowpipe to deliver data from the respective staging areas to Snowflake, and this needs a good amount of scripting. Snowpipe is the continuous data ingestion service offered by Snowflake; it loads files once they are available in the stage, whether on Amazon S3, Azure Blob or Google Cloud Storage.
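To give a sense of the scripting involved, here is a small sketch that renders the kind of Snowflake statement a Snowpipe setup typically needs: a pipe that copies newly staged files into a table. The pipe, table, and stage names are hypothetical, and the helper function is our own illustration. A real setup also needs the external stage, cloud event notifications, and permissions to be configured separately.

```python
def snowpipe_ddl(pipe: str, table: str, stage: str, file_type: str = "CSV") -> str:
    """Render a CREATE PIPE statement that copies staged files into a table.

    All object names passed in are hypothetical. AUTO_INGEST applies to
    external stages (for example on Amazon S3) with event notifications
    already set up.
    """
    return (
        f"CREATE PIPE {pipe} AUTO_INGEST = TRUE AS\n"
        f"  COPY INTO {table}\n"
        f"  FROM @{stage}\n"
        f"  FILE_FORMAT = (TYPE = '{file_type}');"
    )


if __name__ == "__main__":
    print(snowpipe_ddl("sap_raw.mara_pipe", "sap_raw.mara", "sap_raw.s3_stage"))
```

One statement like this is needed per target table, which is where the scripting effort grows as the number of SAP tables increases.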

BryteFlow SAP Data Lake Builder, on the other hand, is no-code and provides complete automation. After the tool is configured, you just need to define the SAP source and Snowflake destination with a couple of clicks, select the tables you need to take across, set a schedule for replication and start receiving data almost immediately. Schema, tables, data mapping and conversion are all automated. It doesn’t get any easier than this!

SAP to Snowflake in one go, makes for a robust data pipeline

Processing data with BryteFlow SAP Data Lake Builder is a one-phase process and does not require 2-3 distinct operations as is the case with most SAP Snowflake integration tools. Data migration involving multiple hops increases latency and the risk of the pipeline breaking or data getting stuck.

Managing security for your SAP Snowflake integration is easier

Managing security is easier with BryteFlow SAP Data Lake Builder. You do not have to define and manage security on Azure Blob, Amazon S3 or Google Cloud Storage since staging is not needed. BryteFlow is configured and installed within your VPC, so the data is subject to your security controls and never leaves your environment.

BryteFlow SAP Data Lake Builder as a Snowflake SAP Connector

BryteFlow SAP Data Lake Builder provides the easiest and fastest way to get data from SAP to Snowflake. It is completely automated (no-code) and can replicate or migrate SAP data to Snowflake on AWS, Azure and GCP with business logic intact. The tool replicates data to Cloud platforms like Snowflake, Redshift, S3, Azure Synapse, ADLS Gen2, Azure SQL Database, Google BigQuery, Postgres, Kafka, Databricks, SingleStore, or SQL Server. You save enormously on time and effort since you don’t need to create tables again on the destination. You get real-time, ready-for-use data with extremely high throughput (1 million rows in 30 seconds). BryteFlow SAP Data Lake Builder uses automated Change Data Capture (CDC) to deliver incremental data and deltas, and syncs data with the source continually or as per your specified schedule.
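For readers new to Change Data Capture, the idea of merging deltas into a target can be sketched in a few lines. The example below applies insert, update, and delete records to a target keyed by primary key. It is a conceptual illustration only, with an assumed change-record layout, and is not how BryteFlow or Snowflake implement the merge internally.

```python
from typing import Dict, Iterable


def apply_changes(target: Dict[str, dict], changes: Iterable[dict]) -> Dict[str, dict]:
    """Apply change records to a copy of the target, keyed by primary key.

    Each change is assumed to look like {"op": "I" | "U" | "D", "key": ..., "row": ...}.
    """
    merged = dict(target)
    for change in changes:
        key, op = change["key"], change["op"]
        if op == "D":
            merged.pop(key, None)  # delete if present
        elif op in ("I", "U"):
            merged[key] = change["row"]  # insert or overwrite (upsert)
        else:
            raise ValueError(f"unknown operation: {op}")
    return merged


if __name__ == "__main__":
    target = {"1000": {"city": "Berlin"}, "2000": {"city": "Paris"}}
    changes = [
        {"op": "U", "key": "1000", "row": {"city": "Munich"}},
        {"op": "D", "key": "2000"},
        {"op": "I", "key": "3000", "row": {"city": "Madrid"}},
    ]
    print(apply_changes(target, changes))
```

Only the changed rows travel across the pipeline, which is why CDC is much lighter on the source system than repeated full extracts.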

BryteFlow SAP Data Lake Builder extracts data from SAP applications like SAP ECC, S/4HANA, SAP BW, and SAP HANA using the Operational Data Provisioning (ODP) framework and SAP OData (Open Data Protocol) Services, and replicates data with logic intact to Snowflake. BryteFlow SAP Data Lake Builder can also integrate with SAP SLT for real-time replication. Data extraction and analysis of the SAP source application is completely automated with the BryteFlow SAP Data Lake Builder. Your data from SAP is ready-to-use on Snowflake for various use cases including Analytics and Machine Learning.

Highlights of the Snowflake SAP Connector Tool

- BryteFlow SAP Data Lake Builder has multiple ways to connect to SAP at the application level. The tool can connect to ODP Extractors, SAP SLT (if licensed) or CDS Views to extract data and take it to Snowflake.

- Delivers data from SAP to Snowflake that is real-time and ready-to-use, with business logic intact.

- Has flexible connections for SAP including Database logs, ECC, HANA, S/4HANA and SAP Data Services.

- Automates data extraction, using SAP OData Services and the ODP framework to extract both initial data and deltas, and uses Change Data Capture to sync data in real time.

- Captures inserts, updates, and deletes as they happen in the SAP application and merges them automatically with data in Snowflake.

- Integrates seamlessly with BryteFlow Blend, our data transformation tool, to deliver data transformation on Snowflake.

- High throughput – replicates a million rows of data in 30 seconds.

- No coding for any process including data extraction, masking, SCD Type 2 or data merging.

- Encrypts data at rest and in transit, uses SSL to connect to data warehouses and databases, and can mask sensitive data for security purposes.

- Provides automated configuration for smart partitioning, file types and compression during data ingestion.

- Automates DDLs, data mapping, data conversion and SCD Type 2 history.

- Provides end-to-end monitoring of workflows with BryteFlow ControlRoom.

- Has an automatic network catch-up mode and resumes when normal conditions are restored.

Schedule a FREE POC of the BryteFlow SAP Data Lake Builder or Contact us for a Demo

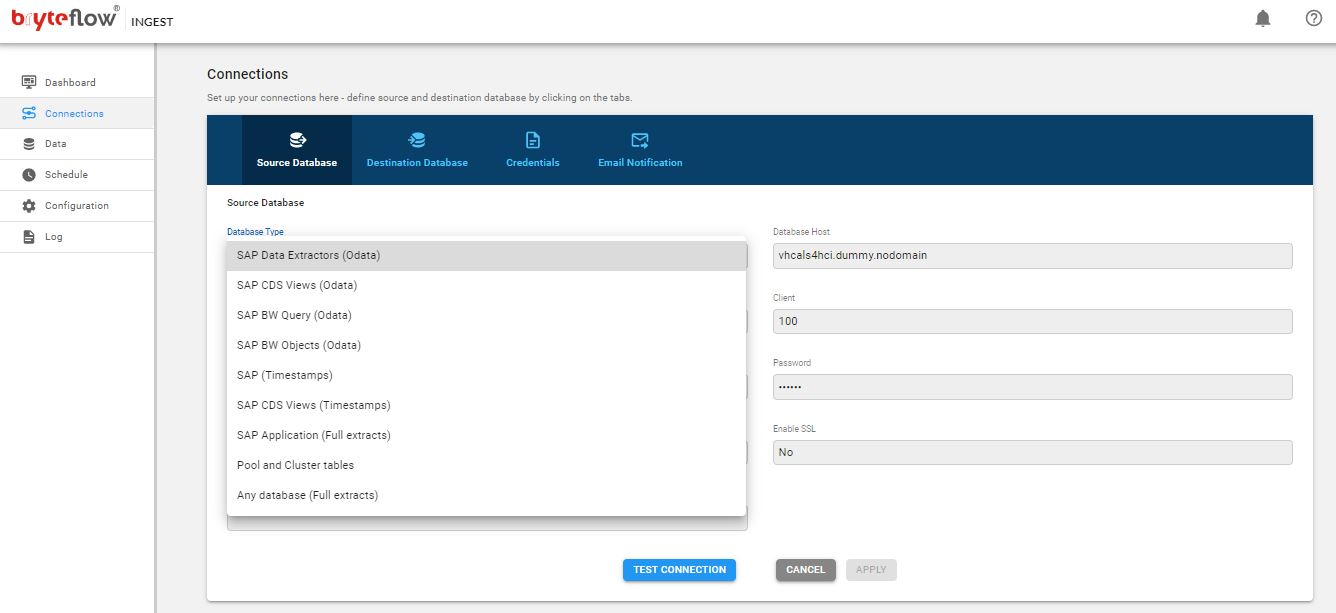

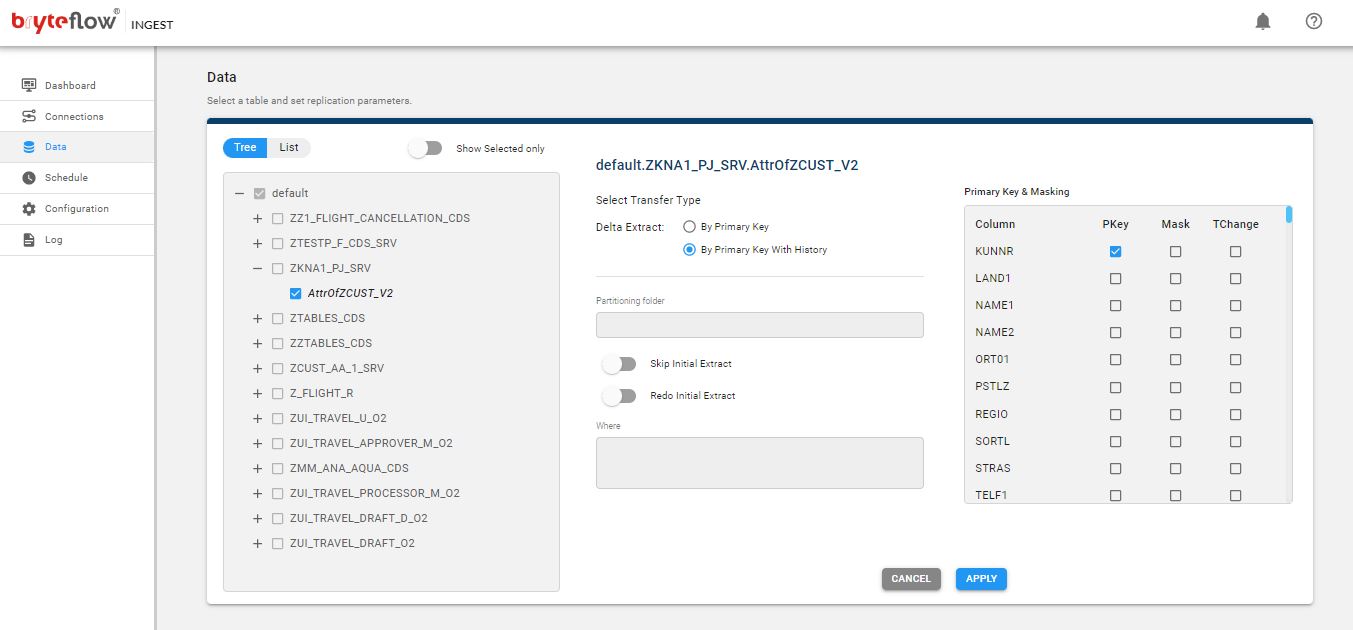

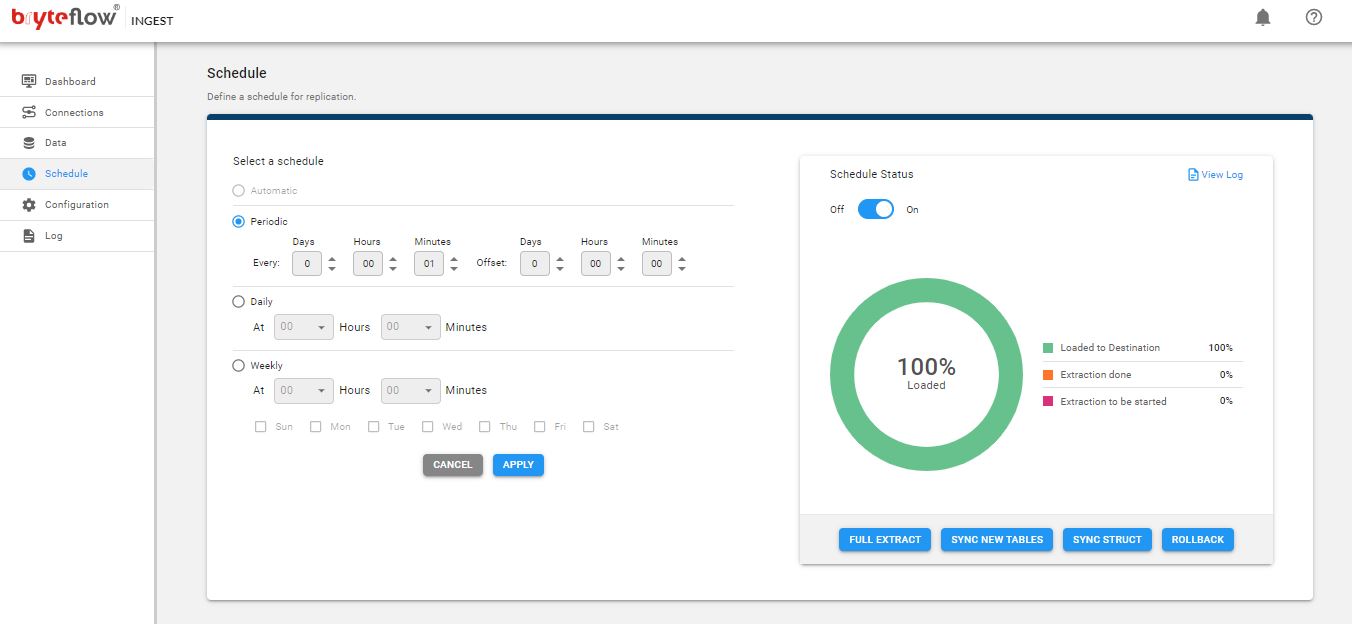

BryteFlow SAP Builder screenshots illustrating the SAP to Snowflake Journey

The first step is to select an SAP connector for the SAP application. The screenshot shows all available connectors including SAP Data Extractors, SAP CDS Views, SAP BW Query, SAP BW Objects, SAP Application (Full Extracts), Pool and Cluster Tables, Any Database (Full Extracts), SAP (Timestamps). You can put in the configuration parameters to connect to the SAP Application host.

The next step is to configure destination connectors for Snowflake. You can load data directly to Snowflake or select a staging area to do it indirectly. Also enter the login credentials and the Snowflake database details.

The screenshot shows all available SAP OData Services from the SAP Application Object Catalog (IGWFND). You can select the tables you need to take to Snowflake from the SAP application and whether the delta extract will be with ‘Primary Key’ or ‘Primary Key with History’. Selecting the latter will enable you to maintain SCD Type 2 history.
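To show what maintaining SCD Type 2 history means in practice, here is a minimal conceptual sketch. When a record's attributes change, the current version is closed with an end date and a new version is appended, so every past state remains queryable. The record layout, field names, and function name are assumptions for illustration and do not represent BryteFlow's internal implementation.

```python
from datetime import date
from typing import List


def apply_scd2(history: List[dict], key: str, attributes: dict, as_of: date) -> List[dict]:
    """Record a new version for key if its attributes changed.

    The open (current) version has valid_to set to None. The history
    list is updated in place and also returned.
    """
    current = next(
        (r for r in history if r["key"] == key and r["valid_to"] is None), None
    )
    if current is not None:
        if current["attributes"] == attributes:
            return history  # nothing changed, so no new version
        current["valid_to"] = as_of  # close the previous version
    history.append(
        {"key": key, "attributes": attributes, "valid_from": as_of, "valid_to": None}
    )
    return history


if __name__ == "__main__":
    history: List[dict] = []
    apply_scd2(history, "1000", {"city": "Berlin"}, date(2023, 1, 1))
    apply_scd2(history, "1000", {"city": "Munich"}, date(2023, 3, 1))
    for version in history:
        print(version)
```

With plain ‘Primary Key’ delta handling, the Munich row would simply overwrite the Berlin row; with history, both versions are kept along with their validity dates.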

This screenshot shows schedule settings for the SAP Snowflake pipeline. With the ‘Periodic’ schedule settings, you can select at what time the pipeline should run and whether it should run daily or weekly. Select ‘Automatic’ if you need continuous extraction. This will run whenever there is a change at the SAP source, and it will merge the deltas with data on Snowflake.
