Is SAP extraction in your SAP Business Warehouse (SAP BW) proving to be a challenge? Are you finding it difficult to extract and access SAP data from SAP systems and integrate it with data from non-SAP sources? Here we present 2 methods to make SAP extraction and integration easier by extracting SAP data using ODP and SAP OData Services in SAP BW.

Quick Links:

SAP BW and its SAP Extraction Objective

BW Storage: ADSOs, DSOs, InfoCubes and InfoProviders

ODP (Operational Data Provisioning)

ODP Extraction in SAP BW on HANA with BryteFlow

Creating SAP OData Service on SAP BW Query for SAP Extraction

Creating SAP OData Service on SAP BW ADSO for SAP Extraction

SAP Extraction and Integration Tool: BryteFlow SAP Data Lake Builder

SAP BW and its SAP Extraction Objective

SAP Business Warehouse, better known as SAP BW, is SAP’s enterprise data warehouse. It has many functions, including data modelling, administration, and staging. It integrates, aggregates, and transforms multi-source data, carries out data cleansing, and stores data. SAP BW stores data from SAP and non-SAP sources, and users can access it through built-in reporting, BI and analytics tools, and even third-party software.

SAP BW was built to deliver online analytical processing (OLAP) on top of SAP’s transactional system R/3, which was meant for online transaction processing (OLTP). SAP BW’s original objective was to extract SAP data from applications and report on it, but it now encompasses many additional functionalities.

Please Note: SAP Note 3255746 notifies SAP customers that using the RFC modules of the ODP Data Replication API to extract data from SAP ABAP sources (on-premise and cloud) is not permitted for customer and third-party applications.

BW Storage: ADSOs, DSOs, InfoCubes and InfoProviders

A short explanation of BW storage and the objects within it:

Transactional Data in SAP BW: Transactional data changes often and refers to the daily activities that are part of business. This includes data generated by transactions by or with Vendors, Customers, and Materials. SAP BW stores transactional data in multiple objects like ADSOs (Advanced Data Store Objects), DSOs (Data Store Objects) and InfoCubes.

Master Data in SAP BW: Master Data is the core data that does not usually change and is stored in InfoObjects.

Presentation of Data: The data from these objects is read through BEx (Business Explorer) Queries, which apply all the relevant business logic to the data.

About SAP BEx (SAP Business Explorer)

The component in SAP BW that provides reporting and analysis tools is BEx, or Business Explorer. BEx queries are defined on reporting objects called InfoProviders. InfoProviders are defined by InfoObjects and hold business evaluation data like sales revenue and operating margins, that is, the metrics relevant to the analysis being done. InfoObjects are classified as key figures, characteristics (dimensions), time characteristics, units, or technical characteristics (for example, trouble ticket numbers).

Data Targets in SAP BW and the difference between DSOs and InfoProviders

A Data Warehouse stores data at a highly comprehensive level. Data Targets in SAP BW are the objects that physically store data. DSOs (Data Store Objects) are data targets but need not be InfoProviders. A DSO stores data at a granular level, tracks data changes and stores master data. InfoProviders, in contrast, are objects that can be used to create queries for reporting purposes.

The InfoCube is the central data target which stores aggregated data that can be used to generate queries. In SAP BW, it is advisable to have a DSO as the staging layer to store transactional / multi-source data before delivering it to InfoCubes or other data targets.

Unlike InfoCubes, DSOs are transparent tables and can overwrite stored data for a specified key. The table’s key identifies each record in the table. If configured to do so, DSOs can also cumulate data for a specified key, just like InfoCubes.
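The difference between overwriting and cumulating data for a key can be illustrated with a minimal Python sketch. This is not how SAP BW implements it internally; the record layout (a key plus an amount) and the function names are assumptions made purely for illustration.

```python
from typing import Dict, Iterable, Tuple

# (key, amount) pairs; both fields are illustrative, not SAP field names.
Record = Tuple[str, float]


def load_overwrite(store: Dict[str, float], records: Iterable[Record]) -> Dict[str, float]:
    """Overwrite behaviour: a later record replaces the stored value for its key."""
    for key, amount in records:
        store[key] = amount
    return store


def load_cumulate(store: Dict[str, float], records: Iterable[Record]) -> Dict[str, float]:
    """Cumulate behaviour: a later record is added to the stored value for its key."""
    for key, amount in records:
        store[key] = store.get(key, 0.0) + amount
    return store


records = [("ORDER-1", 100.0), ("ORDER-2", 50.0), ("ORDER-1", 120.0)]
overwritten = load_overwrite({}, records)
cumulated = load_cumulate({}, records)
```

With the sample records, the overwrite load keeps only the latest amount for `ORDER-1`, while the cumulate load sums both amounts for that key.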

About ADSOs

For SAP BW on HANA, the ADSO (Advanced Data Store Object) is used, which has new functions and table structures. ADSOs are more flexible than the InfoProviders used on BW systems not based on HANA: their functions can be modified without loss of stored data, and the contents of a table can also be modified if the type is changed.

ODP (Operational Data Provisioning)

About Operational Data Provisioning (ODP) Framework

The Operational Data Provisioning (ODP) framework can be used to connect SAP systems, for example SAP ECC as the source system, to the SAP BW/4HANA system. ODP provides the technical infrastructure to enable SAP extraction and replication. Communication and information exchange between SAP systems is enabled by RFC (Remote Function Call). DataSources are available in SAP BW/4HANA through the ODP context for DataSources (SAP extractors). ODP is recommended for SAP extraction and replication from SAP (ABAP) applications.

Operational Data Provisioning enables loading of data in increments from ABAP CDS views, ADSOs, and SAP extractors. In the SAP BW/4HANA environment, ODP is the main mechanism for SAP extraction and replication from SAP (ABAP) systems to the SAP BW/4HANA data warehouse.
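To make the idea of incremental loading concrete, here is a minimal Python sketch of applying a batch of delta records to an initial load. It is a conceptual illustration only, not the ODP implementation: the `id` key, the `change_mode` field and its `C`/`U`/`D` values are hypothetical names chosen for this example.

```python
from typing import Dict, List

Row = Dict[str, object]


def apply_deltas(snapshot: Dict[str, Row], deltas: List[Row], key: str = "id") -> Dict[str, Row]:
    """Return a new snapshot with created, updated and deleted rows applied in order."""
    merged = dict(snapshot)
    for delta in deltas:
        # Drop the (hypothetical) change indicator before storing the row.
        row = {k: v for k, v in delta.items() if k != "change_mode"}
        if delta["change_mode"] == "D":
            merged.pop(delta[key], None)   # delete: remove the row if present
        else:
            merged[delta[key]] = row       # create or update: upsert by key
    return merged


initial = {
    "1": {"id": "1", "amount": 100},
    "2": {"id": "2", "amount": 50},
}
deltas = [
    {"id": "2", "amount": 75, "change_mode": "U"},
    {"id": "3", "amount": 20, "change_mode": "C"},
    {"id": "1", "change_mode": "D"},
]
current = apply_deltas(initial, deltas)
```

Only the changed rows travel in each increment, so the target stays current without reloading the full data set.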

About SAP Extractors

An SAP Extractor is a program you can use to prepare and capture data in SAP applications via an extract structure. It can perform a full load or different types of delta load to move data from SAP ERP and CRM applications to SAP BW. An SAP extractor can be based on either a standard DataSource or a custom-built DataSource.

SAP Extractors used as an SAP OData Service via ODP

Any SAP extractor that SAP has whitelisted for the ODP framework can be enabled to extract data from SAP and expose that data as an OData Service for consumption.

What are SAP OData and SAP OData Service?

SAP OData is a standard Web protocol that builds on Web technologies like HTTP to query and update data present in SAP systems using the ABAP language. This enables access to information from external platforms, devices, and applications.

When you need to expose SAP data from Tables or Queries to an external environment like Fiori or HANA, you need an API to push out the data. We can achieve this by creating a service link using SAP OData that can be accessed online to perform CRUD (Create, Read, Update, and Delete) operations. This is the SAP OData Service. We use the SEGW (SAP Gateway Service Builder) transaction code to create an SAP OData Service within the SAP environment.
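As a rough illustration of how CRUD operations map onto HTTP in OData, the sketch below pairs each operation with the HTTP method conventionally used for it and builds the resource path. The service name `ZDEMO_SRV` and entity set `Orders` are hypothetical, and whether a particular service permits writes depends on how it was built.

```python
from typing import Optional, Tuple

# Conventional OData mapping of CRUD operations to HTTP methods.
CRUD_TO_HTTP = {
    "create": "POST",
    "read": "GET",
    "update": "PUT",
    "delete": "DELETE",
}


def describe_call(operation: str, service_root: str, entity_set: str,
                  key: Optional[str] = None) -> Tuple[str, str]:
    """Return the (HTTP method, resource path) pair for a CRUD operation."""
    method = CRUD_TO_HTTP[operation.lower()]
    path = f"{service_root.rstrip('/')}/{entity_set}"
    if key is not None:
        # A single entity is addressed by its key in parentheses.
        path += f"('{key}')"
    return method, path


# Hypothetical service root and entity set, for illustration only.
read_all = describe_call("read", "/sap/opu/odata/sap/ZDEMO_SRV/", "Orders")
delete_one = describe_call("delete", "/sap/opu/odata/sap/ZDEMO_SRV", "Orders", key="42")
```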

ODP Extraction in SAP BW on HANA with BryteFlow

BryteFlow SAP Data Lake Builder enables you to extract SAP data from SAP applications to SAP BW using the ODP framework and load the data to the destination in completely automated mode (no programming needed). From SAP BW 7.5 onwards, you can create an SAP OData Service directly on the BW objects, which BryteFlow can then use to extract data out of SAP BW.

Creating an SAP OData Service directly on the BW objects (ADSOs) and on SAP BW Queries for SAP extraction using ODP

SAP OData Services are easily available for SAP BW queries. You just need to select a checkbox and register the service first. BryteFlow uses the SAP OData Services to extract the data out of BW Queries and load it to the destination via ODP. In this blog we show you how to create the SAP OData Service on ADSOs and on BW Queries. The methods explained here are one approach; there may be other ways to create SAP OData Services on ADSOs and BW Queries.

Creating SAP OData Service on SAP BW Query for SAP Extraction

Follow these steps to generate the SAP OData Service on SAP BW Queries:

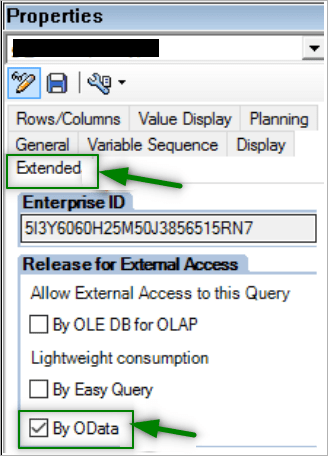

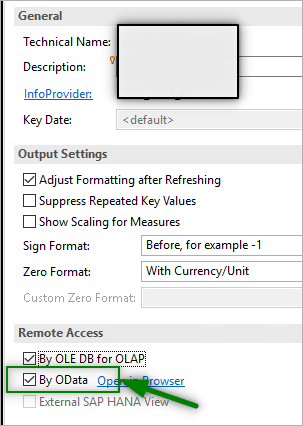

Step 1: In the SAP BW Query, enable the flag for OData, as shown for BW 7.4 and BW 7.5.

Step 2: Once the flag is checked and the query is saved, the system generates the backend objects along with the SAP OData Service. This service must be registered; without registration it will not work.

The Service Name will normally be the Query technical name with _SRV appended.

Example: If the Query Name is ZCP_FI_Q001 then the Service name would be ZCP_FI_Q001_SRV.

Follow the steps below to register the service:

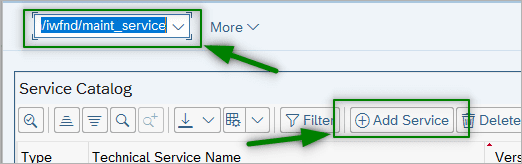

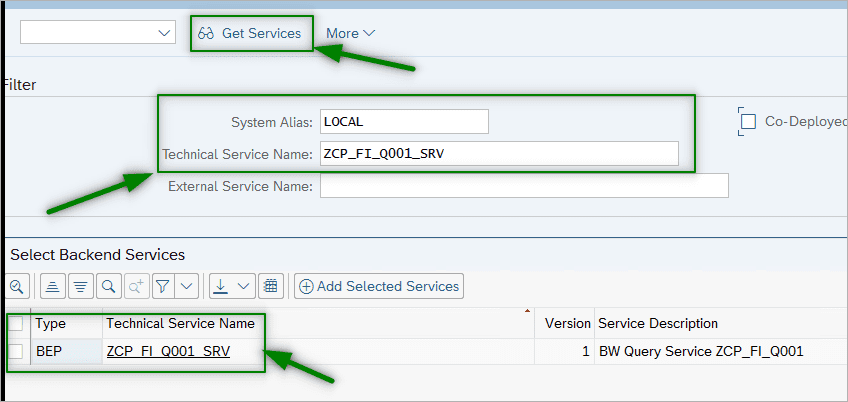

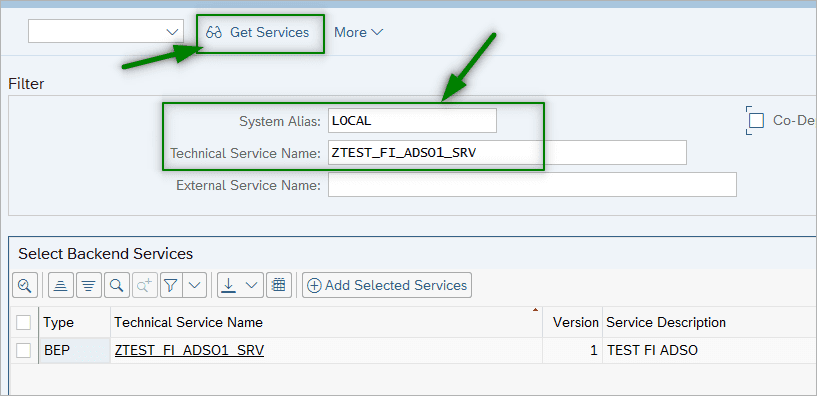

- Go to transaction /n/IWFND/MAINT_SERVICE and click on Add Service.

- In the next screen, provide the System Alias and Service Name, and click on Get Services to fetch the service details.

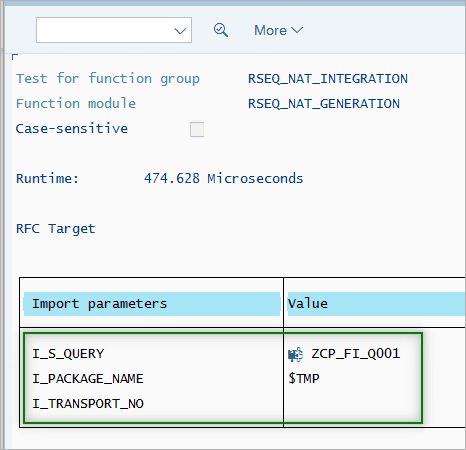

Note: If the SAP OData Service is not shown, perform the following steps:

- Go to transaction SE37 and execute the Function Module “RSEQ_NAT_GENERATION”.

- Provide the Query Name, Package Name and Transport number (if you are creating it as a local object, provide the package as $TMP; the Transport number is not required).

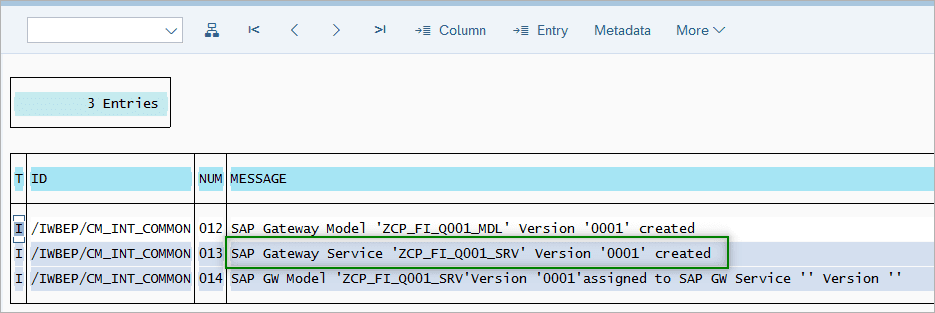

- Once the values are updated and the Function module is executed, the result will be shown below.

Now the SAP OData Service is successfully created. Execute Step 2 again and now you should be able to see the OData Service.

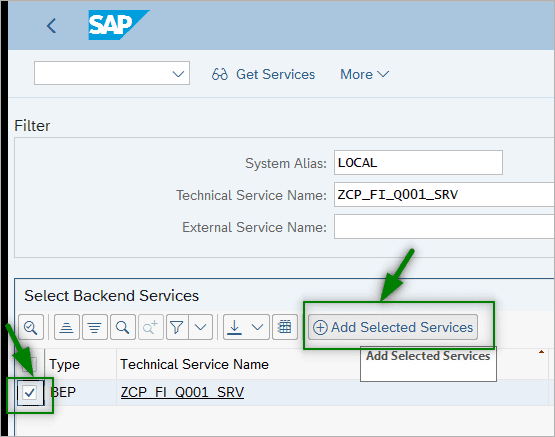

- Select the OData Service and click on Add Selected Service to register the service. A popup message will appear upon successful registration of the service.

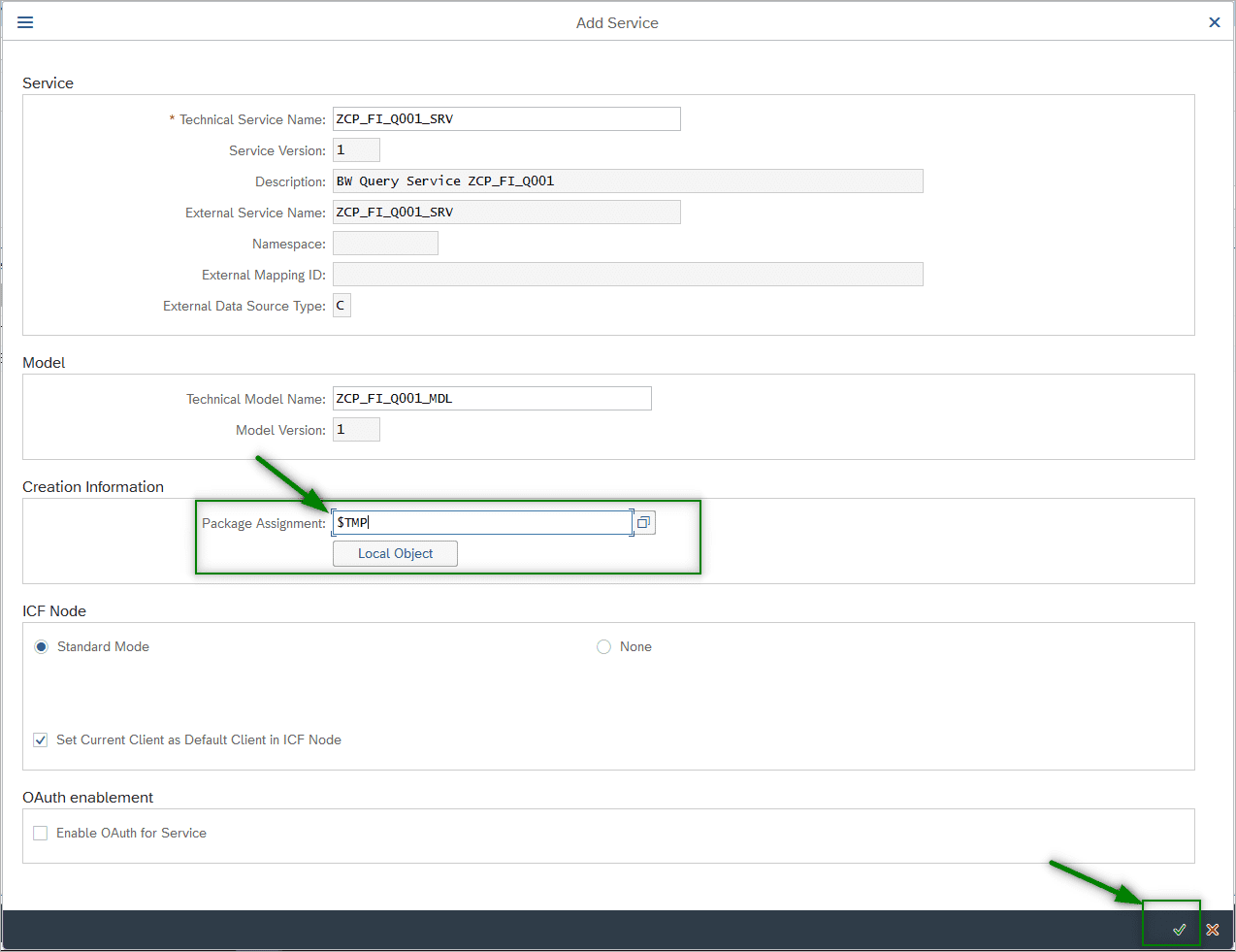

Once Add Service is clicked, we get a popup to provide the Package name. If it is a local object, then click on Local Object or enter $TMP. Then click on OK.

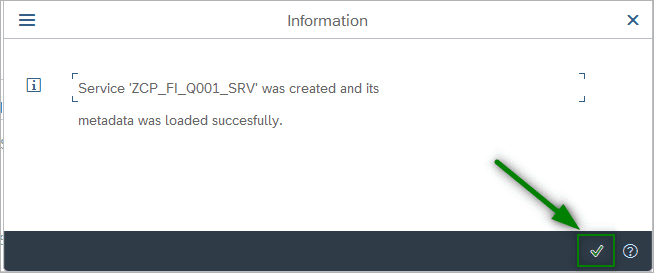

Once the SAP OData Service is registered successfully, we get the below popup. Click OK and the OData Service is ready to use.

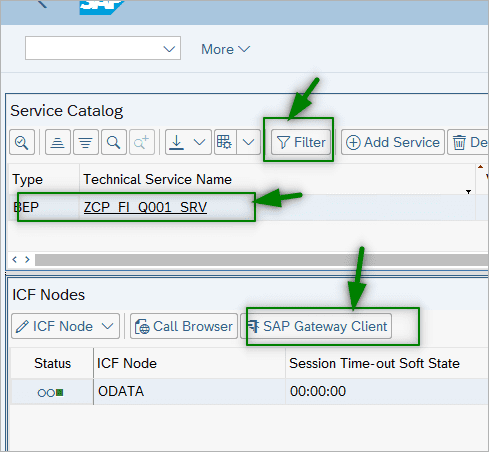

- Navigate to the main screen, click on Filter and provide the OData Service name.

- Upon successful registration, the SAP OData Service will show up here. Select the OData Service and hit the OK button. Then click the SAP Gateway Client button to test the service.

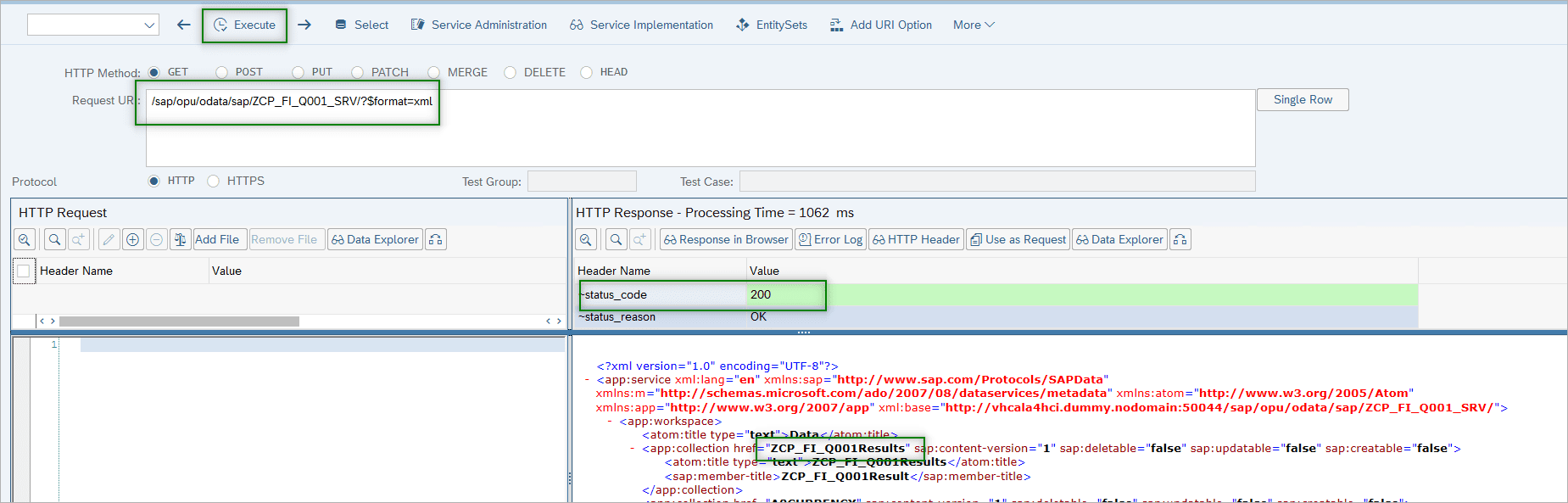

- In the new window, click on the Execute button to test the SAP OData Service and note the HTTP response.

- A working OData Service will return status code 200.

Once the SAP OData Service is ready and successfully tested, it can be consumed by BryteFlow SAP Data Lake Builder to extract data from SAP and load it into the target systems.
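The same check can also be made from outside the SAP GUI. The sketch below, using only the Python standard library, requests the service's `$metadata` document and reports whether the response status is 200. The host `https://bw.example.com:44300` and the credentials are placeholders, the use of Basic authentication is an assumption, and `/sap/opu/odata/sap/` is the usual SAP Gateway path for registered services, so adjust these to your own landscape.

```python
import base64
import urllib.error
import urllib.request


def build_metadata_request(host: str, service: str, user: str, password: str) -> urllib.request.Request:
    """Build a GET request for the service's $metadata document using Basic auth."""
    url = f"{host.rstrip('/')}/sap/opu/odata/sap/{service}/$metadata"
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


def service_responds_ok(request: urllib.request.Request, timeout: float = 30.0) -> bool:
    """Return True if the service answers with HTTP 200, False on an HTTP error status.

    Connection-level failures (urllib.error.URLError) are left to propagate.
    """
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status == 200
    except urllib.error.HTTPError:
        return False


# Placeholder host and credentials; nothing is sent until service_responds_ok() is called.
request = build_metadata_request(
    "https://bw.example.com:44300", "ZCP_FI_Q001_SRV", "DEMO_USER", "secret"
)
```

Calling `service_responds_ok(request)` against a reachable system performs the actual HTTP call.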

Note: The Entity that returns the query data output is the one named after the Query with Results appended.

Example: If the Query Name is ZCP_FI_Q001 then the Entity name is ZCP_FI_Q001Results.
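The naming conventions described in this section can be captured in a short Python sketch that derives the service name, the results entity name and a request URL from the Query technical name. The host is a placeholder, and the `/sap/opu/odata/sap/` prefix and the `$format` and `$top` query options are assumptions based on common SAP Gateway OData usage.

```python
from urllib.parse import urlencode


def service_name(query_name: str) -> str:
    """Service name: the Query technical name with _SRV appended."""
    return f"{query_name}_SRV"


def results_entity(query_name: str) -> str:
    """Entity that returns the query output: the Query name with Results appended."""
    return f"{query_name}Results"


def results_url(host: str, query_name: str, top: int = 10) -> str:
    """Build a URL that reads the first `top` rows of the query output as JSON."""
    # safe="$" keeps the OData system query options readable ($format, $top).
    params = urlencode({"$format": "json", "$top": top}, safe="$")
    return (
        f"{host.rstrip('/')}/sap/opu/odata/sap/"
        f"{service_name(query_name)}/{results_entity(query_name)}?{params}"
    )


# Placeholder host; the query name is the example used in this article.
url = results_url("https://bw.example.com:44300", "ZCP_FI_Q001")
```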

Creating SAP OData Service on SAP BW ADSO for SAP Extraction

Follow these steps to generate the SAP OData Service on an ADSO (Advanced Data Store Object):

In BW versions before BW 7.5, we had to create an Export DataSource to export data out of a BW ADSO, DSO or InfoCube. With BW/4HANA, we can create an SAP OData Service directly on BW Objects.

Step 1: Create a Project in SAP transaction SEGW

- Go to transaction SEGW and create a project

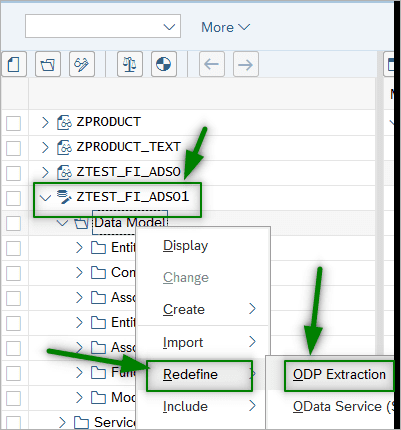

- Right click on the Data Model, select Redefine and then choose ODP extraction

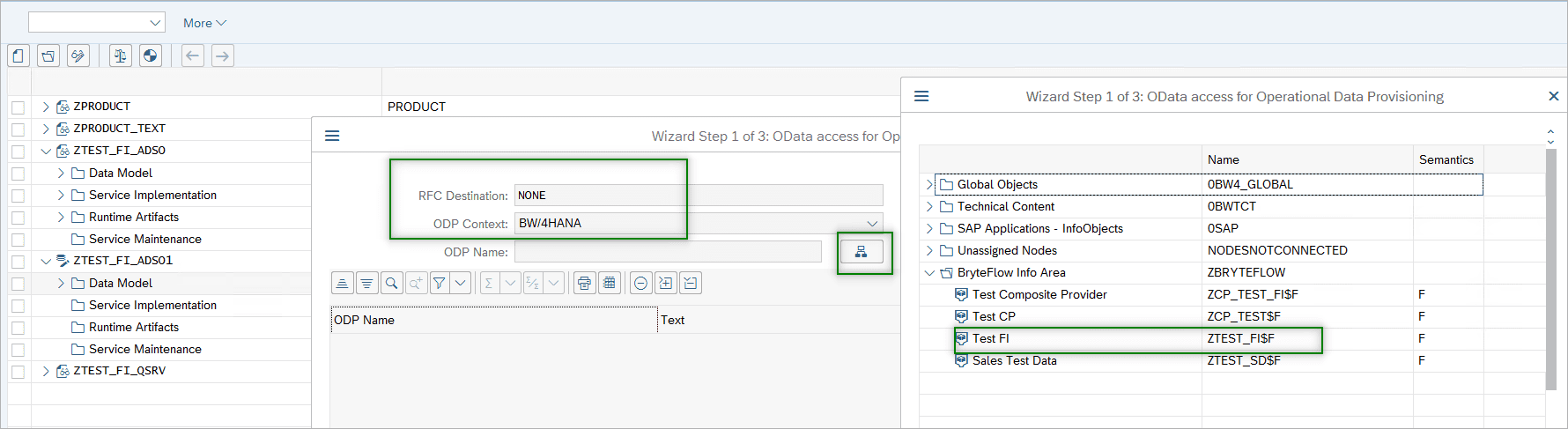

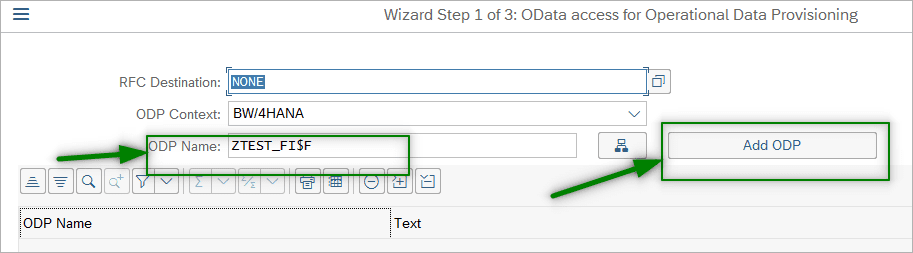

- Provide the RFC Destination, choose the BW/4HANA Context and click on the button. A popup appears; select the InfoProvider from the list of objects. Once selected, click on Add ODP.

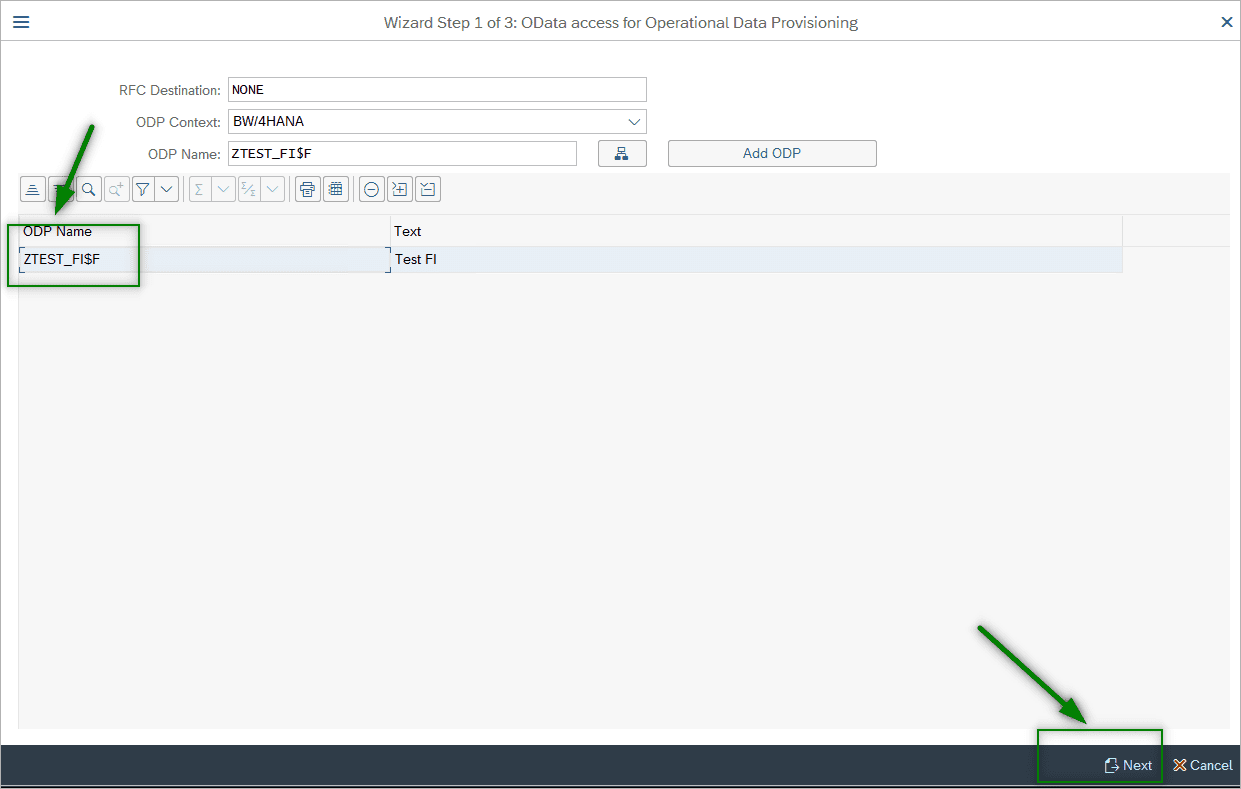

- Click on Add ODP, select the ODP name and click on the Next button.

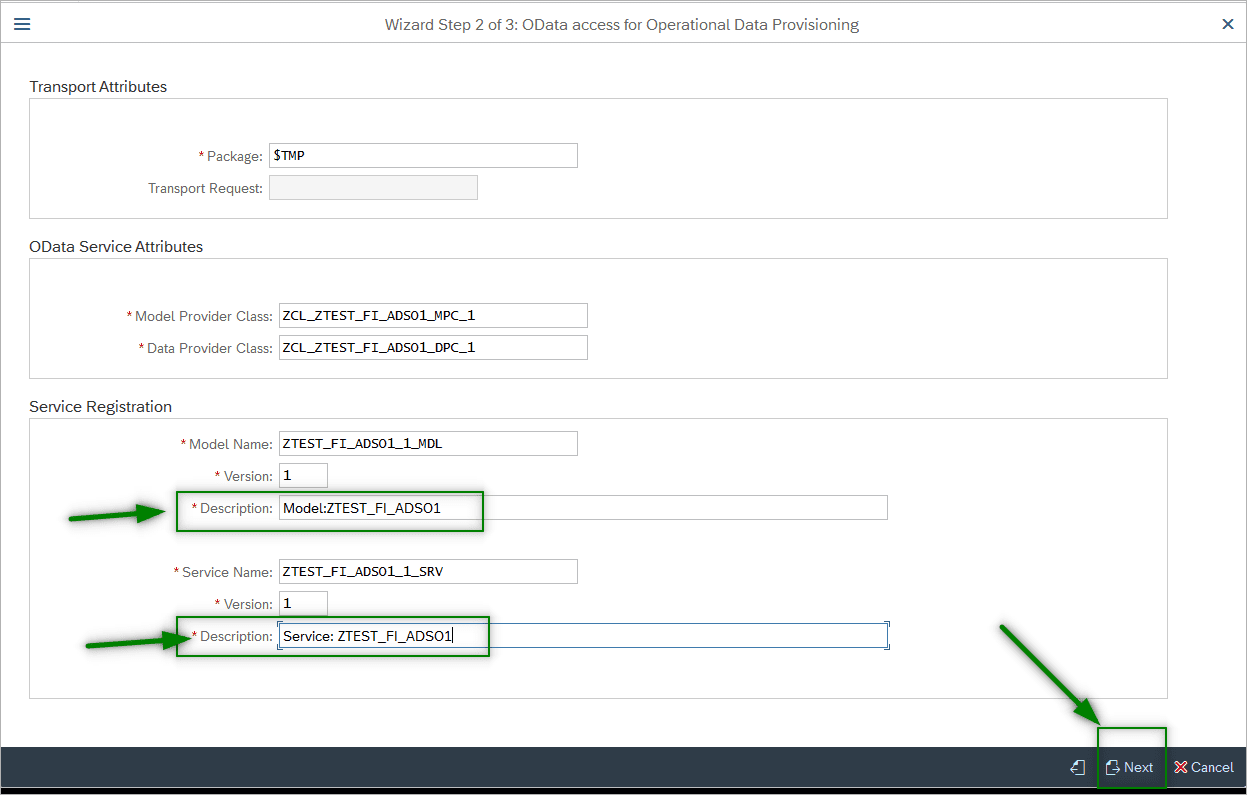

- Provide the necessary details and complete the steps to create a project.

- Click Generate to generate the SAP OData Service.

- This will generate the SAP OData Service. Make a note of the service name, entity types, entity set names, etc.

Once the OData Service is generated, it must be registered. Without registration the service will not work.

Follow the steps below to register the service:

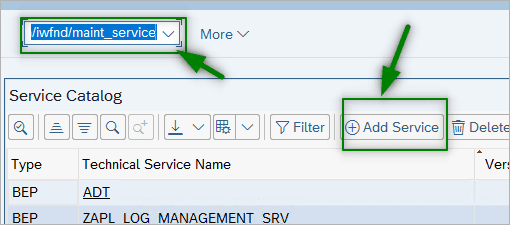

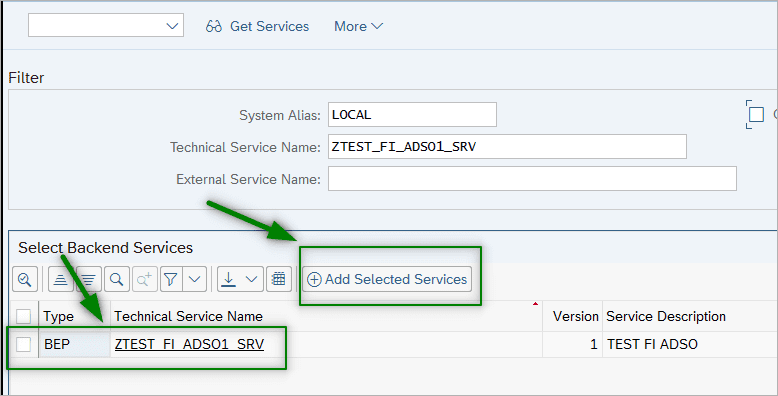

- Go to transaction /n/IWFND/MAINT_SERVICE and click on Add Service.

- In the next screen, provide the System Alias and Service Name to fetch the service details.

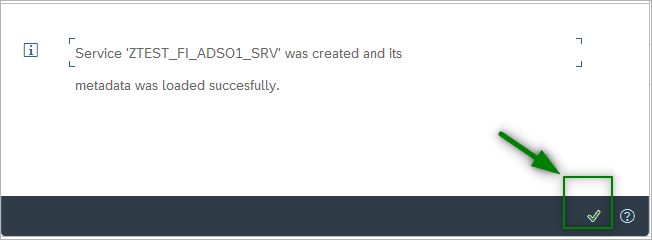

- Select the service and click on Add Selected Service to register the SAP OData Service. A popup message will appear upon successful registration of the service.

Click on OK to continue.

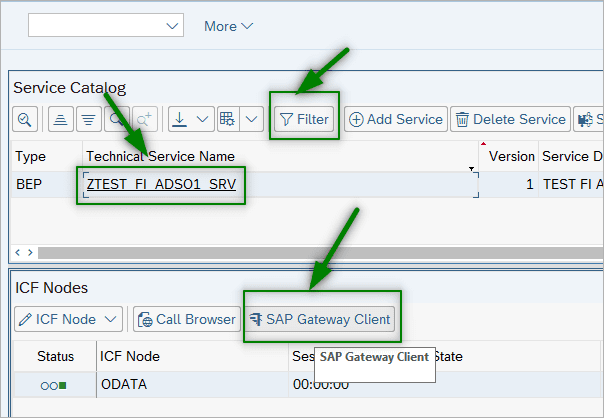

- Navigate to the main screen, click on Filter and provide the service name. Upon successful registration, the SAP OData Service will show up here. Select the OData Service and hit the OK button. Then click the SAP Gateway Client button to test the service.

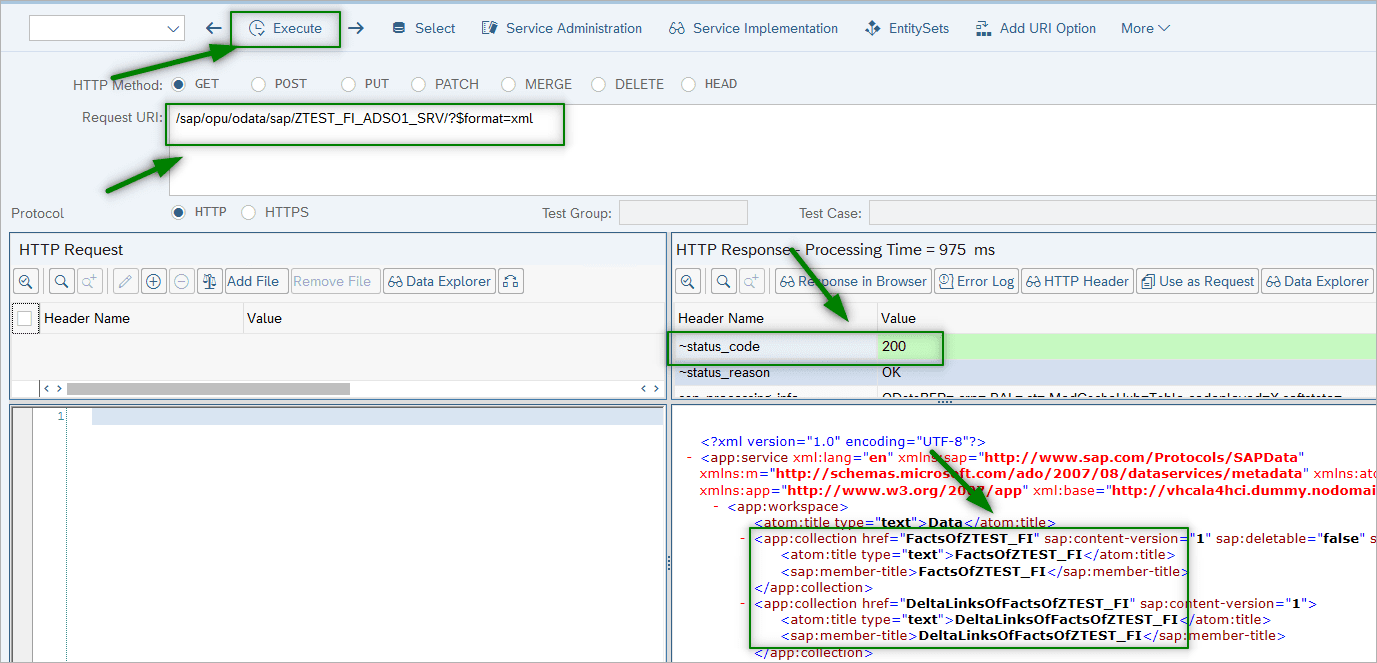

In the new window, click on the Execute button to test the SAP OData Service and note the HTTP response. A working OData Service will return status code 200.

Once the SAP OData Service is ready and successfully tested, it can be consumed by BryteFlow SAP Data Lake Builder to extract data from SAP and load it into target systems.
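For readers who want to see what consuming such a service looks like in code, here is a minimal Python sketch that pulls the rows out of a JSON response. It assumes the service returns the OData V2 JSON envelope, where a collection sits under `d.results` and each row carries a `__metadata` entry. The field names in the sample payload are invented for illustration, and this is not a description of how BryteFlow processes responses.

```python
import json
from typing import Dict, List


def extract_rows(payload_text: str) -> List[Dict[str, object]]:
    """Return the data rows from an OData V2 JSON response, without __metadata."""
    payload = json.loads(payload_text)
    body = payload.get("d", {})
    # Collections are usually wrapped as {"d": {"results": [...]}};
    # a bare list under "d" is also tolerated.
    rows = body.get("results", []) if isinstance(body, dict) else body
    return [{k: v for k, v in row.items() if k != "__metadata"} for row in rows]


# Illustrative payload; the field names are invented for this example.
sample = json.dumps({
    "d": {
        "results": [
            {"__metadata": {"type": "DEMO.Row"}, "CompanyCode": "1000", "Amount": "250.00"},
            {"__metadata": {"type": "DEMO.Row"}, "CompanyCode": "2000", "Amount": "90.50"},
        ]
    }
})
rows = extract_rows(sample)
```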

SAP Extraction and Integration Tool: BryteFlow SAP Data Lake Builder

- Our SAP extraction and integration tool (BryteFlow SAP Data Lake Builder) helps you extract SAP data automatically from SAP applications like SAP ECC, S/4HANA, SAP BW, and SAP HANA, with business logic intact.

- You get a completely automated setup of data extraction and automated analysis of the SAP source application, with zero coding to be done for any process. You don’t need external integration with third-party tools like Apache Hudi either.

- The SAP integration tool enables automated ODP extraction, using SAP OData Services to extract both initial data and incremental data (deltas). Our SAP ETL tool can connect to SAP Data Extractors or SAP CDS Views to get the data from SAP applications.

- BryteFlow also provides log-based CDC (Change Data Capture) from the database using transaction logs if the underlying SAP database is accessible. It merges deltas automatically with initial data to keep the SAP Data Lake or SAP Data Warehouse continually updated for real-time SAP integration.

- Our SAP integration tool ensures ready-to-use data in your SAP Data Lake or SAP Data Warehouse for Analytics and Machine Learning. Best practices and high performance for SAP integration on Snowflake, Redshift, S3, Azure Synapse, ADLS Gen2, Azure SQL DB, SQL Server, Kafka, Databricks, Postgres and Google BigQuery are built in.