SAP SLT in HANA: How to use SLT Transformation Rule

SAP SLT in HANA Replication: an overview, use case scenarios where SLT replication can be used, and a tutorial on how to use SLT Transformation Rules to correct or mask data being replicated from the source at table level.

Quick Links

- What is SAP SLT?

- SAP SLT Replication in SAP HANA

- SLT in SAP HANA, why is it needed?

- SAP SLT Replication Use Cases

- SAP SLT in HANA: Data Transformation

- How to Assign SLT Transformation Rule Step by Step (Tutorial)

- SAP SLT Replication with BryteFlow

What is SAP SLT?

SAP SLT stands for SAP Landscape Transformation Replication Server, and it is a component of the SAP Business Technology Platform. As the name suggests, it replicates data from SAP applications and non-SAP systems in real time to the SAP HANA database. SAP SLT is an SAP ETL tool that delivers real-time data using trigger-based Change Data Capture (CDC), unlike SAP BO Data Services (BODS), which does batch replication. Trigger-based CDC means that database triggers on the source tables record every insert, update, and delete in logging tables, and SLT reads the deltas from those logging tables.
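To make the trigger mechanism concrete, here is a minimal Python sketch that uses an in-memory SQLite database rather than an SAP system. The table names `orders` and `orders_log`, the trigger names and the `read_deltas` helper are all hypothetical and only illustrate the idea; SLT generates its own triggers and logging tables in the source system.

```python
import sqlite3


def build_demo() -> sqlite3.Connection:
    """Create a source table, a logging table, and one trigger per operation."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL);
        CREATE TABLE orders_log (
            seq INTEGER PRIMARY KEY AUTOINCREMENT,  -- preserves change order
            op  TEXT,                               -- I, U or D
            id  INTEGER                             -- key of the changed row
        );
        CREATE TRIGGER orders_ins AFTER INSERT ON orders
        BEGIN INSERT INTO orders_log (op, id) VALUES ('I', NEW.id); END;
        CREATE TRIGGER orders_upd AFTER UPDATE ON orders
        BEGIN INSERT INTO orders_log (op, id) VALUES ('U', NEW.id); END;
        CREATE TRIGGER orders_del AFTER DELETE ON orders
        BEGIN INSERT INTO orders_log (op, id) VALUES ('D', OLD.id); END;
        """
    )
    return conn


def read_deltas(conn: sqlite3.Connection) -> list:
    """Return the logged changes in order and clear the logging table."""
    rows = conn.execute("SELECT seq, op, id FROM orders_log ORDER BY seq").fetchall()
    conn.execute("DELETE FROM orders_log")
    return [(op, row_id) for _, op, row_id in rows]


conn = build_demo()
conn.execute("INSERT INTO orders VALUES (1, 10.0)")
conn.execute("UPDATE orders SET amount = 12.5 WHERE id = 1")
conn.execute("DELETE FROM orders WHERE id = 1")
deltas = read_deltas(conn)
print(deltas)
```

The application only writes to `orders`; the triggers fill the logging table as a side effect, and the replication process polls that table for deltas.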

Please Note: SAP OSS Note 3255746 has notified SAP customers that use of SAP RFCs for extraction of ABAP data from sources external to SAP (On premise and Cloud) is banned for customers and third-party tools.

SAP SLT Replication in HANA

For organizations using HANA as a reporting and analytics database, the priority is to replicate data to HANA fast and with a minimum of effort for timely reporting and business insights. SAP SLT in HANA provides real-time and scheduled replication from SAP and non-SAP systems, both on premise and in the Cloud. SAP SLT Server has low impact on source systems and is well suited to basic transformation. SLT replication ensures organizations have access to their data in HANA whenever they need it. SAP SLT does not have as wide a range of data quality transform options as other SAP ETL tools like SAP Data Services (BODS) or HANA Smart Data Integration (SDI). However, it is easy to set up and maintain.

SLT as a product has been around for a long time and has been developed as a replication tool for SAP HANA since 2010, as Data Migration Server 2010 (DMIS). Over time SLT has become an ETL tool that enables real-time data replication with Change Data Capture to SAP BW, SAP HANA, SAP Data Services, and native SAP applications.

SLT in SAP HANA, why is it needed?

SAP SLT enables organizations to integrate and use data for analytics and to align the current SAP system environment with changes that may happen due to business restructuring, company mergers, updates to current processes, integration of data from an acquisition, or removal of data because of the sale or spin-off of a subsidiary. These are some of the scenarios that could call for SLT HANA replication:

Integration of SAP Systems post-merger

When organizations undergo mergers, they need to be fast in modifying processes and systems and in integrating SAP data securely and flexibly from disparate systems in HANA, so that the organization keeps functioning smoothly with minimal disruption.

Spinning off and sale of subsidiaries

When a unit is being sold or carved off, IT teams need to cope with ensuing challenges for the spin-off to be accomplished within the specified duration. Data relevant to the sold or spun off company will need to be removed from the SAP HANA database without adverse effect.

Automating data migration

An organization may need to migrate its data from an SAP system like ECC or from a non-SAP source to SAP HANA. There could be multiple systems from which data needs to be collected and standardized to provide transformed data for Analytics or ML. SAP SLT is low impact, easy to set up and integrates well with HANA.

Changes within the organization

Major changes in the organization’s structure can be a reason for implementing transformation projects. Error-free ETL is integral to carrying out organizational changes, but data replication must be low impact and must not disrupt business operations. SLT in HANA is extremely low impact.

SAP SLT Replication Use cases include:

- Real-time data integration and delivery for Reporting and Dashboards

- Minimizing admin effort for master data updates done frequently

- Aligning and syncing data between multiple SAP ERP systems

- Moving to SLT from another solution to enable real-time replication

- Using SLT in tandem with BODS to deliver real-time replication

- Batch replication for EDWs

SAP SLT in HANA: Data Transformation

When organizations replicate data from different sources for analytics, it is a given that formats will not be the same. It is crucial that the data should be delivered without errors, in a uniform format that can be easily consumed. On conversion the data format needs to match the destination system format. To make data usable, data transformation removes duplicates, enriches datasets, and converts data types. It also helps define the structure, maps data, transforms it and stores data in the suitable dataset.
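As a rough illustration of what removing duplicates and converting data types can look like, here is a small Python sketch. It is independent of SLT; the column names `id`, `amount` and `posted`, the `YYYYMMDD` date layout and the `transform` function are assumptions made up for this example.

```python
from datetime import datetime


def transform(rows):
    """Drop rows with a repeated id and convert string fields to typed values."""
    seen = set()
    result = []
    for row in rows:
        if row["id"] in seen:
            continue  # duplicate key: keep only the first occurrence
        seen.add(row["id"])
        result.append(
            {
                "id": int(row["id"]),
                "amount": float(row["amount"]),
                # assumed source layout: YYYYMMDD character string
                "posted": datetime.strptime(row["posted"], "%Y%m%d").date(),
            }
        )
    return result


raw = [
    {"id": "1", "amount": "10.50", "posted": "20240131"},
    {"id": "1", "amount": "10.50", "posted": "20240131"},
    {"id": "2", "amount": "7", "posted": "20240201"},
]
clean = transform(raw)
print(clean)
```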

Transformation Rules for SAP SLT Replication in HANA.

When replicating data from an SAP system or non-SAP system to HANA using SAP Landscape Transformation, data may need to be manipulated in various ways. For example, some sensitive data may need to be masked for compliance and security purposes. Another issue that may arise concerns date replication: date types from some sources are not recognized by SLT and are replicated with values of “00000000” or similar. This is where SLT Transformation Rules can make a difference.

When replicating data from an SAP system or non-SAP system to HANA using SAP Landscape Transformation, rules can be used to transform the data being transferred. Transformation Rules are ABAP-based and function like a Customer Exit. What are Customer Exits? SAP has provided ‘hooks’ or Customer Exits in standard programs on which customers can ‘hang’ or create functionality customized for certain business requirements. With SLT’s transformation rules, organizations have access to the transferred record and can modify it as per business requirements. The transformation rules can be used to transform data in the initial full load and in the subsequent replication process.

However, before replication begins, the parameters that SLT will use to replicate data must be defined in a configuration, which contains information about the source, the target and the various connections. The configuration can be created using SAP LT Replication Server Cockpit (Transaction LTRC).

What exactly is the SLT Transformation Rule?

An SLT Transformation Rule can be thought of as a layer between the source and target systems, where users can write code to manipulate the source system data to their requirements. Rules can be assigned under Rule Assignment in Advanced Replication Settings in SLT.

Commonly encountered SAP SLT HANA Replication requirements and issues

During the SAP SLT HANA replication we may often encounter the following requirements:

- Masking a source field in the SAP database, so it won’t be replicated in the target.

- Rectifying dates that display an incorrect value, specifically “00000000”, because SAP SLT does not recognize the date formats of some sources and databases.

How to Assign SLT Transformation Rule Step by Step (Tutorial)

In this scenario we have used SAP SLT to transfer data from an SAP system to SAP HANA and assigned SLT transformation rules to transform the data (masking and correcting date format).

System Setup: SLT ODP Replication via OData service

How to Configure the SLT

Follow the steps below to replicate tables with SLT via ODP through an OData service.

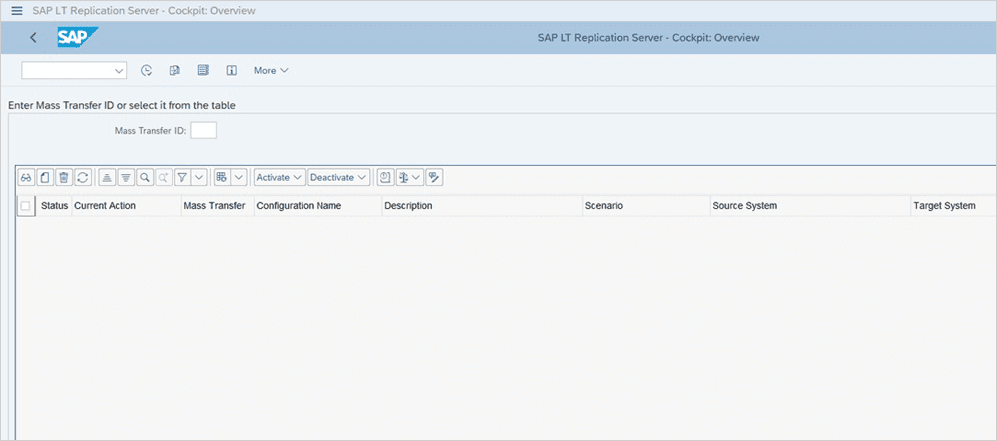

Step 1: Go to Transaction LTRC

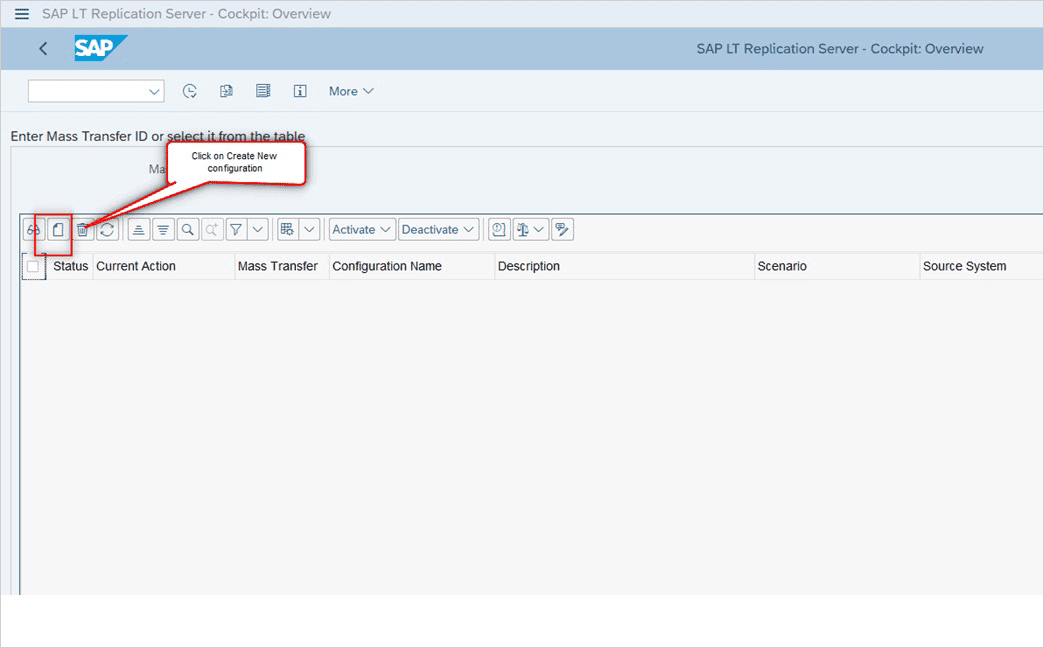

Step 2: Create a new configuration for the SLT as ODP by clicking on ‘Create New Configuration’

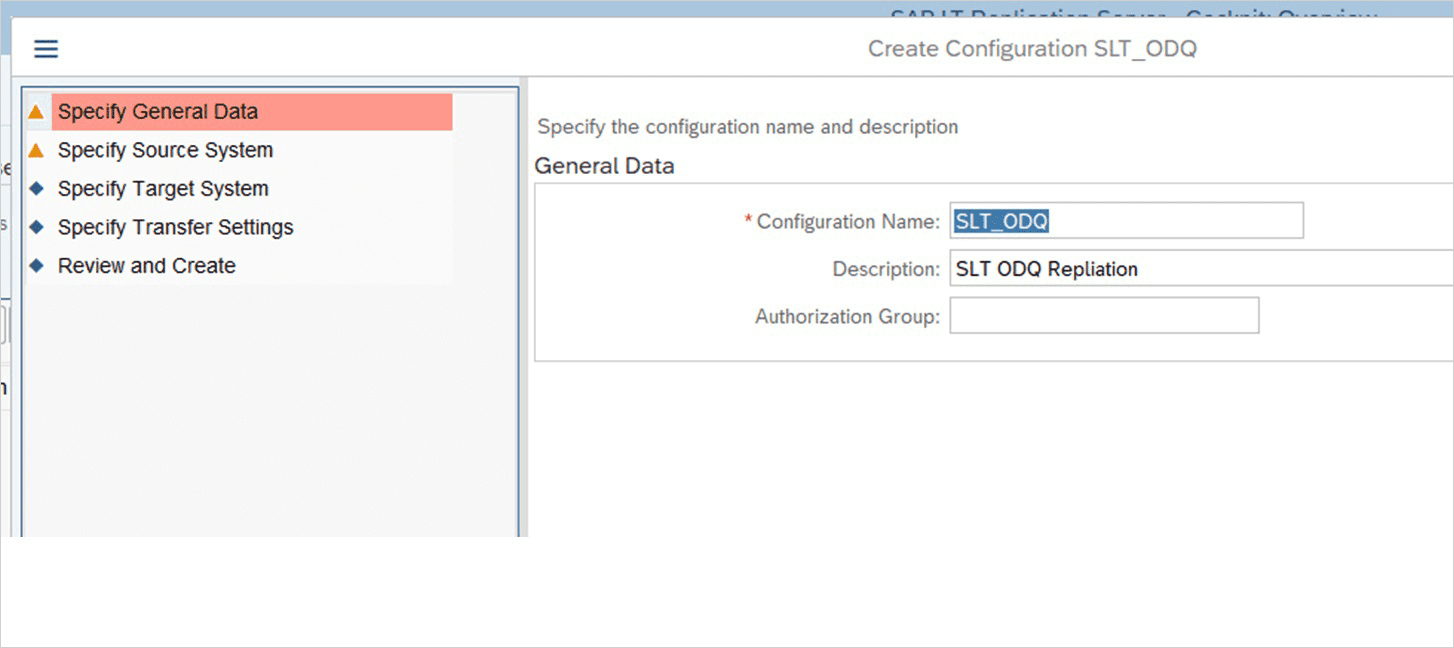

Step 3: Please give the Configuration a Name and Description.

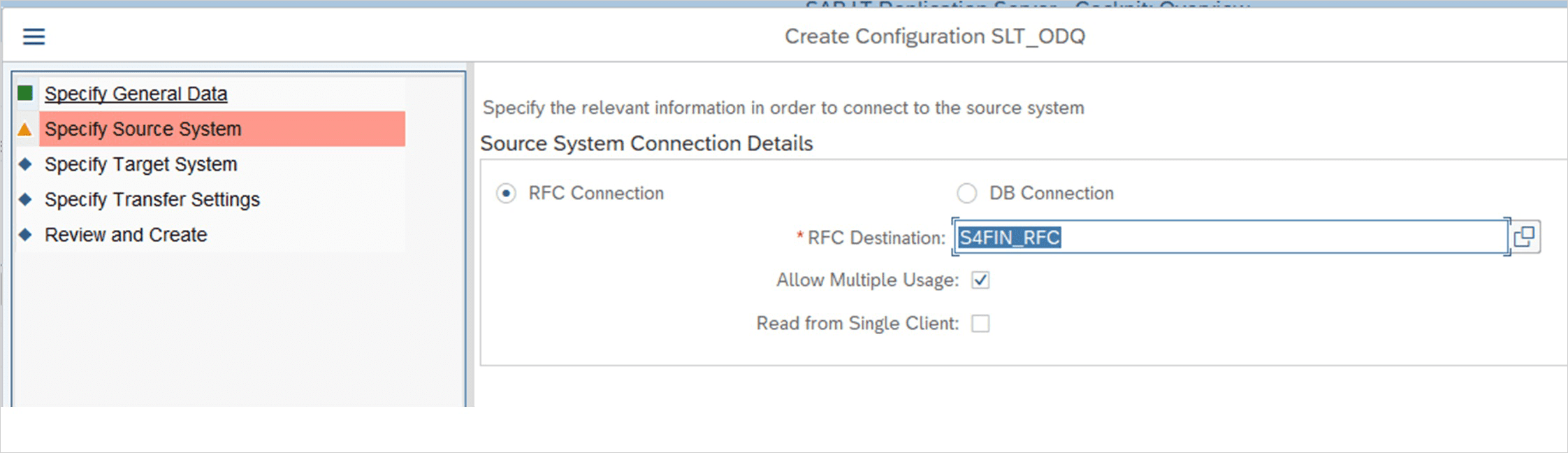

Step 4: Choose Source System DB connection.

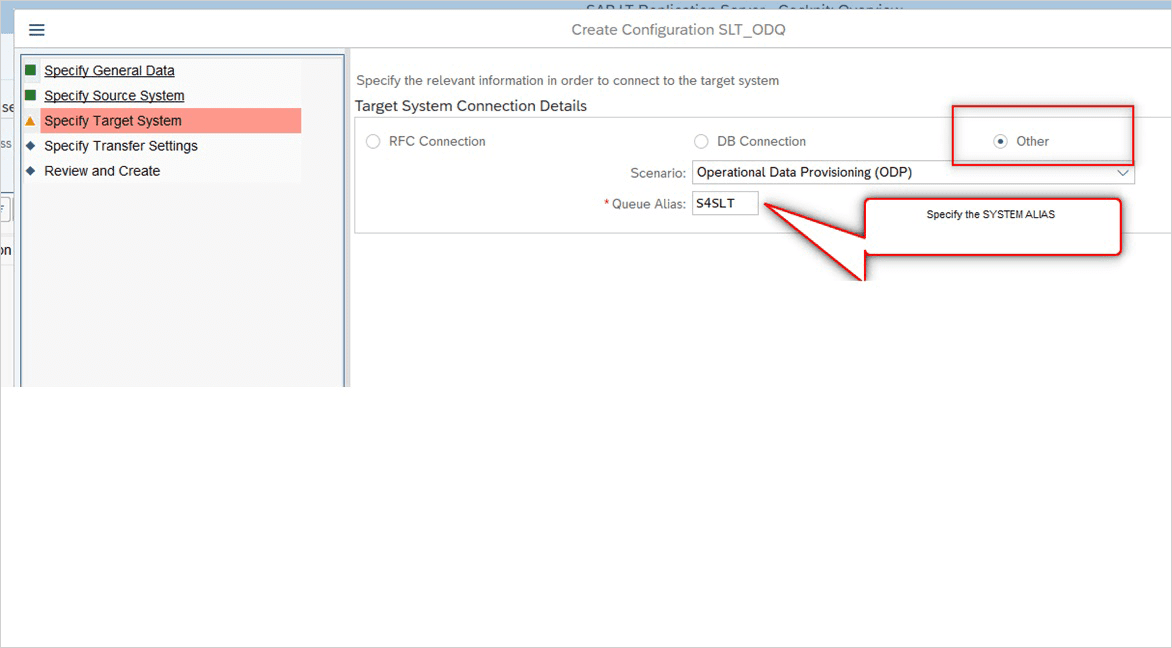

Step 5: Choose the Target System as ‘Other’ and select the ODP scenario and Queue Alias Name.

- Queue Alias name is mandatory.

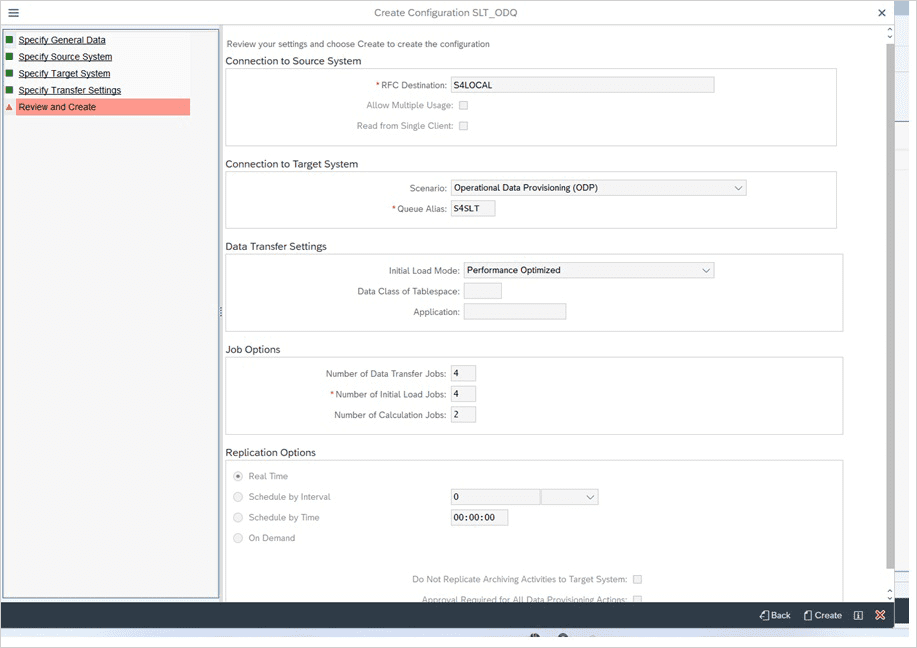

Step 6: Review and create the configuration.

Assigning the SLT Transformation Rules

With the SLT Transformation Rule we can write the code to manipulate and transform the source system data to our needs.

Use case for our SLT in HANA replication scenario

- Masking a source field in the SAP source system, so it won’t be replicated in the target.

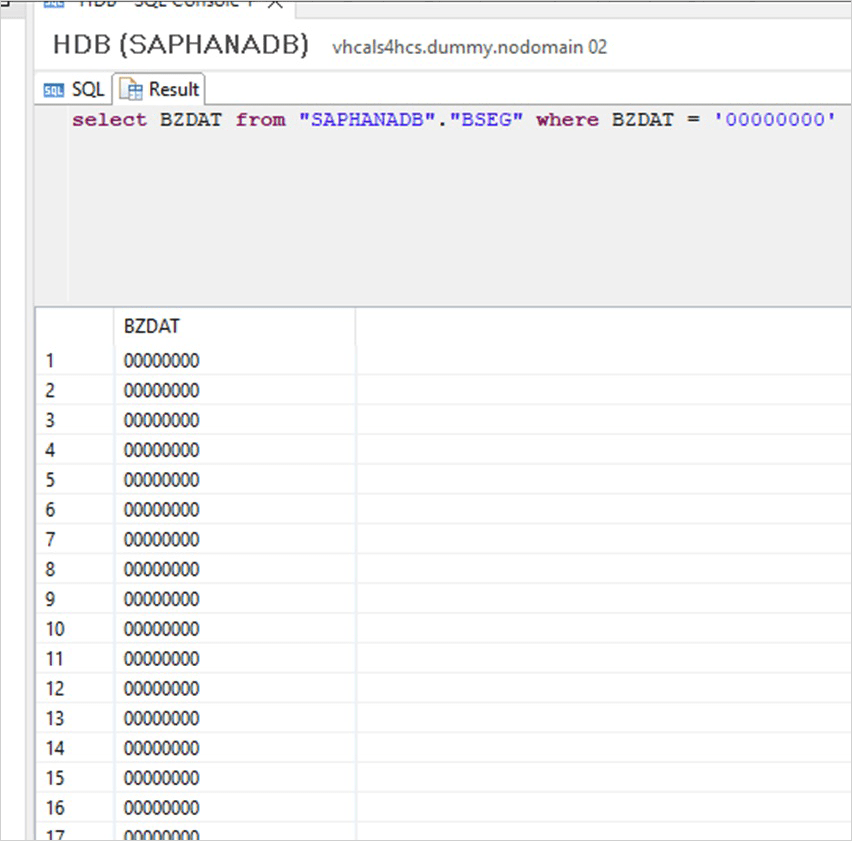

- Correcting a date that displays an incorrect value, specifically “00000000”, because SLT cannot recognize the format.

Both cases can be handled in SLT using transformation rules.

How to Use the Transform Rule Application?

We explain these steps using the table BSEG, in which we correct the incorrect date data and mask a source field.

Once the BSEG table has been added to replication, follow the steps below:

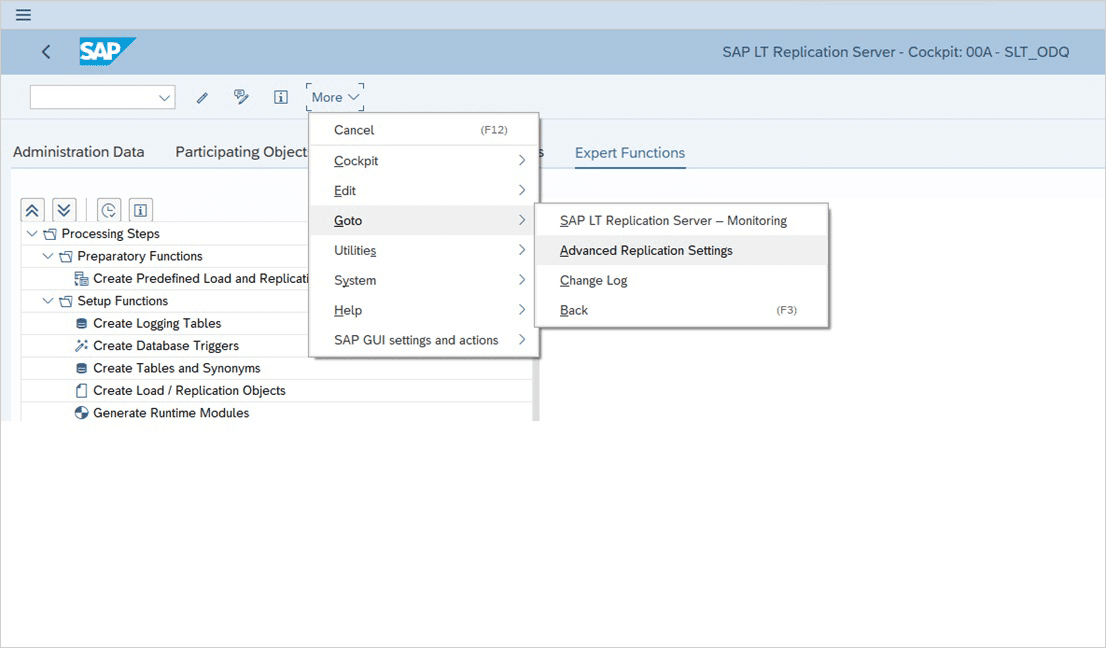

Step 1: Go to transaction LTRC and then to More –> Advanced Replication Settings.

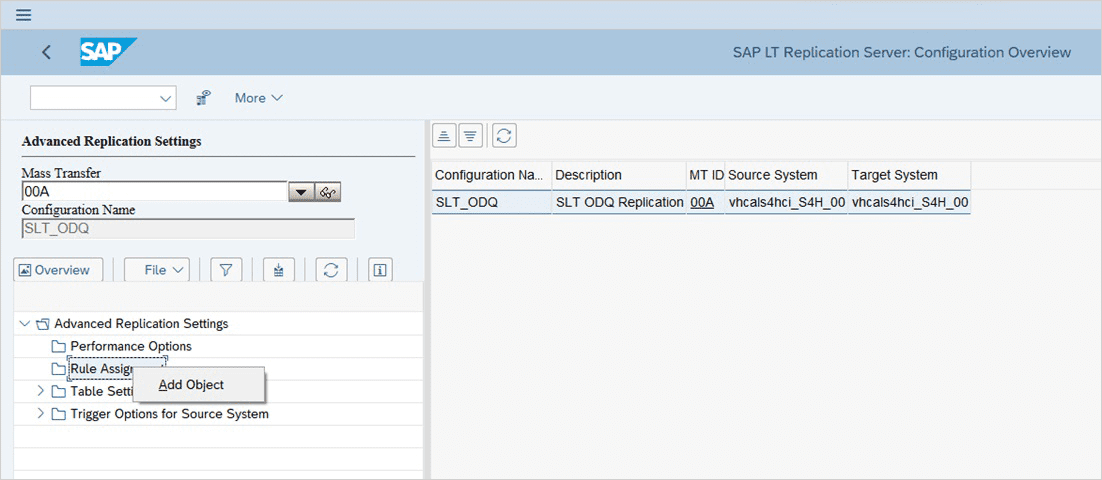

Step 2: Go to Rule Assignment then Add Object – Table BSEG.

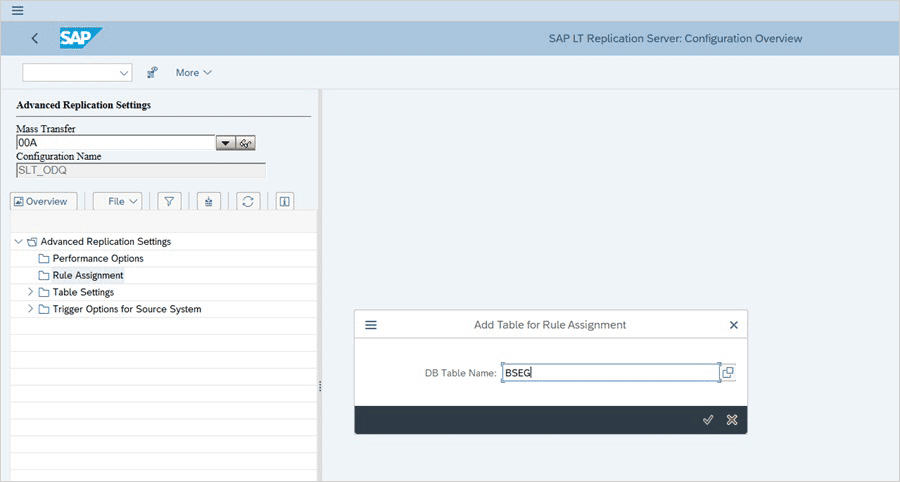

Step 3: Right click on the Rule Assignment and add the DB Table name – BSEG

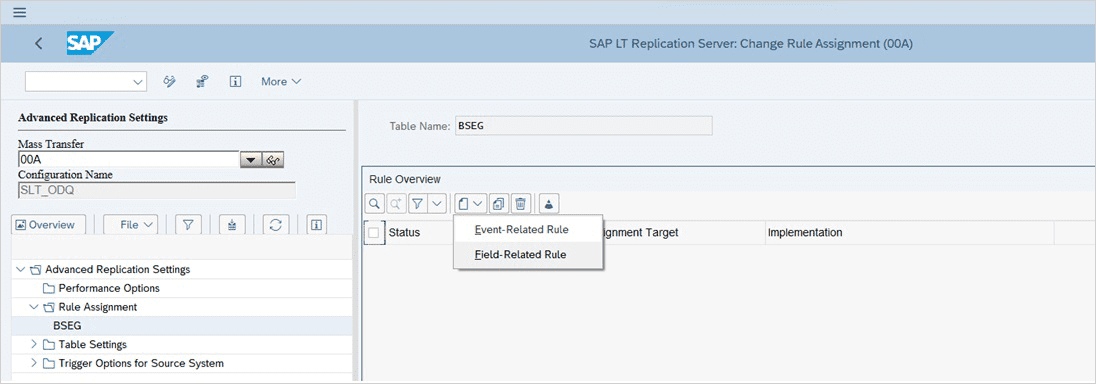

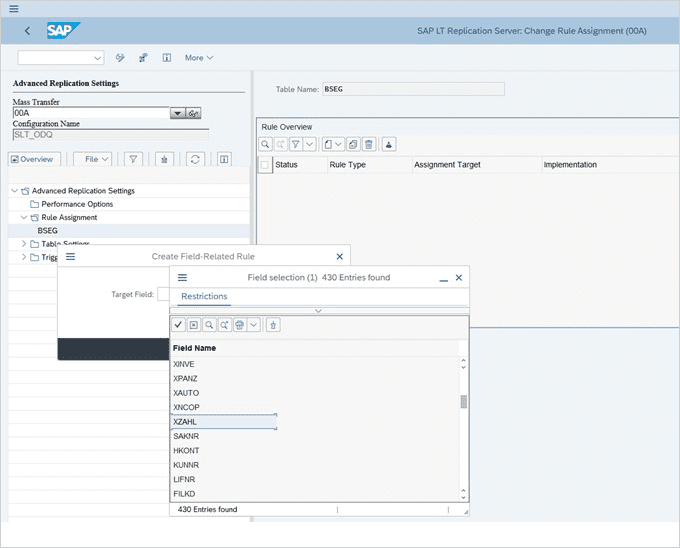

Step 4: Click on ‘Create Option’ and choose Field Related Rule.

Step 5: Please choose the specific field you wish to mask in the source system.

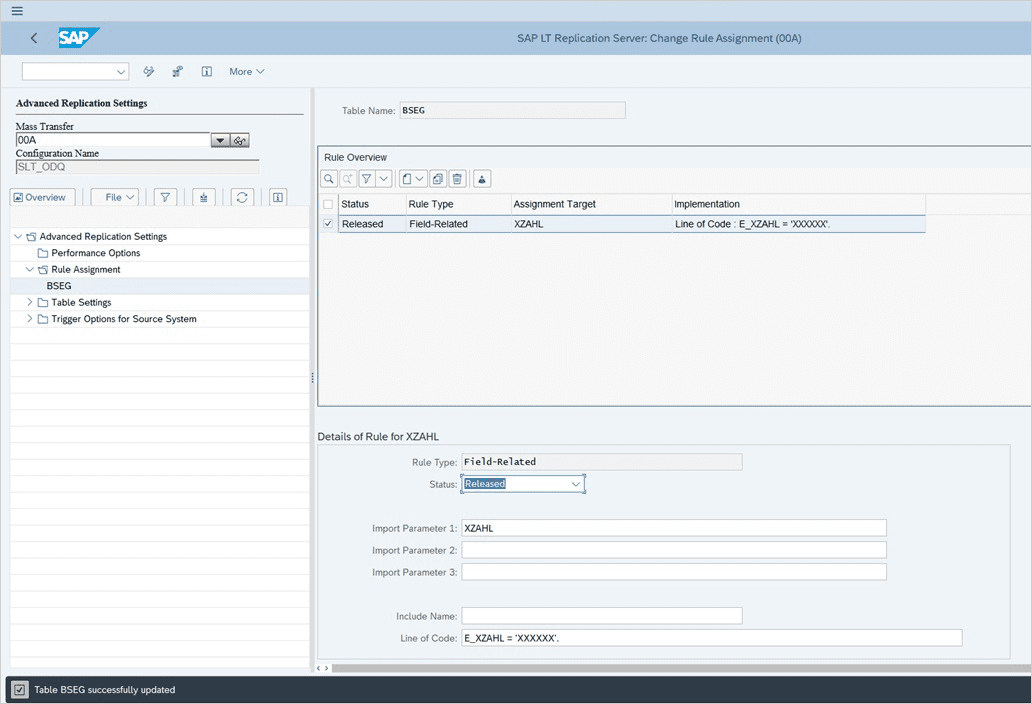

Step 6: Pass the field name as an import parameter and enter the Line of Code, referring to the field with the mandatory E_ prefix (E_ followed by the field name).

Then click Save and OK to finish.
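The effect of such a masking rule can be sketched in Python, outside of SLT. This is only a model of what the rule does to each replicated record, not SLT code: the field name `SGTXT`, the mask value and the `mask_field` helper are assumptions chosen for illustration. By analogy with the date rule later in this tutorial, the Line of Code in SLT would presumably be a single assignment to the `E_`-prefixed field.

```python
def mask_field(record: dict, field: str, mask: str = "XXXX") -> dict:
    """Return a copy of the record with the given field overwritten by a mask."""
    masked = dict(record)  # leave the source record untouched
    if field in masked:
        masked[field] = mask
    return masked


# Hypothetical BSEG-like row; only SGTXT is treated as sensitive here.
source_row = {"BELNR": "0100000001", "BUZEI": "001", "SGTXT": "Payment to J. Smith"}
target_row = mask_field(source_row, "SGTXT")
print(target_row)
```

The source system keeps the original value; only the copy written to the target carries the mask.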

Now let us correct the incorrect date format. Follow the steps below:

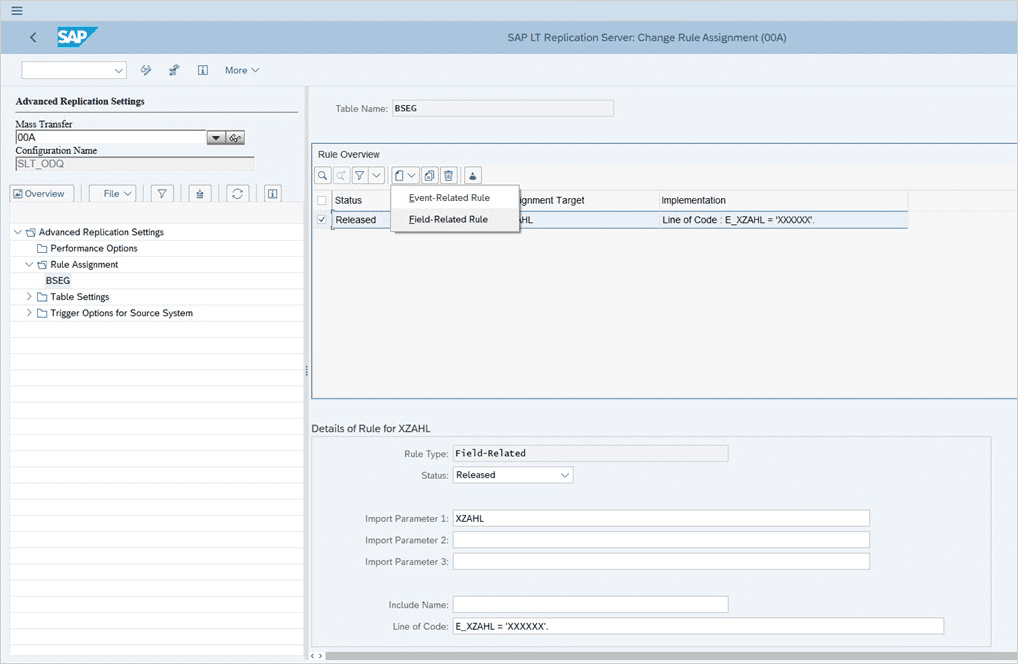

Step 1: Click on ‘Create Option’ and choose ‘Field Related Rule’.

Step 2: Choose the target field you wish to correct.

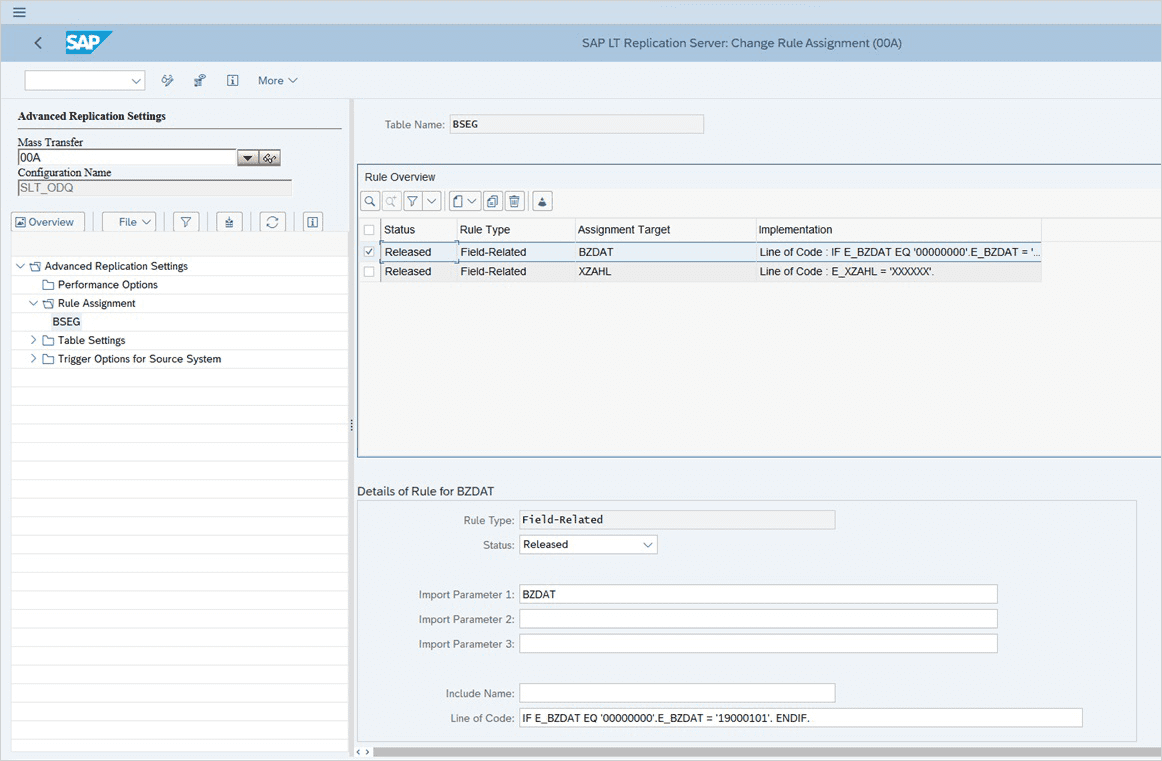

Pass the source field as the Input Parameter and enter the Line of Code as given below:

IF E_BZDAT EQ '00000000'. E_BZDAT = '19000101'. ENDIF.

Click on Save and OK to finish.
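To check what this rule should produce, the same logic can be mirrored in a few lines of Python. This is a sketch for reasoning about expected values, not something that runs inside SLT; the `fix_bzdat` helper and the sample rows are made up for the example, while the field name BZDAT and the two date values come from the rule above.

```python
INITIAL_DATE = "00000000"   # value that arrives when the date is not recognized
DEFAULT_DATE = "19000101"   # replacement value used by the rule


def fix_bzdat(value: str) -> str:
    """Mirror of the rule: replace the initial date, pass everything else through."""
    return DEFAULT_DATE if value == INITIAL_DATE else value


rows = [{"BZDAT": "00000000"}, {"BZDAT": "20240131"}]
fixed = [{**row, "BZDAT": fix_bzdat(row["BZDAT"])} for row in rows]
print(fixed)
```

Whether 1900-01-01 is a suitable placeholder depends on how downstream reports treat that date, so the default is worth agreeing on with the data consumers.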

SAP SLT Replication with BryteFlow

BryteFlow can be used as a standalone SAP ETL tool, or it can be integrated with SLT to deliver ready-to-use data to your data warehouse. BryteFlow integrates with SAP SLT to ETL data at scale from SAP and non-SAP sources to Cloud and On-premise targets with high performance. Replication can be scheduled in real-time or at a desired frequency to various targets including Snowflake, Redshift, S3, Azure Synapse, ADLS Gen2, SingleStore, Azure SQL Database, Google BigQuery, Postgres, Databricks or SQL Server.

BryteFlow’s SAP Data Lake Builder extracts, transforms and loads SAP data from SAP sources like SAP ECC, S/4HANA, SAP BW, SAP HANA using the Operational Data Provisioning (ODP) framework and OData Services, CDS Views or SAP Landscape Transformation Replication Server to deliver data with business logic intact to the SAP Data Lake or SAP Data Warehouse. The setup of data extraction and analysis of the source application is completely automated and no-code. Data is ready-to-use on target for various use cases including Analytics and Machine Learning.

BryteFlow also supports SAP replication at database level through BryteFlow Ingest.

Highlights of the SAP ETL tool: BryteFlow SAP Data Lake Builder

- The SAP ETL tool uses SAP OData Services to extract the data, both initial and incremental or deltas. The tool can connect to SAP Data Extractors, CDS Views or integrate with SAP SLT and automate data extraction.

- Performs log-based automated Change Data Capture from the database using transaction logs if the underlying SAP database access is available.

- Initial data is merged automatically with deltas to keep data in the target continually updated.

- SAP data extraction, CDC, merging, masking, or type 2 history are all automated, no coding needed.

- Data on the target is ready for consumption.

- Best practices and high performance for SAP integration on Snowflake, Redshift, S3, Azure Synapse, ADLS Gen2, Azure SQL DB, BigQuery, Databricks, Kafka, Postgres and SQL Server