SAP HANA to Snowflake migration

Need to migrate SAP HANA data to Snowflake?

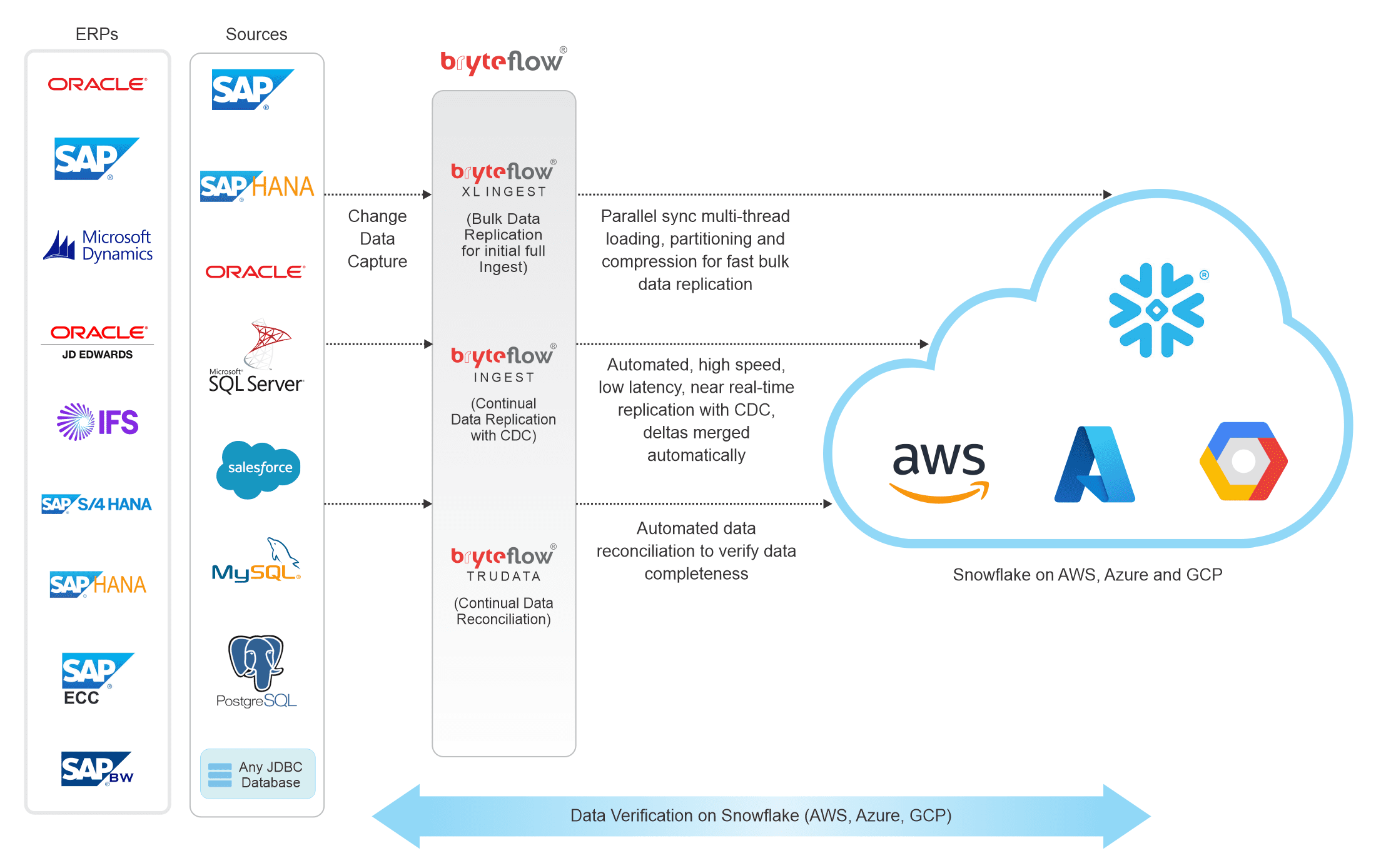

SAP HANA is SAP’s high-performance, in-memory database. It speeds up real-time, data-driven decisions and actions and supports varied workloads. SAP HANA enables advanced analytics on multi-model data in the cloud and on-premises. If you are looking to migrate data from SAP HANA to Snowflake, BryteFlow offers one of the easiest ways to do this with codeless, automated data replication to Snowflake.

Create CDS View on SAP HANA for data extraction

SAP ETL Tool: Extract data from SAP systems with business logic intact

SAP ECC and Data Extraction from an LO Data Source

Codeless SAP HANA Snowflake replication

If you are using Snowflake as your data warehouse, BryteFlow can help you move your data from SAP HANA to Snowflake in near real time and refresh it continually, using Change Data Capture to keep up with changes at source. You won’t need to code at all with BryteFlow’s easy drag-and-drop interface and built-in automation. Just a few clicks to connect and you can begin to access prepared data in Snowflake for analytics. Merge data from any database, file or API with your SAP HANA data and transform it to a consumable format for Snowflake. BryteFlow continually reconciles your data with data at source and alerts you if data is missing or incomplete.

SAP HANA to Snowflake (2 Easy Ways)

SAP BW and creating an SAP OData Service

Why use BryteFlow to move data from SAP HANA to Snowflake?

- SAP HANA to Snowflake low-latency CDC replication with minimal impact on source.

- BryteFlow replication uses very low compute so you can reduce Snowflake data costs.

- Optimised for Snowflake, provides automated data merges and preparation.

- No coding needed, automated interface creates exact replica or SCD Type 2 history on Snowflake.

- Manage large volumes easily with automated partitioning technology and multi-thread loading for high speed.

Easy SAP Snowflake Integration

SAP BODS, the ETL Tool

How to Carry Out a Successful SAP Cloud Migration

About Snowflake Stages

How to load terabytes of data to Snowflake fast

BryteFlow for SAP

5 Ways to extract data from SAP S/4 HANA

Snowflake CDC With Streams and a Better CDC Method

Real-time, automated SAP HANA to Snowflake data replication

BryteFlow replicates huge volumes of SAP HANA data to your Snowflake database fast

When your data tables are true Godzillas, including SAP HANA data, most data replication software rolls over and dies. Not BryteFlow. It tackles terabytes of data for SAP HANA replication head-on. BryteFlow XL Ingest has been specially created to migrate huge volumes of SAP HANA data to Snowflake at super-fast speeds.
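The partitioning and multi-thread loading mentioned above can be pictured with a small sketch. This is not BryteFlow XL Ingest's implementation; it only illustrates the general idea of splitting a large table into partitions and loading them concurrently. The function names and the in-memory "load" step are hypothetical stand-ins.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(rows, size):
    """Split a list of rows into consecutive chunks of at most `size` rows."""
    return [rows[i:i + size] for i in range(0, len(rows), size)]


def load_partition(chunk):
    """Hypothetical stand-in for a bulk load of one partition.

    A real loader would stage and copy the chunk into the warehouse;
    here we only report how many rows were handled.
    """
    return len(chunk)


def parallel_load(rows, partition_size, workers):
    """Load all partitions concurrently and return the total rows loaded."""
    chunks = partition(rows, partition_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(load_partition, chunks))


rows = list(range(10))          # pretend these are table rows
loaded = parallel_load(rows, partition_size=3, workers=4)
print(loaded)
```

Threads suit this kind of work because each partition load mostly waits on network and storage, so several can be in flight at once.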

The SAP Cloud Connector and Why It’s So Amazing

How much time will your Database Administrators need to spend on managing the replication?

Usually DBAs spend a lot of time managing backups, managing dependencies until the changes have been processed, configuring full backups and so on, which adds to the Total Cost of Ownership (TCO) of the solution. The replication user in most of these replication scenarios needs to have the highest sysadmin privileges. With BryteFlow, it is “set and forget”. No ongoing involvement from the DBAs is required, hence the TCO is much lower. Further, you do not need sysadmin privileges for the replication user.

Build a Snowflake Data Lake or Snowflake Data Warehouse without coding

No coding: SAP HANA Snowflake integration is completely automated

Most data tools will set up connectors and pipelines to stream your SAP HANA data to Snowflake, but there is usually coding involved at some point, for example to merge data for basic SAP HANA CDC. With BryteFlow you never face any of those annoyances. SAP HANA data replication, data merges, SCD Type 2 history, data transformation and data reconciliation are all automated and self-service with a point-and-click interface that ordinary business users can use with ease.
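To show what a "merge for basic CDC" involves when done by hand, here is a minimal sketch. It is an illustration only, not BryteFlow's method: the change-record layout (`seq`, `op`, `key`, `row`) and the function name are assumptions made for the example.

```python
from typing import Any


def apply_changes(target: dict[int, dict[str, Any]],
                  changes: list[dict[str, Any]]) -> dict[int, dict[str, Any]]:
    """Apply insert/update/delete change records to a copy of the target.

    Changes are applied in sequence order, so the latest change wins.
    """
    merged = {key: dict(row) for key, row in target.items()}
    for change in sorted(changes, key=lambda c: c["seq"]):
        key = change["key"]
        if change["op"] == "D":
            merged.pop(key, None)                      # delete the row
        elif change["op"] in ("I", "U"):
            # insert a new row, or overlay changed columns on an existing one
            merged[key] = {**merged.get(key, {}), **change["row"]}
        else:
            raise ValueError(f"unknown operation: {change['op']!r}")
    return merged


target = {
    1: {"name": "Acme", "city": "Sydney"},
    2: {"name": "Globex", "city": "Perth"},
}
changes = [  # deliberately out of order; `seq` restores the source order
    {"seq": 3, "op": "D", "key": 2, "row": {}},
    {"seq": 1, "op": "U", "key": 1, "row": {"city": "Melbourne"}},
    {"seq": 2, "op": "I", "key": 3, "row": {"name": "Initech", "city": "Hobart"}},
]
result = apply_changes(target, changes)
print(result)
```

Even this toy version has to handle ordering, partial updates and deletes, which is the kind of hand-written logic an automated tool is meant to remove.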

Data from SAP HANA to Snowflake is monitored for data completeness from start to finish

BryteFlow provides end-to-end monitoring of data. Reliability is our strong focus, as the success of analytics projects depends on it. Unlike other software that sets up connectors and pipelines to SAP HANA source applications and streams your data without checking its accuracy or completeness, BryteFlow makes it a point to track your data. For example, if you are replicating SAP HANA data to Snowflake at 2pm on a Thursday in Nov. 2019, all the changes that happened up to that point will be replicated to the Snowflake database, latest change last, so the data will be replicated with all inserts, deletes and changes present at source at that point in time.

Extract SAP data using ODP and SAP OData Services (2 easy methods)

Data maintains Referential Integrity

With BryteFlow you can maintain the referential integrity of your data when integrating SAP data in Snowflake. What does this mean? Simply put, it means that when changes in the SAP HANA source are replicated to the destination (Snowflake), you can pinpoint the date, the time and the values that changed at the column level.

Data is continually reconciled and checked for completeness in the Snowflake cloud data warehouse

With BryteFlow, data in the Snowflake data warehouse is compared against data in the SAP HANA database continually, or you can choose a frequency for this to happen. It performs point-in-time data completeness checks for complete datasets, including SCD Type 2 history. It compares row counts and column checksums between the SAP HANA database and Snowflake at a very granular level. Very few data integration tools provide this feature.
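As a rough illustration of comparing row counts and column checksums, the sketch below reconciles two small in-memory datasets. It is an assumption-laden example, not BryteFlow's reconciliation logic: the function names and the choice of SHA-256 over sorted values are made up for the demonstration.

```python
import hashlib


def column_checksums(rows, columns):
    """Return one order-independent checksum per column."""
    sums = {}
    for col in columns:
        digest = hashlib.sha256()
        # Sort the values so that row order does not affect the checksum.
        for value in sorted(repr(row[col]) for row in rows):
            digest.update(value.encode("utf-8"))
            digest.update(b"\x00")  # separator between values
        sums[col] = digest.hexdigest()
    return sums


def reconcile(source_rows, target_rows, columns):
    """Compare row counts and per-column checksums of two datasets."""
    src = column_checksums(source_rows, columns)
    tgt = column_checksums(target_rows, columns)
    return {
        "row_count_match": len(source_rows) == len(target_rows),
        "mismatched_columns": [c for c in columns if src[c] != tgt[c]],
    }


source = [{"id": 1, "amount": 100}, {"id": 2, "amount": 250}]
target = [{"id": 2, "amount": 250}, {"id": 1, "amount": 105}]  # one value drifted
report = reconcile(source, target, ["id", "amount"])
print(report)
```

A per-column checksum narrows a problem down to a column without comparing every row pair, though it would not notice values swapped between rows within the same column; a per-row hash would be needed for that.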

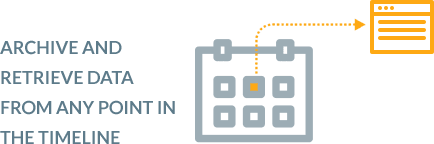

The option to archive data while preserving SCD Type 2 history

BryteFlow provides time-stamped data and the versioning feature allows you to retrieve data from any point on the timeline. This versioning feature is a ‘must have’ for historical and predictive trend analysis.
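Retrieving data "from any point on the timeline" is what SCD Type 2 history makes possible. The following is a minimal sketch under assumed column names (`valid_from`, `valid_to`); it is not BryteFlow's table layout, just a common way to model versioned rows.

```python
from datetime import datetime

# Each version of a record carries the period during which it was current.
# valid_to=None marks the version that is still current.
history = [
    {"key": 1, "city": "Sydney",
     "valid_from": datetime(2019, 1, 1), "valid_to": datetime(2019, 6, 1)},
    {"key": 1, "city": "Melbourne",
     "valid_from": datetime(2019, 6, 1), "valid_to": None},
]


def as_of(history, key, moment):
    """Return the version of `key` that was current at `moment`, or None."""
    for version in history:
        if version["key"] != key:
            continue
        ends = version["valid_to"]
        if version["valid_from"] <= moment and (ends is None or moment < ends):
            return version
    return None


print(as_of(history, 1, datetime(2019, 3, 15))["city"])
print(as_of(history, 1, datetime(2019, 11, 7))["city"])
```

Because old versions are closed off rather than overwritten, the same lookup serves both current reporting and historical trend analysis.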

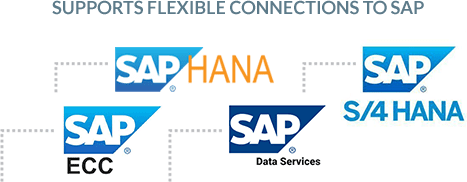

Support for flexible connections to SAP

BryteFlow supports flexible connections to SAP including: Database logs, ECC, HANA, S/4HANA and SAP Data Services. It also supports Pool and Cluster tables. Import any kind of data from SAP into Snowflake with BryteFlow. It will automatically create the tables on Snowflake so you don’t need to bother with any manual coding.

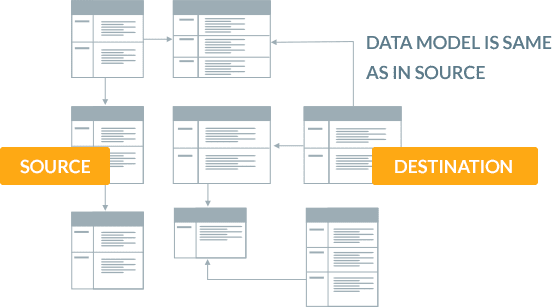

BryteFlow creates a data lake on Snowflake so the data model is as is in source – no modification needed

BryteFlow converts various SAP domain values to standard and consistent data types on the destination. For instance, dates are stored as separate domain values in SAP and sometimes dates and times are separated. BryteFlow provides a GUI to convert these automatically to a date data type on the destination, or to combine date and time into timestamp fields on the destination. This is maintained through the initial sync and the incremental sync by BryteFlow.
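For readers curious what such a conversion does, here is a small sketch. It assumes the usual SAP character formats for date fields (`YYYYMMDD`) and time fields (`HHMMSS`), and treats `00000000` as an empty date. The function name is hypothetical, and this is not BryteFlow's code.

```python
from datetime import datetime
from typing import Optional


def sap_to_timestamp(dats: str, tims: str = "000000") -> Optional[datetime]:
    """Combine an SAP-style date string and time string into one timestamp.

    Returns None for an empty or all-zero date, which SAP uses as the
    initial (unset) value.
    """
    if dats.strip() in ("", "00000000"):
        return None
    return datetime.strptime(dats + tims, "%Y%m%d%H%M%S")


print(sap_to_timestamp("20191107", "140000"))  # date and time combined
print(sap_to_timestamp("20191107"))            # date only, midnight assumed
print(sap_to_timestamp("00000000"))            # unset date
```

Doing this once in the pipeline, for both the initial and incremental loads, means queries at the destination can use native date and timestamp functions directly.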

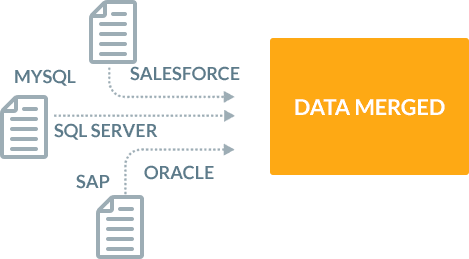

SAP HANA data can be easily merged with data from other sources and transformed, no coding needed

BryteFlow is completely automated. You can merge any kind of data from multiple sources with your data from SAP HANA and transform it, so it is ready to be used at the destination for Analytics or Machine Learning. No coding required – just drag, drop and click.

Automatic catch-up from network dropout

BryteFlow has built-in resiliency. In case of a power outage or network failure you will not need to start the SAP HANA data replication to Snowflake process over again. You can simply pick up where you left off – automatically.
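Picking up where you left off generally relies on some form of checkpointing. The sketch below shows the idea with a JSON checkpoint file and a simulated dropout. It is an illustration under assumptions, not a description of BryteFlow's internals; `replicate`, `fail_at` and the file layout are all invented for the example.

```python
import json
import os
import tempfile


def replicate(batches, checkpoint_path, deliver, fail_at=None):
    """Deliver batches in order, recording progress after each one.

    On restart, delivery resumes from the batch after the last recorded one.
    `fail_at` simulates a network dropout before the given batch index.
    """
    start = 0
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as fh:
            start = json.load(fh)["next_batch"]
    for index in range(start, len(batches)):
        if index == fail_at:
            raise ConnectionError("simulated network dropout")
        deliver(batches[index])
        with open(checkpoint_path, "w") as fh:
            json.dump({"next_batch": index + 1}, fh)


delivered = []
batches = [["r1", "r2"], ["r3"], ["r4", "r5"]]
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "checkpoint.json")
    try:
        replicate(batches, path, delivered.extend, fail_at=1)  # drops mid-run
    except ConnectionError:
        pass
    replicate(batches, path, delivered.extend)  # resumes from the checkpoint
print(delivered)
```

The second call does not resend the first batch, because the checkpoint already records it as delivered.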

About SAP HANA database

SAP HANA is an in-memory database developed by SAP. It is used for database management, advanced analytical processing, application development, and data virtualisation. SAP HANA is basically the backbone of the SAP environment. Organizations install applications that use HANA as a base, such as SAP applications for finance, HR, and logistics. SAP HANA can be run on-prem or in the cloud. It is an innovative, columnar Relational Database Management System (RDBMS) that stores, retrieves and processes data fast for business activities.

About Snowflake Data Warehouse

The Snowflake Data Warehouse, or Snowflake as it is popularly known, is a cloud-based data warehouse that is extremely scalable and high-performance. It is a SaaS (Software as a Service) solution based on ANSI SQL with a unique architecture. Snowflake’s architecture uses a hybrid of traditional shared-disk and shared-nothing architectures. Users can create tables and start querying them with a minimum of preliminary administration.