In this blog we discuss SAP Change Data Capture for ETL and why it is so challenging to extract SAP data. We also look at the steps involved in CDC of data from SAP, and the role played by OData services and the ODP framework in capturing and provisioning data. We study SAP’s delta mechanisms and three popular tools, SAP SLT, SAP Data Services and BryteFlow, that can perform efficient SAP Change Data Capture.

Quick Links

- About Change Data Capture (CDC)

- What is SAP Change Data Capture?

- Why Data Extraction for SAP CDC can be challenging

- SAP Change Data Capture Process in Steps

- SAP Change Data Capture – The Enablers

- SAP CDC Tools

- BryteFlow SAP Data Lake Builder

About Change Data Capture

Change Data Capture, or CDC as it is popularly known, is the process by which changes in a source database are identified and captured, and then delivered in real-time to target systems like databases, data warehouses or data lakes. In environments where real-time data is critical, Change Data Capture provides low-latency, scalable and reliable data replication. It is appropriate for zero-downtime, low-impact Cloud migrations. CDC also powers real-time analytics and data science use cases.

What is SAP Change Data Capture?

As you know, SAP is a complex animal. SAP databases and ERP systems generate and hold massive amounts of data that is constantly being updated, whether it is in sales, finance, manufacturing or logistics. This data needs to be captured for actionable business insights and to optimize business processes. As such, the need for real-time data is paramount in most cases. Consider events where new inventory has been stocked, new customers onboarded, or sales figures need to be updated. It is a constant scramble to keep up with new data. SAP Change Data Capture helps to keep your target data in sync with the sources, efficiently and seamlessly.

Why Data Extraction for SAP CDC can be challenging

SAP systems are complex

SAP systems, as we mentioned, have a large, complex structure, not just in the number of processes but also in the volume of the data. An SAP system typically contains hundreds of thousands of tables with many complex relationships between them. Many of these have 4-character abbreviations and 6-character field codes, so it can be difficult to find the right tables and the connections between them. Also, unlike many databases that run on SQL, SAP has its proprietary ABAP language, logic and processes that can prove difficult to integrate.

SAP systems have governance and access policies

Since SAP systems can contain sensitive data, there may be governance policies in place that relate to extraction of data. SAP teams and stakeholders may need to arrange for required user access and to ensure operational processes are not at risk – which can be a time-consuming process.

Extracting SAP data can be done in different ways which can be confusing

SAP systems contain a lot of SAP-specific object types which may be confusing to users without an SAP background, such as SAP extractors, IDocs, ABAP reports, BADIs, ADSOs and Composite Providers. Using the ODP framework with SAP extractors for delta extraction and incremental loading will need the help of SAP Basis teams.

SAP data extraction may have licensing restrictions

Data extraction and replication from a HANA database may have licensing constraints. Most customers have a HANA Runtime license and legally cannot extract data from the database layer, only from the application layer. Extracting from the database layer under such a license could attract legal action from SAP.

SAP Change Data Capture Process in Steps

Here are the broad steps involved in the SAP CDC process.

SAP CDC Process Step 1: Detecting the Changes

The system identifies alterations in the primary database. Various methods, such as database triggers, log-based capture, or timestamp-based approaches, can be used to identify the changes.

- Log-Based CDC

Here transaction logs of the database are used to detect changes. Every time there is a change in data, it gets recorded in the transaction log, which is then read by the SAP CDC process to recognize the change.

- Triggers-Based CDC

In certain setups, database triggers are employed to capture changes. Triggers are special procedures that automatically run when specific database events (INSERT, UPDATE, DELETE) take place.

- Timestamp-based CDC

By using timestamps to monitor data change timings, the system can identify new or modified records since the previous capture.
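To make the timestamp-based approach concrete, here is a minimal Python sketch. It is an illustration only, not SAP code: the rows, the `changed_at` column and the helper names are assumptions made for this example. Each run picks up the rows changed after the previous capture time and then moves that "watermark" forward.

```python
from datetime import datetime

# Hypothetical source rows; "changed_at" is an assumed last-modified column.
SOURCE_ROWS = [
    {"id": 1, "name": "Widget", "changed_at": datetime(2024, 1, 1, 9, 0)},
    {"id": 2, "name": "Gadget", "changed_at": datetime(2024, 1, 2, 14, 30)},
    {"id": 3, "name": "Gizmo", "changed_at": datetime(2024, 1, 3, 8, 15)},
]


def rows_changed_since(rows, last_capture):
    """Return rows modified after the previous capture time."""
    return [row for row in rows if row["changed_at"] > last_capture]


def next_watermark(changed_rows, last_capture):
    """Advance the watermark to the newest change seen, or keep the old one."""
    return max((row["changed_at"] for row in changed_rows), default=last_capture)


last_capture = datetime(2024, 1, 2, 0, 0)
changed = rows_changed_since(SOURCE_ROWS, last_capture)
last_capture = next_watermark(changed, last_capture)
print([row["id"] for row in changed])  # rows 2 and 3 changed after the watermark
```

Note that a row deleted from the source simply disappears from the scan, so this approach on its own cannot report deletes.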

SAP CDC Process Step 2: Capturing the Changes

The CDC system captures any detected changes, recording specifics such as the altered data, the type of change, and the timing of the change.

SAP CDC Process Step 3: Transforming the Data

Before applying the captured data changes to the target system, they typically need to be transformed to be compatible with the format and structure of the target system.

SAP CDC Process Step 4: Delivering the Data

The target system receives the changes that have been captured. Depending on the objective and use case, this may involve either batch processing or real-time streaming of data.

SAP CDC Process Step 5: Applying the Data Changes

The changes are integrated into the target system and applied to the data. This ensures the target has the latest data and maintains consistency and data accuracy across platforms.
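Steps 2 to 5 can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the change-record layout (`op` plus `data`), the field mapping and the in-memory dictionary standing in for the target table are all invented for the example. KUNNR and NAME1 are used only as familiar SAP-style field names.

```python
def transform(change):
    """Step 3: rename source fields to the target's column names (assumed mapping)."""
    field_map = {"KUNNR": "customer_id", "NAME1": "customer_name"}
    row = {field_map[key]: value for key, value in change["data"].items()}
    return {"op": change["op"], "row": row}


def apply_changes(target, changes):
    """Steps 4 and 5: deliver the changes in order and apply them to the target."""
    for change in changes:
        record = transform(change)
        key = record["row"]["customer_id"]
        if record["op"] == "D":
            target.pop(key, None)  # delete, ignoring keys that are already gone
        else:
            target[key] = record["row"]  # "I" and "U" are both treated as an upsert
    return target


# Step 2: captured changes, each with the type of change and the changed data.
captured = [
    {"op": "I", "data": {"KUNNR": "1000", "NAME1": "Acme"}},
    {"op": "U", "data": {"KUNNR": "1000", "NAME1": "Acme Corp"}},
    {"op": "I", "data": {"KUNNR": "2000", "NAME1": "Globex"}},
    {"op": "D", "data": {"KUNNR": "2000", "NAME1": "Globex"}},
]
target_table = apply_changes({}, captured)
print(target_table)
```

Applying the changes in the order they were captured matters here: replaying the delete before the insert for customer 2000 would leave a stale row on the target.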

SAP Change Data Capture – The Enablers

SAP Change Data Capture can be enabled in different ways. Here we discuss SAP Change Data Capture powered by CDS Views based data extraction and the role played by ODP, OData services and the SAP Gateway in enabling SAP CDC. We also look at trigger-based CDC performed by the CDC engine in SAP S/4HANA.

SAP CDC using CDS Views Based Data Extraction

SAP Core Data Services, or SAP CDS, is an infrastructure for data modelling that enables data models to be defined and consumed on the database server rather than the application server. SAP Change Data Capture can be enabled with CDS-based data extraction in SAP S/4HANA. This approach works efficiently in on-premise and Cloud environments and is typically used to get data from SAP S/4HANA into SAP Business Warehouse (SAP BW) and SAP Data Intelligence (SAP DI).

SAP CDS Views for SAP extraction

CDS-based data extraction is implemented with the help of CDS Views that are defined as extraction views through special CDS annotations (@Analytics.dataExtraction.enabled). CDS Views are virtual data models that allow direct access to the underlying tables of the HANA database. The CDS views that make up the Virtual Data Model (VDM) have consistent modeling and naming rules. They make the information stored in database tables accessible in a format based on business semantics, which is simpler to use. CDS Views can be exposed as an OData Service for accessing and extracting SAP data. They are database independent and can be run on any database system that supports SAP.

The database views created from the CDS extraction views allow for Full Extraction where the complete data is extracted or Delta Extraction which extracts only the changes after the first full extraction. This needs the CDS extraction view to be defined as delta-enabled (@Analytics.dataExtraction.delta.*).

External systems can extract data based on CDS views through several APIs:

Operational Data Provisioning (ODP) Framework

The Operational Data Provisioning (ODP) framework is a component that provides for the extraction of business data from SAP operational systems like SAP S/4HANA, including for analytics. Among other things, it enables data extraction with the use of corresponding APIs and extraction queues. In an on-premise situation SAP BW can call an RFC provided by the ODP framework in SAP S/4HANA, while in SAP S/4HANA Cloud a SOAP (Simple Object Access Protocol) service can use the same API. SOAP is an XML-based protocol for exchanging structured information via Web services.

Cloud Data Integration (CDI) API

CDS-based data extraction can also be done through the Cloud Data Integration (CDI) API. CDI provides a data extraction API based on open standards and supports data extraction through OData services. This process is also based on the ODP framework. The consumer operator in SAP Data Intelligence can be used to extract data from SAP S/4HANA through the CDI OData API.

ABAP CDS Reader Operator

The ABAP CDS reader operator is another option you can use to extract data into SAP Data Intelligence. This is done using an RFC through WebSocket to extract data from the SAP ABAP pipeline in SAP S/4HANA. The ABAP pipeline engine can run ABAP operators used in data pipelines in SAP Data Intelligence, like the ABAP CDS reader operator. Note: according to SAP Note 3255746, SAP now prohibits the use of RFCs for data extraction by SAP-external systems.

How OData and the ODP Framework enable SAP CDC

The Operational Data Provisioning (ODP) framework is a data distribution framework for data provisioning and consumption. It can be used to connect SAP systems and provides a technical infrastructure to enable SAP extraction and replication of deltas. ODP is recommended for SAP extraction and replication from SAP (ABAP) applications to various target applications including SAP BW, SAP BW/4HANA, SAP Data Services and SAP HANA Smart Data Integration (SAP HANA SDI). ODP enables loading of deltas from various data sources such as ABAP CDS views, ADSOs, and SAP extractors. ODP first appeared in SAP BW 7.40 in a basic form but has evolved over the years to its current form for SAP BW/4HANA. Right now, ODP is the preferred mode for SAP extraction and replication.

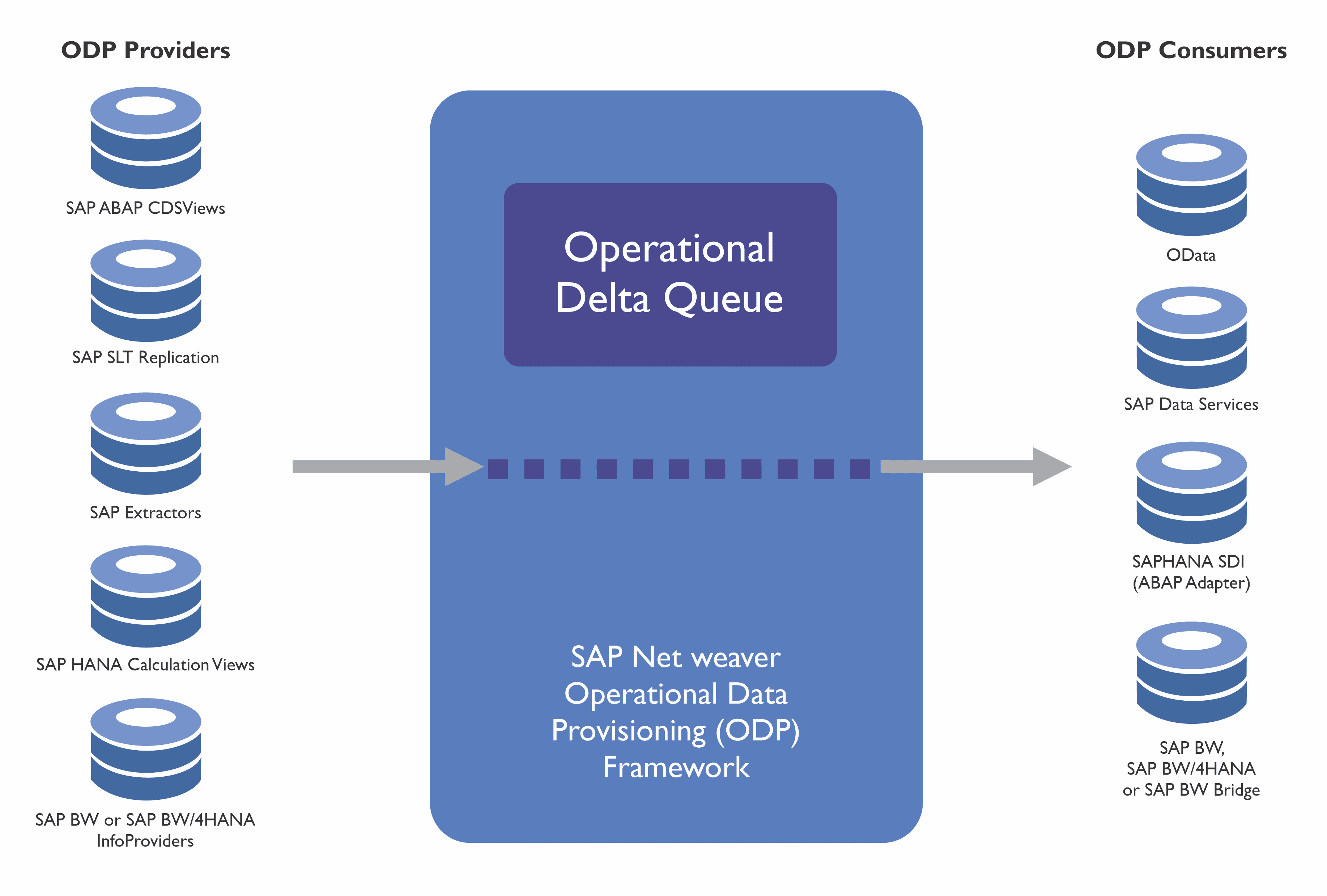

How ODP supports delta extraction with the Operational Delta Queue

To support loading of deltas, data from a source is either written automatically to a delta queue by an update process or passed to the delta queue through an SAP extractor interface. This delta queue is called the Operational Delta Queue (ODQ) and can be monitored with transaction code ODQMON. The sources that can deliver data to the delta queues are called ODP Providers and include ABAP Core Data Services Views (ABAP CDS Views), DataSources (Extractors), SAP BW or SAP BW/4HANA (InfoObject, DataStore Object), SAP Landscape Transformation Replication Server (SLT), and SAP HANA Information Views (Calculation Views). The target applications, also called ODP Consumers or Subscribers, get the data from the delta queue and process it on an ongoing basis. The delta queues thus also help to monitor data extraction and data replication.
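The provider, queue and subscriber relationship can be pictured with a toy Python model. This is a conceptual sketch only and says nothing about how the ODQ is actually implemented; the class, its methods and the subscriber names are assumptions made for illustration. The point it shows is that each subscriber keeps its own read position, so several consumers can draw deltas from one queue independently.

```python
class DeltaQueue:
    """Toy delta queue: providers append changes, each subscriber keeps its own read position."""

    def __init__(self):
        self._entries = []
        self._positions = {}

    def subscribe(self, subscriber):
        # Assumption for this sketch: a new subscriber starts at the current end of the queue.
        self._positions[subscriber] = len(self._entries)

    def write(self, change):
        """Called by a provider whenever the source data changes."""
        self._entries.append(change)

    def fetch(self, subscriber):
        """Return the unread changes for this subscriber and advance its position."""
        start = self._positions[subscriber]
        self._positions[subscriber] = len(self._entries)
        return self._entries[start:]


queue = DeltaQueue()
queue.subscribe("warehouse")
queue.write({"op": "I", "key": "4711"})
queue.subscribe("data_lake")
queue.write({"op": "U", "key": "4711"})

warehouse_delta = queue.fetch("warehouse")
data_lake_delta = queue.fetch("data_lake")
print(len(warehouse_delta), len(data_lake_delta))
```

In this run the "warehouse" subscriber receives both changes, while "data_lake", which subscribed later, receives only the update. A second fetch by either subscriber returns nothing until new changes are written.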

The Role of OData Services in SAP Change Data Capture

OData represents the default method to connect to and communicate with an SAP backend for data integration objectives. A standard REST-based protocol, OData is based on Web technologies and builds on HTTP, AtomPub, and JSON, using URIs (Uniform Resource Identifiers) to address and access data feed sources. This enables access to information from relational databases, file systems, devices, websites and applications. In SAP systems, OData services are implemented in ABAP and are used to query and update the data held in the system.

If SAP data from Tables or Queries needs to be exposed to an external environment like Fiori or HANA, an API is needed to push out data. A service link, which is the SAP OData Service, can be created with OData and can be accessed online to perform CRUD (Create, Read, Update, and Delete) operations. The SEGW transaction code (SAP Gateway Service Builder) is used to create an SAP OData Service within the SAP environment.

After the service has been generated, it can be used by external applications and standard OData requests can be used to extract the data. OData is based on REST (Representational State Transfer), and all resources of the entity sets are addressed via URLs. Model metadata can also be extracted via URL. Entity sets can have URLs (that represent ODPs) which have different components that can be used to specify the requested data in greater detail.
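As a rough illustration of how such URLs are put together, the Python sketch below assembles an entity set URL with the standard OData query options `$filter`, `$select`, `$top` and `$format`, plus the `$metadata` URL of the service. The host name, service name (`ZSALES_ORDERS_SRV`), entity set and field names are hypothetical placeholders, and the helper functions are our own, not part of any SAP library.

```python
from urllib.parse import quote, urlencode


def build_odata_url(base_url, service, entity_set, filter_expr=None, select=None, top=None):
    """Assemble an OData entity set URL with common query options."""
    path = f"{base_url.rstrip('/')}/{quote(service)}/{quote(entity_set)}"
    options = {}
    if filter_expr:
        options["$filter"] = filter_expr
    if select:
        options["$select"] = ",".join(select)
    if top is not None:
        options["$top"] = str(top)
    options["$format"] = "json"
    # Keep "$" and "," readable; spaces and quotes are percent-encoded.
    return f"{path}?{urlencode(options, quote_via=quote, safe='$,')}"


def metadata_url(base_url, service):
    """URL of the service metadata document (the model description)."""
    return f"{base_url.rstrip('/')}/{quote(service)}/$metadata"


# Hypothetical host, service and entity set, used for illustration only.
BASE = "https://sap.example.com/sap/opu/odata/sap"
url = build_odata_url(
    BASE,
    "ZSALES_ORDERS_SRV",
    "SalesOrders",
    filter_expr="Country eq 'DE'",
    select=["OrderId", "Country"],
    top=100,
)
print(url)
print(metadata_url(BASE, "ZSALES_ORDERS_SRV"))
```

An external application would send an authenticated HTTP GET request to a URL like this. The sketch stops at building the URL so that it runs without access to an SAP system.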

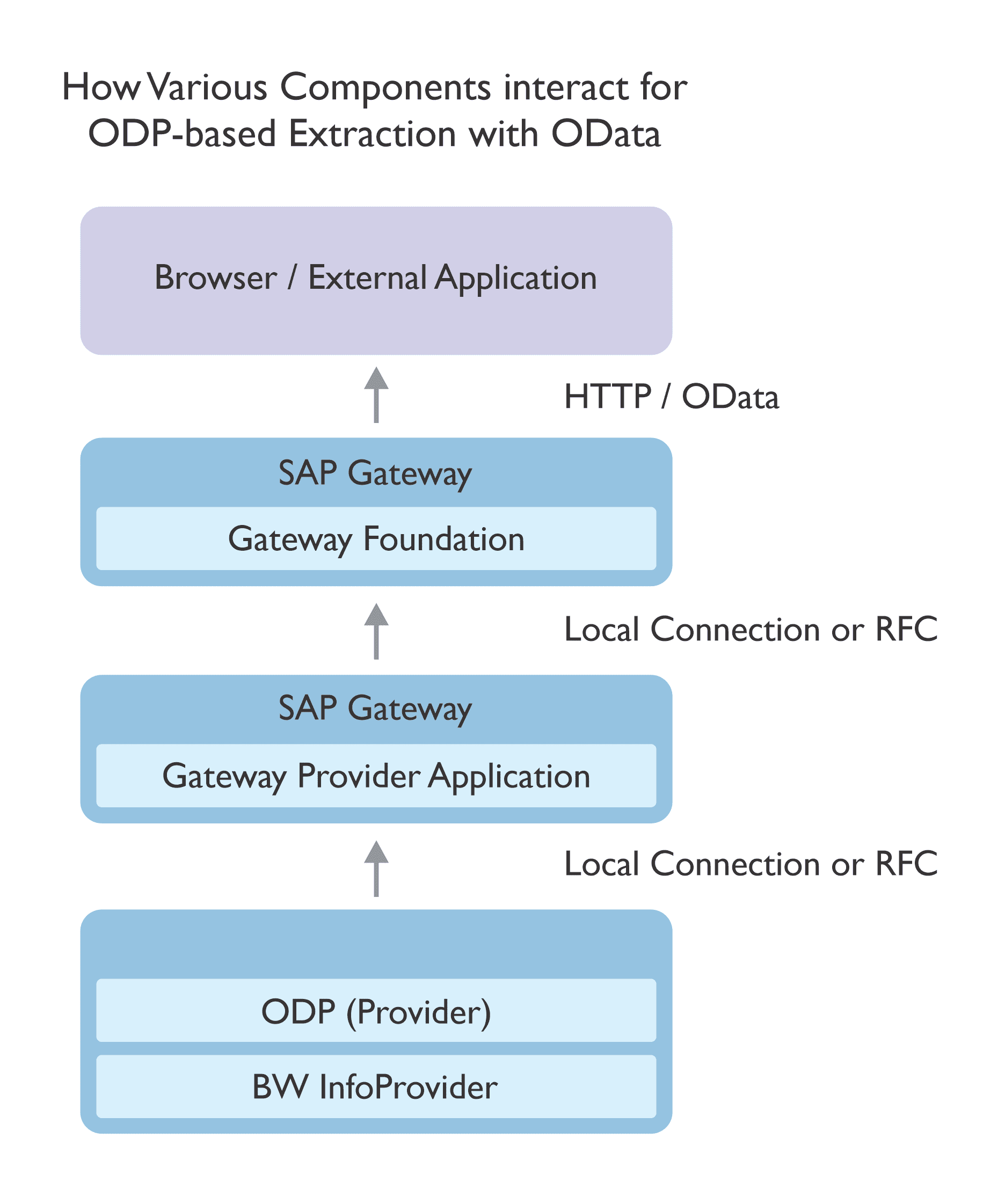

How SAP Gateway helps in SAP CDC

The SAP Gateway is an SAP Connector of sorts that acts like a bridge between SAP systems and external applications. It enables different platforms and applications to access SAP data easily. It makes deployment and development of SAP-based applications simpler and allows for integration of non-SAP applications with SAP systems.

SAP Gateway Service Catalog

Using OData enables sharing of business data across multiple environments and platforms – data that can be consumed without knowledge of SAP. Usually client-based applications consume SAP Gateway services, using the data and interfacing directly with the user. Web browser-based applications, for example, are a common use case. Another use case is for an SAP Gateway service to be used as an Application Programming Interface (API) by a server-based application.

The SAP Gateway Foundation component (SAP_GWFND) provides the foundational technology and infrastructure for the SAP Gateway to enable secure communication between SAP and non-SAP systems. The SAP Gateway Foundation allows for deployment of OData services by providing development and design tools for developers to create, modify, and deploy OData services rapidly, allowing them to expose data from various SAP and non-SAP sources in a standardized, secure way.

Delta Mechanisms in SAP

To capture changes in the SAP source systems there are two basic delta handling mechanisms.

Generic Timestamp or Date based Delta

Performing CDC with timestamps or dates requires that the relevant application tables contain date/time information that is updated whenever the application data changes. It also depends on a date/time element being present in the CDS view that reflects the changes to the underlying database records.

Change Data Capture (CDC) Delta with Triggers

CDC Delta with triggers captures changes based on database triggers in the application tables. It enables provisioning of only those records to the Consumers that have been freshly inserted, changed or deleted. We will now examine in some detail the SAP CDC with triggers process.

Delta Extraction with Trigger-based SAP Change Data Capture

Trigger-based Change Data Capture was first seen in SAP SLT. In SAP S/4HANA, this is implemented by the Change Data Capture engine that executes trigger-based CDC. In this method database triggers are created on underlying database tables of extraction views. When there are changes in the tables (inserts, updates, deletes), the change data is written to the CDC logging tables. The CDS views must be annotated (as mentioned earlier) to make this option possible.

For complex views with multiple tables, the view developer must include information about the mapping between tables and views when defining the CDS extraction view. For simple views the system does the mapping automatically. However, extracting deltas with the trigger-based CDC engine only works efficiently when the views are not overly complex. For instance, the delta-enabled extraction views must not have too many joins or aggregates.

How does trigger-based CDC work in SAP?

With trigger-based CDC, a CDC job is run at regular intervals to extract changes in data from application tables. The CDC engine uses the logging tables to identify the changes, and the database views created from CDS extraction views read the data. The CDC job then writes the extracted data into the delta queue of the ODP framework, from where it is fetched by the designated APIs. Earlier, extraction of deltas was done only on the basis of timestamps, which restricted the process to data models where timestamps were available. Timestamp-based delta extraction has its drawbacks, including an inability to detect deletes. Trigger-based CDC is a more viable option, especially for detecting deletes.
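The general trigger-and-logging-table pattern can be tried out with Python's built-in `sqlite3` module. This sketch uses SQLite rather than SAP HANA, and the table, trigger and function names are invented for the example, so treat it as an illustration of the technique and not of the S/4HANA CDC engine itself. Triggers record every insert, update and delete in a logging table, and a periodic job reads the entries added since its last run.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT);
CREATE TABLE orders_log (
    seq INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT,
    order_id INTEGER
);
CREATE TRIGGER orders_ins AFTER INSERT ON orders
BEGIN
    INSERT INTO orders_log (op, order_id) VALUES ('I', NEW.id);
END;
CREATE TRIGGER orders_upd AFTER UPDATE ON orders
BEGIN
    INSERT INTO orders_log (op, order_id) VALUES ('U', NEW.id);
END;
CREATE TRIGGER orders_del AFTER DELETE ON orders
BEGIN
    INSERT INTO orders_log (op, order_id) VALUES ('D', OLD.id);
END;
""")

# Changes made by the application; the triggers log each one automatically.
conn.execute("INSERT INTO orders VALUES (1, 'open')")
conn.execute("UPDATE orders SET status = 'shipped' WHERE id = 1")
conn.execute("DELETE FROM orders WHERE id = 1")


def read_delta(connection, last_seq):
    """Periodic job: read log entries added since the last run."""
    rows = connection.execute(
        "SELECT seq, op, order_id FROM orders_log WHERE seq > ? ORDER BY seq",
        (last_seq,),
    ).fetchall()
    new_last_seq = rows[-1][0] if rows else last_seq
    return rows, new_last_seq


delta, last_seq = read_delta(conn, 0)
print([(op, order_id) for _, op, order_id in delta])
```

The delete shows up in the log as a 'D' entry even though the row no longer exists in the orders table, which is exactly the case a timestamp scan of the table would miss.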

SAP CDC Tools

There are several native SAP tools that can help with SAP Change Data Capture. Here we discuss SAP SLT and SAP Data Services. There are also several third-party tools that can act as SAP connectors for CDC and provide SAP ETL operations, such as Qlik Replicate, Fivetran and our very own BryteFlow.

SAP Landscape Transformation Replication Server (SAP SLT)

SAP SLT, or the SAP Landscape Transformation Replication Server, is a component of the SAP Business Technology Platform. It replicates data from SAP and non-SAP systems in real-time to the SAP HANA database. SAP SLT delivers real-time data using trigger-based Change Data Capture. Trigger-based CDC means that database triggers record each change to a source table in a logging table, from which the deltas are then captured. SAP SLT is an SAP ETL tool capable of only basic transformation.

SAP SLT Features

- Provides real-time or schedule-based replication from both SAP and non-SAP sources, whether on-premise or in the Cloud.

- Typically, SLT does not attract extra licensing costs and is relatively simple to implement.

- SAP SLT uses a trigger-based CDC approach to moving data: all updates, inserts, and deletes for the table to be replicated are captured.

- It provides data transformation (at a basic level), conversion and filtering functionality so you can tweak data before transferring it to a HANA database.

- Supports configurations for multiple system connections (1:N and N:1 scenarios).

SAP Data Services

SAP Data Services, also called SAP BusinessObjects Data Services (SAP BODS), is a versatile SAP ETL tool that allows you to extract, transform, cleanse, consolidate and deliver data to SAP and non-SAP targets like SAP applications, data marts, data warehouses, flat files, or any operational database. SAP Data Services generally performs batch replication but can deliver data in real-time if required with workarounds (e.g. teaming up with tools like SAP SLT). SAP DS promotes data quality through duplicate record checks, address validation and geocode search. It supports various data sources, including SAP ERP, SAP BW, SAP CRM, SAP HANA, and non-SAP sources.

SAP Data Services Features

- Supports ETL from different sources – databases, applications, files, and unstructured content.

- Has a graphical user interface (GUI) to help developers build and implement complex data integration workflows easily without coding.

- Has a powerful set of transformation functions to cleanse, enrich, and convert data as required.

- Has native access to SAP Business Suite applications. SAP Data Services accesses relevant metadata after comprehending the business model.

- Can read data through SAP interfaces and technologies such as ABAP, IDocs, BAPIs, RFCs, and SAP extractors.

- SAP Data Services has built-in features for data harmonization, profiling, cleansing and data quality.

- SAP Data Services has high scalability. It supports parallel processing, distributed data integration, and load balancing.

- Provides a wide range of SAP connectors to various data sources and targets.

- SAP Data Services is a commercial product and needs to be licensed for use. Maintenance, support, and upgrades may also attract extra charges.

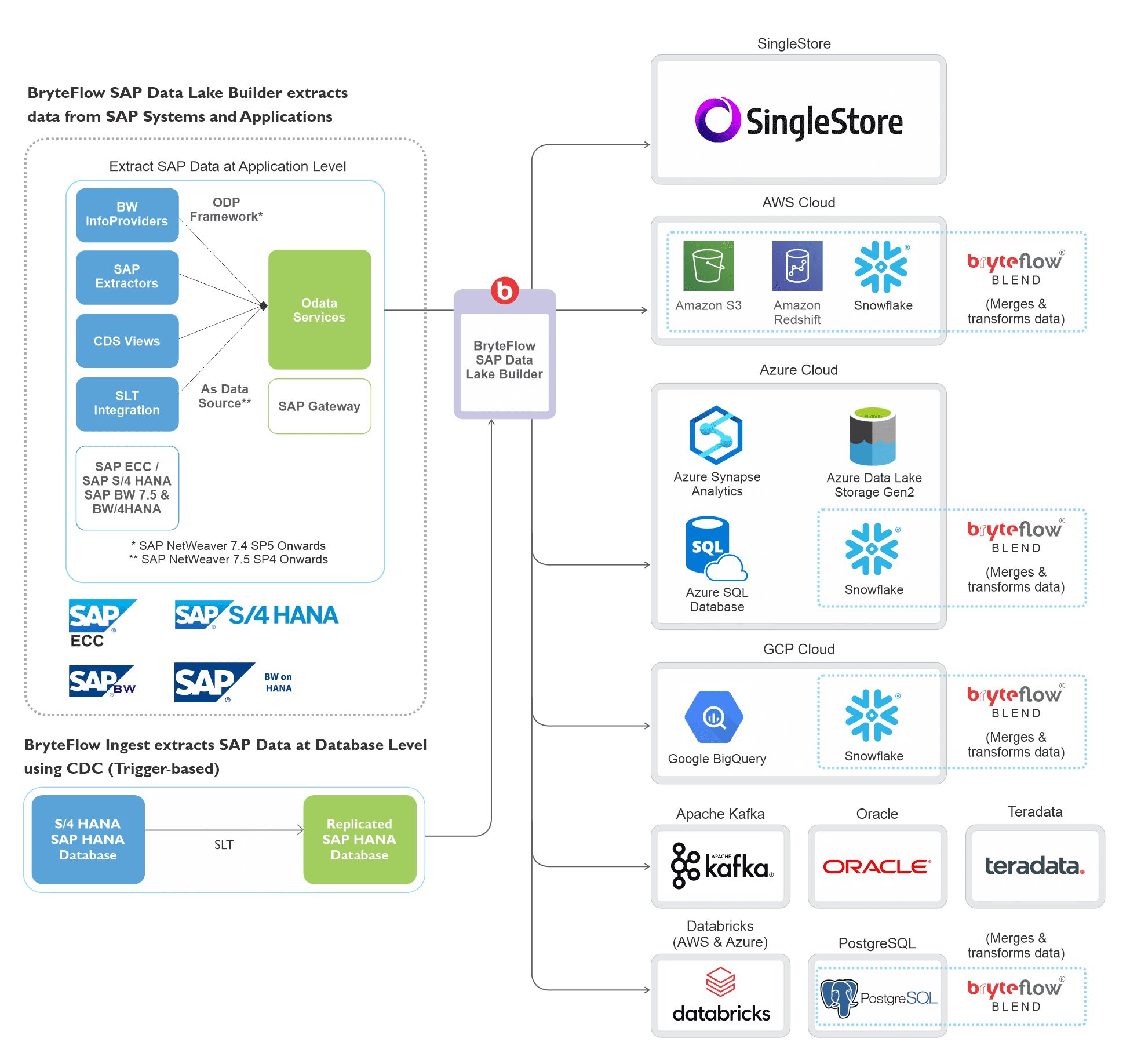

BryteFlow SAP Data Lake Builder

The BryteFlow SAP Data Lake Builder is ideal for CDC ETL of SAP data to Cloud platforms like AWS, Azure and GCP. The BryteFlow SAP Data Lake Builder is a third-party SAP connector that extracts data from SAP applications and replicates data with business logic intact to the target – both On-premise and on the Cloud. It supports integration of petabytes of enterprise data easily and automates SAP data extraction, using OData Services with ODP to extract the data, both initial and incremental. You get a completely automated setup of data extraction and automated analysis of the SAP source application – no coding required.

Highlights of the BryteFlow SAP CDC Tool

BryteFlow SAP Data Lake Builder delivers data with business logic intact

Our SAP ETL tool, the BryteFlow SAP Data Lake Builder, extracts data from SAP applications like SAP ECC, S/4HANA, SAP BW, SAP HANA, SAP SLT and SAP BODS and replicates data with business logic intact. It creates tables and schema automatically on target, saving you time and effort.

Our SAP connector has flexible connections to SAP

The BryteFlow ETL tool connects to SAP Data Extractors or SAP CDS Views to get the data from SAP applications like SAP ECC, S/4HANA, SAP BW and SAP HANA.

BryteFlow completely automates SAP ETL processes

BryteFlow automates every process including SAP data extraction, Change Data Capture, merging, data mapping, masking, schema and table creation and SCD Type 2 history.

Our SAP CDC tool supports loading of high volumes of enterprise data

BryteFlow loads massive volumes of data in real-time, using parallel, multi-thread loading, smart partitioning and compression, and syncs incremental change data using Change Data Capture.

Our SAP CDC Tool merges deltas automatically

BryteFlow merges Deltas (Updates, Inserts, Deletes) automatically with existing data on target to sync with the SAP source in real-time.

BryteFlow SAP Data Lake Builder for SAP Migration

BryteFlow SAP Data Lake Builder can be used to migrate SAP to the Cloud (AWS, Azure, GCP) in specific scenarios including SAP on Oracle to SAP HANA DB. It also supports migration scenarios like migration from SAP ECC Release 3 and 4, SAP database on Oracle, SAP database on SQL Server, SAP S/4HANA Cloud Private Edition (new SAP Rise Cloud- Runtime version) to SAP HANA DB.

BryteFlow uses SAP-Certified Data Extraction methods

BryteFlow SAP Data Lake Builder is one of the very few ETL tools that uses the SAP-approved data extraction method, using ODP and OData Services to extract SAP data, unlike a lot of other tools that use Remote Function Calls (RFCs). This is relevant since according to SAP Note 3255746, SAP is prohibiting the use of RFCs for data extraction, except in the case of SAP-internal applications.

BryteFlow delivers data to SAP and non-SAP destinations

BryteFlow delivers data from SAP systems to Snowflake, Databricks, Redshift, S3, Azure Synapse, ADLS Gen2, Azure SQL DB, SQL Server, Kafka, PostgreSQL and Google BigQuery.

The BryteFlow SAP ETL tool provides ready for consumption data

BryteFlow SAP Data Lake Builder automates data type and file format conversions (Parquet with Snappy compression, ORC) so the delivered data is immediately ready for use for Analytics or ML models.

BryteFlow SAP Data Lake Builder provides very high throughput and low latency

Our SAP ETL tool loads data in real-time with very high throughput, transferring 1,000,000 rows of data in 30 seconds approx. The deployment is very fast, and you can start receiving data in just 2 weeks.

Easy Point-and-Click Interface and Automated Network Catchup

BryteFlow SAP Data Lake Builder has an intuitive, user-friendly interface that any business user can use. It is sparing of your DBA’s time. Furthermore, it also has an automated network catch-up feature that ensures the process resumes from where it left off (in case of network failure), when normal conditions are restored.

Besides SAP applications, BryteFlow also delivers data from SAP databases using CDC

If access to the underlying SAP database is available, the BryteFlow SAP CDC tool can provide log-based or Trigger-based Change Data Capture from sources.

Conclusion

In this blog we have looked at SAP Change Data Capture and the various ways in which it is performed. We have examined the challenges encountered in the SAP CDC process, and studied 3 SAP Change Data Capture tools including our very own BryteFlow SAP Data Lake Builder.