This blog is an alert to organizations that use SAP RFC to extract data from ABAP sources. Going forward, SAP will not permit customers or third-party applications to use the RFC modules of the ODP Data Replication API for data extraction; these modules are reserved for SAP-internal applications. The blog explores how the ODP framework and SAP OData Services can be used to extract data instead. These are SAP-certified mechanisms, and they are the ones BryteFlow uses. Learn how the BryteFlow SAP Data Lake Builder can protect your SAP implementation while also automating real-time ETL of SAP data.

Quick Links

- Use of SAP RFC by external applications to extract ABAP data will not be permitted

- Use SAP RFC within ODP Data Replication API at your own risk

- What is SAP RFC anyway?

- BryteFlow uses SAP-certified mechanisms (ODP and SAP OData Services) to extract data

- How Operational Data Provisioning (ODP) works

- What are SAP OData Services?

- What is SAP Gateway?

- Data Extraction Process using ODP and OData Services

- How does BryteFlow work with ODP and OData Services for extracting SAP data?

- Highlights of the BryteFlow SAP Connector

Use of SAP RFC by external applications to extract ABAP data will not be permitted

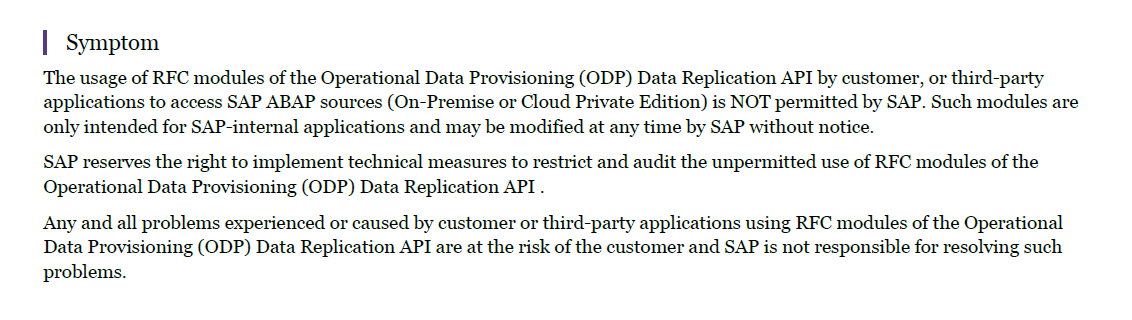

SAP OSS Note 3255746 – Unpermitted usage of ODP Data Replication APIs Component: BC-BW-ODP (Operational Data Provisioning (ODP) and Delta Queue (ODQ)), Version: 4, Released On: 02.02.2024

SAP has notified customers through Note 3255746 (version 4, released on Feb. 2, 2024). The Note states that customers and third-party applications are no longer permitted to use the RFC (Remote Function Call) modules of the Operational Data Provisioning (ODP) Data Replication API to access and extract data from SAP ABAP sources, whether on-premise or Cloud editions.

SAP states unequivocally that these RFC modules are intended only for SAP-internal applications and can be modified by SAP at any time, without notice. SAP also reserves the right to put technical measures in place to restrict and audit unpermitted use of the RFC modules of the ODP Data Replication API. It further states that any issues experienced or caused by customer or third-party applications using these RFC modules are entirely at the customer's risk, and that SAP is not responsible for resolving such issues and will not provide support for them.

A Partial View of the SAP Note (3255746)

Use SAP RFC within ODP Data Replication API at your own risk

If your organization uses SAP RFC modules within the ODP Data Replication API to extract or replicate data, the outlook is grim, and your SAP data implementation may be at risk. After investing thousands of dollars, the last thing you want to hear is that your SAP data extraction mechanisms are neither permitted nor supported. Worse, SAP might put technical measures in place that disrupt or stop these extractions, or even penalize your organization for using RFCs. Of course, your organization isn't alone in this situation. Many large enterprises, including Microsoft and IBM, depend on SAP RFCs to access SAP data, and so do many ETL and data replication tools, including Qlik Replicate, Informatica, Matillion and others.

What is SAP RFC anyway?

SAP RFC (Remote Function Call) is a mechanism that enables communication and exchange of information between business applications running on different systems in the SAP environment. RFC connections can link SAP systems with each other as well as SAP systems with non-SAP systems. An RFC calls a function to be executed in a remote system. There are currently many RFC variants, such as synchronous, asynchronous, transactional and queued RFC, each with different properties and purposes. RFC has two interfaces:

- ABAP Program Calling Interface

- Non-SAP Program Calling Interface
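
To make "calling a function in a remote system" concrete, here is a toy, in-process sketch of the remote-function-call pattern: the caller serializes a function name and named parameters, the "remote" side looks the function up by name and runs it, and the result travels back. It does not use SAP's RFC protocol or the SAP NetWeaver RFC SDK; the JSON encoding and the function name `Z_ECHO_TEXT` are hypothetical stand-ins chosen for illustration.

```python
import json
from typing import Any, Callable, Dict

# "Remote" side: function implementations registered by name. This stands in
# for function modules in a remote system; the name used below is hypothetical.
REMOTE_FUNCTIONS: Dict[str, Callable[..., Dict[str, Any]]] = {}


def remote_function(name: str) -> Callable:
    """Decorator that makes a Python function callable by name."""
    def register(fn: Callable[..., Dict[str, Any]]) -> Callable[..., Dict[str, Any]]:
        REMOTE_FUNCTIONS[name] = fn
        return fn
    return register


@remote_function("Z_ECHO_TEXT")  # hypothetical function name
def echo_text(TEXT: str) -> Dict[str, Any]:
    # Import parameter TEXT in, export parameter ECHOTEXT out.
    return {"ECHOTEXT": TEXT}


def handle_request(payload: bytes) -> bytes:
    """Remote side: decode the request, run the named function, encode the result."""
    request = json.loads(payload)
    fn = REMOTE_FUNCTIONS[request["function"]]
    return json.dumps(fn(**request["params"])).encode()


def call(function: str, **params: Any) -> Dict[str, Any]:
    """Caller side: marshal the function name and parameters, send, unmarshal."""
    payload = json.dumps({"function": function, "params": params}).encode()
    reply = handle_request(payload)  # a real RFC would cross the network here
    return json.loads(reply)


print(call("Z_ECHO_TEXT", TEXT="Hello"))  # {'ECHOTEXT': 'Hello'}
```

The point of the pattern is that the caller only knows a function name and its parameters; everything about where and how the function runs is hidden behind the call.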

BryteFlow uses SAP-certified mechanisms (ODP and SAP OData Services) to extract data

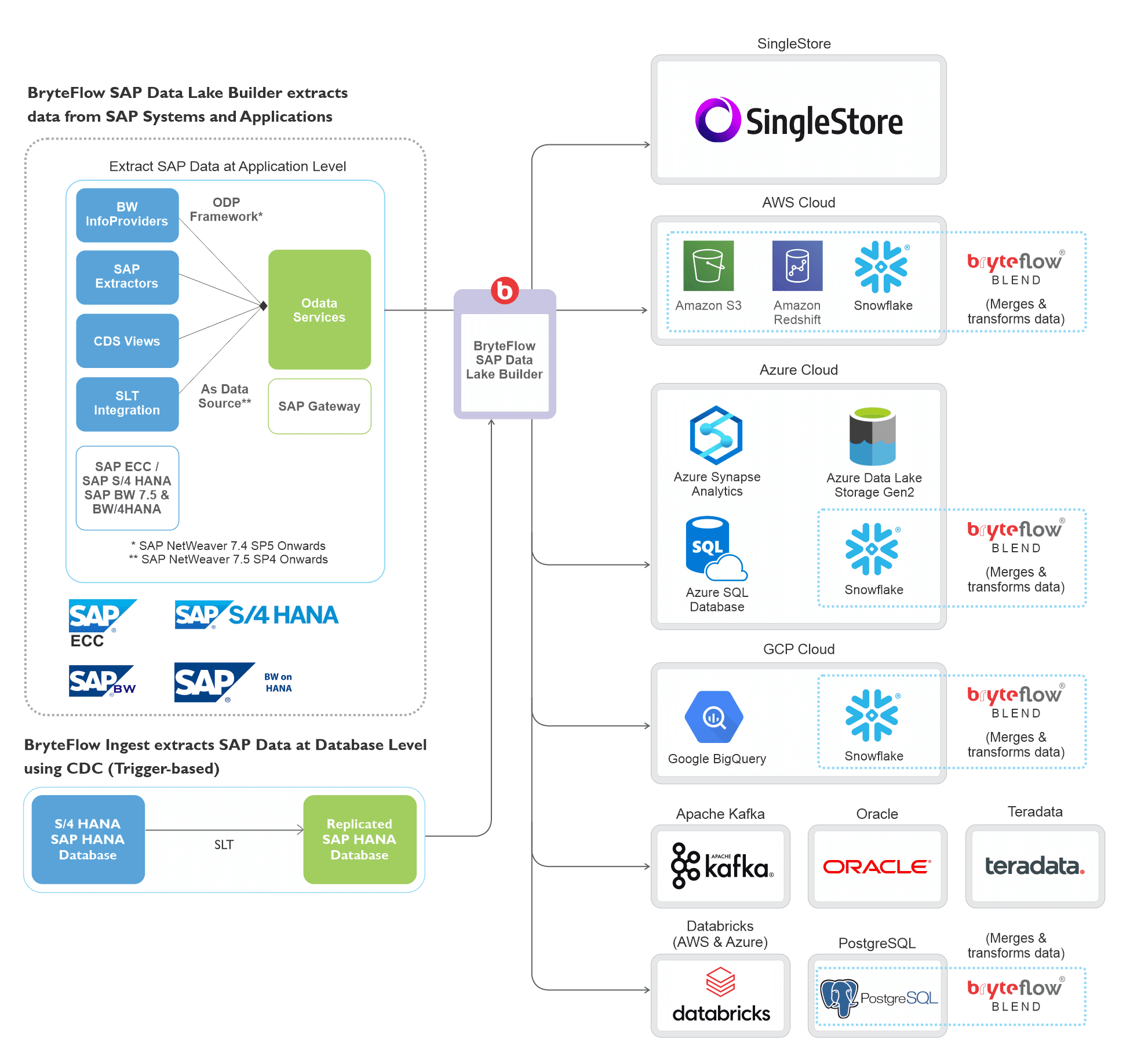

How should you now proceed to extract SAP data? The good news is that the BryteFlow SAP Data Lake Builder, our SAP connector, uses only SAP-certified and recommended mechanisms to extract data from SAP sources. It delivers real-time data from SAP applications and sources to multiple destinations, including Snowflake, Redshift, Amazon S3, Azure Synapse, ADLS Gen2, Azure SQL Database, Google BigQuery, Postgres, Databricks and SQL Server, using Change Data Capture. It ETLs SAP data in a completely automated manner, without you having to write a single line of code.

How Operational Data Provisioning (ODP) works

The Operational Data Provisioning (ODP) framework for data distribution provides a consolidated technology for data provisioning and consumption. ODP supports data extraction and replication to multiple targets, and it supports capturing changes at the source. ODP's extraction and replication mechanisms can move data to target applications such as SAP BW, SAP BW/4HANA, SAP Data Services and SAP HANA Smart Data Integration (SAP HANA SDI).

To capture deltas, data from the source is written to a delta queue, known as the Operational Delta Queue (ODQ), either by an update process or by passing it to the queue through an extractor interface. Several ODP providers (sources) can supply the delta queues with data, including DataSources (extractors), SAP BW or SAP BW/4HANA, ABAP Core Data Services Views (ABAP CDS Views), SAP Landscape Transformation Replication Server (SAP SLT), and SAP HANA Information Views (Calculation Views). The target systems (ODP consumers or subscribers) retrieve the data from the delta queue and continue processing it. The delta queues also help with monitoring the data extraction and replication process.
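
The provider/queue/subscriber roles described above can be pictured with a deliberately simplified model. This is a hypothetical sketch, not how the ODQ is implemented: providers append change records, and each subscriber keeps its own read position, so several consumers can read the same queue independently and each receives only what it has not fetched yet. Package confirmation, retention periods and recovery, which a real delta queue handles, are left out.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class DeltaQueue:
    """Toy delta queue: producers append change records, and every subscriber
    keeps its own read position, so each one receives only the deltas it has
    not fetched yet. Confirmation, retention and recovery are not modeled."""
    records: List[Dict[str, Any]] = field(default_factory=list)
    positions: Dict[str, int] = field(default_factory=dict)

    def subscribe(self, subscriber: str) -> None:
        # A new subscriber starts after the records already queued; in a real
        # system an initial full extraction would cover the earlier state.
        self.positions.setdefault(subscriber, len(self.records))

    def write(self, record: Dict[str, Any]) -> None:
        # Producer side (the "ODP provider" in this analogy).
        self.records.append(record)

    def fetch(self, subscriber: str) -> List[Dict[str, Any]]:
        # Consumer side: return unseen records and advance this subscriber only.
        start = self.positions[subscriber]
        new_records = self.records[start:]
        self.positions[subscriber] = len(self.records)
        return new_records


queue = DeltaQueue()
queue.subscribe("warehouse")
queue.write({"id": 1, "change": "C"})
queue.subscribe("lake")
queue.write({"id": 1, "change": "U"})
print(len(queue.fetch("warehouse")), len(queue.fetch("lake")))  # 2 1
```

Keeping one position per subscriber is what lets a single queue serve several targets without them interfering with each other.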

What are SAP OData Services?

SAP OData is a way to communicate with an SAP backend, whether for a front-end process or for data integration. OData (Open Data Protocol) is a Web protocol used to query and update data. It is built on Web technologies such as HTTP, the Atom Publishing Protocol (AtomPub) and RSS (Really Simple Syndication), and it can provide access to data from multiple applications. SAP Business Suite functionality can be exposed as REST-based OData services, and SAP Gateway then allows data from SAP applications to be shared with a wide variety of platforms and technologies so that it can be consumed easily.

What is SAP Gateway?

SAP Gateway, as a component of SAP NetWeaver, allows you to connect platforms, devices, and environments to SAP systems. It uses the Open Data Protocol (OData), so you have the flexibility to use any programming language or model to connect to SAP and non-SAP applications.
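
Because OData runs over plain HTTP, a client mostly needs to assemble URLs. The sketch below assumes the common SAP Gateway convention of publishing OData V2 services under `/sap/opu/odata/sap/<SERVICE>/`; the host `sap.example.com`, the service `ZCUSTOMER_SRV` and the entity set `CustomerSet` are hypothetical. `$metadata`, `$select`, `$filter`, `$top` and `$format` are standard OData system query options.

```python
from typing import Iterable, Optional
from urllib.parse import quote, urlencode


def service_root(host: str, service: str) -> str:
    # SAP Gateway usually publishes OData V2 services under /sap/opu/odata/sap/.
    return f"https://{host}/sap/opu/odata/sap/{service}/"


def query_url(host: str, service: str, entity_set: str, *,
              select: Optional[Iterable[str]] = None,
              filter_expr: Optional[str] = None,
              top: Optional[int] = None) -> str:
    """Build a read URL using standard OData system query options."""
    params = {"$format": "json"}
    if select:
        params["$select"] = ",".join(select)
    if filter_expr:
        params["$filter"] = filter_expr
    if top is not None:
        params["$top"] = str(top)
    # Keep '$' and ',' literal; percent-encode spaces and quotes.
    query = urlencode(params, quote_via=quote, safe="$,")
    return f"{service_root(host, service)}{entity_set}?{query}"


# The service document describing entity sets and properties:
print(service_root("sap.example.com", "ZCUSTOMER_SRV") + "$metadata")
# A filtered, projected read of at most 10 rows:
print(query_url("sap.example.com", "ZCUSTOMER_SRV", "CustomerSet",
                select=["CustomerID", "Name"], filter_expr="Country eq 'DE'", top=10))
```

In practice the client would also send credentials and handle CSRF tokens for write requests; read-only extraction like this typically needs only authentication.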

Data Extraction Process using ODP and SAP OData Services

ODP (Operational Data Provisioning) combined with SAP OData makes consistent, scalable extraction of deltas into non-ABAP external targets, such as Cloud systems and mobile applications, possible. SAP OData serves as the communication protocol for the ODP Data Replication API: the ODP framework grants the subscriber OData access, and with it access to the ODP data.

ODP-based data extraction using SAP OData allows data to be extracted across different systems, products, technology stacks and deployments. ODP is the gold standard for extracting data from an ABAP system into another system. Given the need for flexible, heterogeneous data environments, ODP can also be used for extraction into systems that are based on technologies other than ABAP, including applications run in the Cloud (Software as a Service). SAP does not consider the ABAP RFC interface suitable for these scenarios.

ODP Data Sources from which data can be extracted for new scenarios include:

- DataSources in SAP Business Suite systems

- InfoProviders in BW systems

Extraction scenarios with ODP

For these new scenarios, basic data extraction uses SAP Gateway Foundation: an SAP OData service is generated with the SAP Gateway Service Builder, based on an Operational Data Provider (ODP). An external OData client application can then call the service (URL) using OData over HTTP.

- The process supports package-based full extraction of very large volumes using the operational delta queue.

- After the initial full extraction, it can also extract deltas (changes) using the operational delta queue, provided the ODP source supports deltas.

- It can be used to access small data volumes directly, without an operational delta queue (for example, for a preview).
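
The three access modes above can be sketched from the client side. This is a minimal sketch under stated assumptions: responses use the OData V2 JSON layout (`{"d": {"results": [...], "__next": ...}}`), and, following SAP Gateway's change-tracking convention as we understand it, the initial request sends the header `Prefer: odata.track-changes` and the final page carries a `__delta` link for the next run. Verify both against your service. The `get_json` transport function is hypothetical and injected so the logic can be tried without a live SAP system.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

# Hypothetical transport: get_json(url, headers) returns the parsed JSON body.
# Injecting it keeps the sketch testable without a live SAP system.
GetJson = Callable[[str, Dict[str, str]], Dict[str, Any]]
Rows = List[Dict[str, Any]]


def read_pages(get_json: GetJson, url: str,
               headers: Dict[str, str]) -> Tuple[Rows, Optional[str]]:
    """Follow OData V2 server-side paging ("__next") and collect all rows.
    Also return the delta link ("__delta") if a page carries one."""
    rows: Rows = []
    delta_link: Optional[str] = None
    next_url: Optional[str] = url
    while next_url:
        page = get_json(next_url, headers)["d"]
        rows.extend(page["results"])
        next_url = page.get("__next")
        delta_link = page.get("__delta", delta_link)
    return rows, delta_link


def initial_load(get_json: GetJson, url: str) -> Tuple[Rows, Optional[str]]:
    # Full extraction in packages. ASSUMPTION: the "Prefer: odata.track-changes"
    # header asks the service to open a delta subscription.
    return read_pages(get_json, url, {"Prefer": "odata.track-changes"})


def delta_load(get_json: GetJson, delta_link: str) -> Tuple[Rows, Optional[str]]:
    # Calling the stored delta link returns only changes since the previous
    # run, plus a new delta link to store for the next run.
    return read_pages(get_json, delta_link, {})


def preview(get_json: GetJson, url: str, n: int = 10) -> Rows:
    # Small direct read with $top; no delta subscription involved.
    separator = "&" if "?" in url else "?"
    return get_json(f"{url}{separator}$top={n}", {})["d"]["results"]
```

A scheduler would persist the returned delta link after each successful run, so that the next run resumes exactly where the previous one stopped.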

How does BryteFlow work with ODP and SAP OData Services for extracting SAP data?

BryteFlow's SAP connector, the BryteFlow SAP Data Lake Builder, works with ODP and SAP OData Services to extract SAP data. As mentioned earlier, we do not use SAP RFC to extract data. Our extraction process is certified and recommended by SAP, so your implementation is safe and risk-free. It is also highly scalable, fast and performant. We have spent years perfecting our SAP extraction processes.

Please note that once the SAP OData service is up and running, the BryteFlow SAP connector can consume the data easily. The BryteFlow SAP Data Lake Builder offers multiple flexible connection options for SAP, including SAP BW Extractors, Database Logs, SAP CDS Views, S/4HANA, ECC, SAP SLT and SAP Data Services. It can extract the data and load it to any Cloud platform, including AWS, Azure or GCP, in real-time.

Highlights of the BryteFlow SAP Connector

Extracts SAP data with business logic intact

Our SAP connector can extract data from SAP applications such as SAP ECC, S/4HANA, SAP BW and SAP HANA, and replicate it with business logic intact to your data lake or data warehouse. You get a completely automated setup of data extraction and automated analysis of the SAP source application.

Our SAP connector tool supports loading of high volumes of enterprise data

The BryteFlow SAP connector loads terabytes of data in real-time using parallel, multi-threaded loading, smart partitioning and compression, and it brings across incremental data and deltas using Change Data Capture.
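
As a generic illustration of partitioned, parallel, compressed loading (not BryteFlow's implementation), the hypothetical sketch below splits rows into contiguous partitions and compresses each one as gzip newline-delimited JSON on a thread pool. In a real pipeline each worker would also upload its file to the target's staging area; that step is omitted here, and it is where threads pay off most, since workers then spend much of their time waiting on network I/O.

```python
import gzip
import json
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Dict, List

Row = Dict[str, Any]


def partition(rows: List[Row], n: int) -> List[List[Row]]:
    """Split rows into at most n contiguous, roughly equal partitions."""
    if not rows:
        return []
    size = -(-len(rows) // n)  # ceiling division
    return [rows[i:i + size] for i in range(0, len(rows), size)]


def compress_partition(part: List[Row]) -> bytes:
    # Newline-delimited JSON, gzip-compressed: a common staging format.
    text = "\n".join(json.dumps(row) for row in part)
    return gzip.compress(text.encode("utf-8"))


def load_parallel(rows: List[Row], n_partitions: int = 4,
                  max_workers: int = 4) -> List[bytes]:
    """Prepare partitions concurrently. A hypothetical upload step per
    partition would follow in a real pipeline."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(compress_partition, partition(rows, n_partitions)))


blobs = load_parallel([{"id": i} for i in range(10)], n_partitions=4)
print(len(blobs))  # 4
```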

Our SAP connector has flexible ways of connecting to SAP

It can connect to SAP Data Extractors or SAP CDS Views to get data from SAP applications such as SAP ECC, S/4HANA, SAP BW and SAP HANA. It automates SAP data extraction, both initial and incremental, using SAP OData Services with ODP.

Merges deltas automatically

Deltas (updates, inserts and deletes) are merged automatically with the initial data on the target to keep it in sync with the SAP source in real-time.
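
Merging deltas boils down to applying each change record, in order, to a keyed copy of the target. This minimal sketch assumes each record carries a change indicator in a field named `ODQ_CHANGEMODE` with values `'C'` (create), `'U'` (update) or `'D'` (delete), as ODP-based services commonly expose; treat the field name and values as assumptions to check against your service's `$metadata`. The key column `id` is hypothetical.

```python
from typing import Any, Dict, Iterable

Row = Dict[str, Any]


def merge_deltas(target: Dict[Any, Row], deltas: Iterable[Row],
                 key: str = "id") -> Dict[Any, Row]:
    """Apply change records, in arrival order, to a keyed copy of the target.
    ASSUMPTION: each record carries ODQ_CHANGEMODE = 'C', 'U' or 'D'."""
    merged = dict(target)
    for record in deltas:
        mode = record.get("ODQ_CHANGEMODE", "")
        # Drop ODQ_* technical fields before writing business data.
        row = {k: v for k, v in record.items() if not k.startswith("ODQ_")}
        if mode == "D":
            merged.pop(row[key], None)  # delete: remove the row if present
        else:
            merged[row[key]] = row      # create/update: upsert by key
    return merged


target = {1: {"id": 1, "qty": 5}, 2: {"id": 2, "qty": 7}}
deltas = [
    {"id": 1, "qty": 6, "ODQ_CHANGEMODE": "U"},
    {"id": 2, "ODQ_CHANGEMODE": "D"},
    {"id": 3, "qty": 1, "ODQ_CHANGEMODE": "C"},
]
print(merge_deltas(target, deltas))
# {1: {'id': 1, 'qty': 6}, 3: {'id': 3, 'qty': 1}}
```

Order matters: if the same key is updated and later deleted within one batch, applying the records in arrival order leaves the correct final state. On a database target the same logic is usually expressed as a `MERGE` statement.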

BryteFlow SAP Data Lake Builder provides completely automated SAP ETL

SAP data extraction, CDC, merging, masking, schema and table creation, and SCD Type 2 history are all automated, with no coding required.
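
SCD Type 2 history keeps every version of a record instead of overwriting it. A minimal sketch of the technique, with hypothetical column names `valid_from`, `valid_to` and `is_current`: when an incoming change differs from the current version of the same key, the current version is closed and a new open-ended version is appended. Validity intervals here are half-open, so a closed version's `valid_to` equals the next version's `valid_from`. Deletes (closing the current version without appending a new one) are not handled.

```python
from datetime import date
from typing import Any, Dict, List

Version = Dict[str, Any]
OPEN_END = date(9999, 12, 31)  # conventional "still valid" end date


def apply_scd2(history: List[Version], change: Dict[str, Any],
               key: str, as_of: date) -> List[Version]:
    """Record `change` as a new version if its attributes differ from the
    current version of the same key. Modifies and returns `history`."""
    for version in history:
        if version[key] == change[key] and version["is_current"]:
            if all(version.get(col) == val for col, val in change.items()):
                return history  # nothing changed: keep the current version
            version["valid_to"] = as_of  # close the old version
            version["is_current"] = False
            break
    history.append({**change, "valid_from": as_of,
                    "valid_to": OPEN_END, "is_current": True})
    return history


h: List[Version] = []
apply_scd2(h, {"id": 1, "city": "Berlin"}, "id", date(2024, 1, 1))
apply_scd2(h, {"id": 1, "city": "Munich"}, "id", date(2024, 2, 2))
for v in h:
    print(v["city"], v["valid_from"], v["valid_to"], v["is_current"])
# Berlin 2024-01-01 2024-02-02 False
# Munich 2024-02-02 9999-12-31 True
```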

Data delivered is ready to use on target

BryteFlow performs data type conversions out-of-the-box. Data delivered to the target is ready to use for Analytics or Machine Learning. Our SAP connector delivers data to Snowflake, Databricks, Redshift, S3, Azure Synapse, ADLS Gen2, Azure SQL DB, SQL Server, Kafka, Postgres and Google BigQuery.
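
To illustrate what data type conversion involves when ABAP fields arrive in their raw character form, here is a hypothetical sketch. It relies on well-known ABAP Dictionary formats: `DATS` is an 8-character `YYYYMMDD` string, where `00000000` means "no date"; `TIMS` is `HHMMSS`; and `NUMC` is digits stored as text. The target SQL type names in `TARGET_TYPE` are generic examples, not the types any particular product emits.

```python
from datetime import date, time
from decimal import Decimal
from typing import Any, Callable, Dict, Optional

# Illustrative mapping from ABAP Dictionary types to generic SQL column types.
TARGET_TYPE: Dict[str, str] = {
    "CHAR": "VARCHAR",
    "NUMC": "VARCHAR",  # digits stored as text; keep leading zeros
    "DATS": "DATE",
    "TIMS": "TIME",
    "DEC": "DECIMAL",
    "CURR": "DECIMAL",
    "QUAN": "DECIMAL",
    "INT4": "INTEGER",
}


def to_date(value: str) -> Optional[date]:
    # DATS is 'YYYYMMDD'; an all-zero or blank value means "no date".
    if value.strip("0 ") == "":
        return None
    return date(int(value[:4]), int(value[4:6]), int(value[6:8]))


def to_time(value: str) -> time:
    # TIMS is 'HHMMSS'.
    return time(int(value[:2]), int(value[2:4]), int(value[4:6]))


CONVERTERS: Dict[str, Callable[[str], Any]] = {
    "DATS": to_date,
    "TIMS": to_time,
    "DEC": Decimal, "CURR": Decimal, "QUAN": Decimal,  # avoid float rounding
    "INT4": int,
}


def convert(abap_type: str, raw: str) -> Any:
    """Convert a raw character value; unknown types pass through unchanged."""
    return CONVERTERS.get(abap_type, lambda v: v)(raw)


print(convert("DATS", "20240202"), convert("TIMS", "235959"), convert("CURR", "12.50"))
# 2024-02-02 23:59:59 12.50
```

Keeping `NUMC` as text is a deliberate choice: values such as material or document numbers are identifiers, and converting them to integers would drop their leading zeros.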

Besides SAP applications it also performs CDC from SAP databases

If access to the underlying database is available, the BryteFlow SAP connector can provide log-based Change Data Capture from sources.

BryteFlow SAP Data Lake Builder provides very high throughput and low latency

Our SAP connector loads data with very high throughput, moving approximately 1,000,000 rows in 30 seconds. It is also fast to deploy, and you can start receiving data in just 2 weeks.
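
As a back-of-envelope reading of that figure, assume the rate stays constant. In practice it varies with row width, network bandwidth and the target platform. The 100-million-row table below is a hypothetical example.

```latex
\text{rate} = \frac{10^{6}\ \text{rows}}{30\ \text{s}} \approx 3.3 \times 10^{4}\ \text{rows/s}
\qquad
t_{10^{8}\ \text{rows}} = \frac{10^{8}\ \text{rows}}{10^{6}\ \text{rows} / 30\ \text{s}} = 100 \times 30\ \text{s} = 3000\ \text{s} = 50\ \text{min}
```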

Try out our BryteFlow SAP Connector, contact us for a Demo

Conclusion

We have looked at the potential fallout of unpermitted usage of SAP RFCs as per SAP’s Note, and its possible impact on data implementations. We also learned how ODP and SAP OData services can be used to extract ABAP and other data. The blog also examined how BryteFlow, which uses SAP-certified ODP and SAP OData mechanisms to extract data, can provide a completely no-code, risk-free experience to ETL SAP data to Cloud platforms like AWS, Azure and GCP.