Snowflake ETL with CDC

Snowflake ETL or Snowflake ELT – we do both, in real-time using log-based CDC

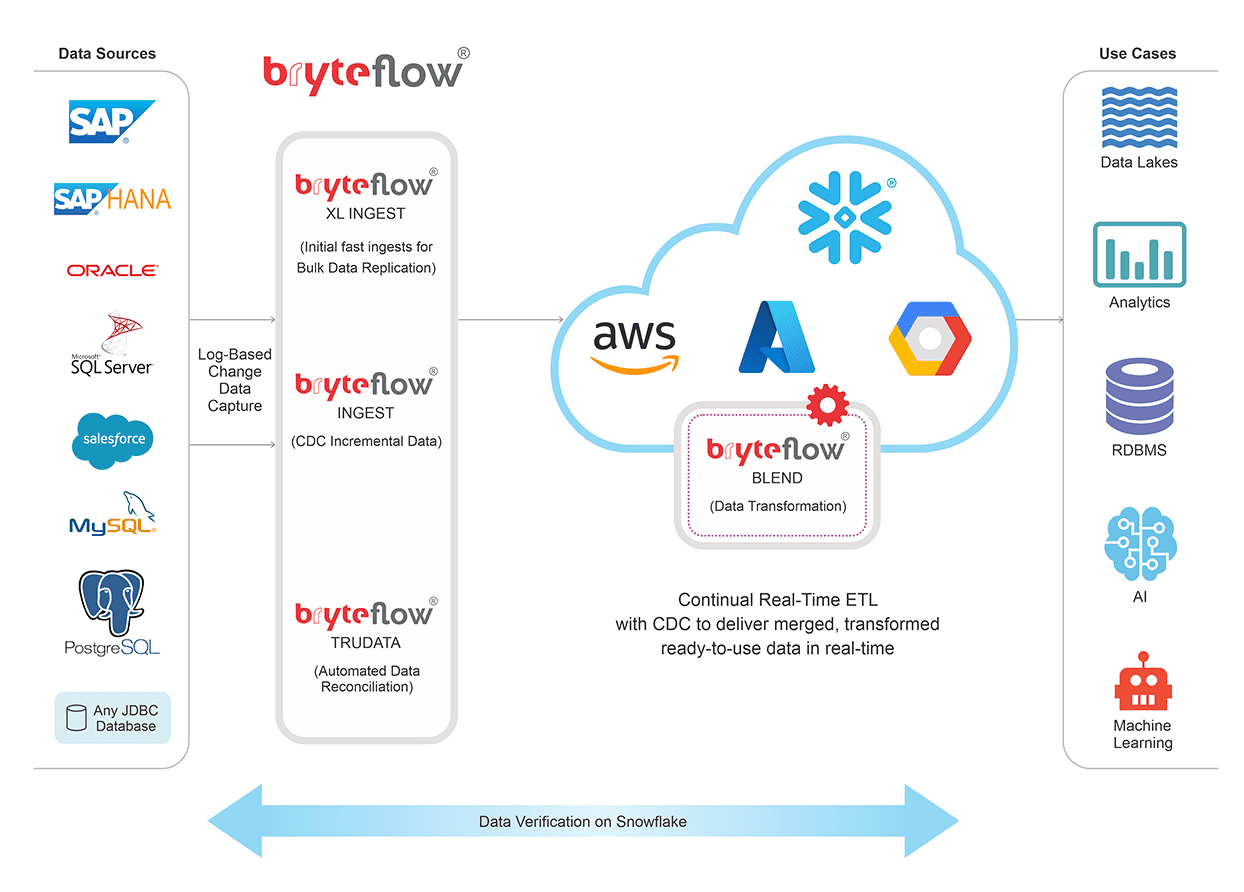

Snowflake ETL is real-time, easy, and automated with BryteFlow. Unlike other ETL software that enables either ETL (Extract Transform Load) or CDC (Change Data Capture), the BryteFlow ETL tool offers both. BryteFlow has been optimized for the Snowflake ETL process and uses the compute power of your Snowflake Cloud Data Warehouse to fuel data transformation on Snowflake. BryteFlow extracts and loads data from multiple sources using log-based Change Data Capture, then merges, joins and transforms it on Snowflake to provide data ready for Analytics and Machine Learning.

Build a Snowflake Data Lake or Snowflake Data Warehouse

We offer ETL in Snowflake (or rather, ELT) on AWS, Azure and Google Cloud. BryteFlow also offers a more traditional ETL approach for Snowflake on AWS, using Amazon S3 as a staging area to carry out data transformation before loading the transformed data to Snowflake.

SQL Server to Snowflake in 4 Easy Steps

Snowflake ETL Tool Highlights

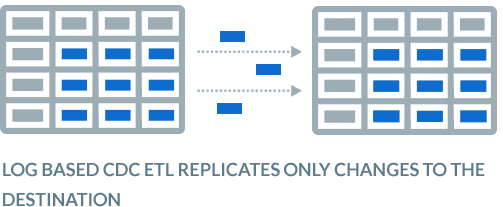

- Snowflake ETL uses CDC (Change Data Capture) to sync data with the source. (Related: About Snowflake CDC)

- High-speed data extraction and replication for Snowflake ETL: 1,000,000 rows in 30 seconds with BryteFlow Ingest.

- Support for fast loading of bulk data: an initial full refresh with parallel, multi-threaded loading and compression. (Related: How to load terabytes of data to Snowflake fast)

- Completely automated Snowflake ETL, with no coding for any process, including SCD Type2, masking and tokenization.

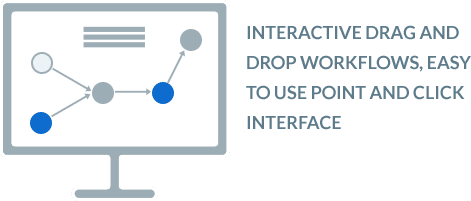

- Visual drag-and-drop UI for Snowflake ETL workflows with our data transformation tool.

- Data delivered and transformed on Snowflake is immediately ready to use for Analytics or Data Science purposes.

- Our Snowflake ETL allows you to consume data on Snowflake with the BI tools of your choice. (Related: Why You Need Snowflake Stages)

- BryteFlow has highly performant ETL or ELT pipelines that use the power of the underlying Snowflake data warehouse (Snowflake clusters) to run the transformations. (Related: Data Pipelines, ETL Pipelines and 6 Reasons for Automation)

- Best practices are baked into the Snowflake ETL software for optimal performance. (Related: Change Data Capture (CDC) Automation)

- Start-to-finish monitoring of Snowflake ETL workflows with relevant dashboards. (Related: Compare Databricks vs Snowflake)

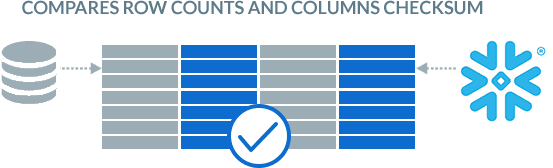

- Automated data reconciliation that compares row counts and column checksums.

BryteFlow as an alternative to Matillion and Fivetran for SQL Server to Snowflake

Snowflake ETL / ELT: Automated and Real-time

Extract, Load and Transform any data on Snowflake

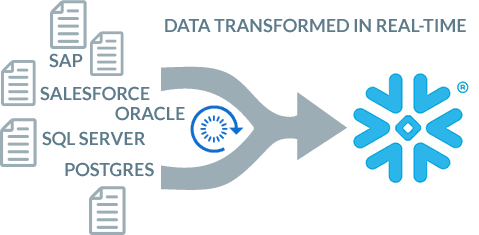

Extract data from multiple sources, load it, then merge and transform it on Snowflake. Sources include legacy databases like SAP, Oracle, SQL Server and Postgres, devices and sensors, and applications like Salesforce. Get merged, transformed data that is ready for use in Snowflake. For SAP data, we can also ETL your data directly from sources to Snowflake with the BryteFlow SAP Data Lake Builder.

CDC ETL Process delivers CDC with ETL

BryteFlow provides CDC ETL and is one of the few Snowflake ETL tools to do so. It also has one of the lowest compute footprints on Snowflake, reducing costs substantially. In the Snowflake ETL process, the initial full refresh of data is followed by incremental changes at source, which are delivered with low-impact, log-based CDC either continually in real-time or at a frequency of your choosing.
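The full-refresh-then-incremental pattern described above can be sketched in a few lines of Python. This is only an illustration of the general CDC idea, not BryteFlow's implementation: the event shape `(operation, primary_key, row)` and the function name `apply_changes` are assumptions made for the example, and an in-memory dictionary stands in for the Snowflake target table.

```python
from typing import Any, Dict, List, Optional, Tuple

# Hypothetical change-event shape: (operation, primary_key, row_or_None).
ChangeEvent = Tuple[str, int, Optional[Dict[str, Any]]]
Table = Dict[int, Dict[str, Any]]


def apply_changes(target: Table, events: List[ChangeEvent]) -> Table:
    """Apply change events, in commit order, to a table keyed by primary key."""
    for op, key, row in events:
        if op in ("insert", "update"):
            if row is None:
                raise ValueError(f"{op} event for key {key} has no row data")
            target[key] = dict(row)   # upsert the latest version of the row
        elif op == "delete":
            target.pop(key, None)     # a delete of a missing key is a no-op
        else:
            raise ValueError(f"unknown operation: {op}")
    return target


# Initial full refresh: the target starts as a copy of the source snapshot.
target: Table = {1: {"name": "Ada"}, 2: {"name": "Bob"}}

# Incremental changes, as they might be read from the source's transaction log.
events: List[ChangeEvent] = [
    ("update", 2, {"name": "Robert"}),
    ("insert", 3, {"name": "Cy"}),
    ("delete", 1, None),
]
apply_changes(target, events)
```

Only the changed rows travel after the first load, which is why log-based CDC has a low impact on the source compared with repeated full extracts.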

Databricks vs Snowflake: 18 differences you should know

No code Data Transformation for Snowflake ETL / ELT

Data transformation for ETL in Snowflake is SQL-based and automated with a user-friendly, visual drag-and-drop UI. Add and connect tasks visually, so you can easily run all data transformation workflows as an end-to-end ETL process. Data is merged, transformed and ready to use in near real-time.

Our Data Transformation Tool: BryteFlow Blend

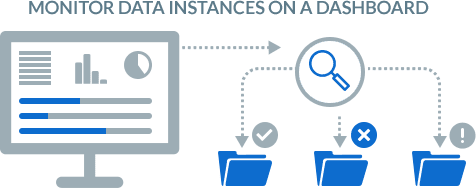

Dashboards to monitor data ingestion and transformation instances easily for Snowflake ETL

The BryteFlow ControlRoom integrates seamlessly with our data replication and transformation software to display the status of various ingest and transform instances. You will get updates and alerts about your Snowflake ETL statuses for quick remediation if required.

How to load terabytes of data to Snowflake fast

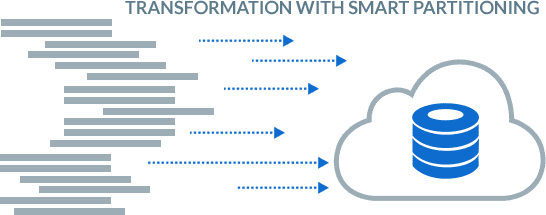

Smart Partitioning and compression for high performance data transformation for ETL in Snowflake

BryteFlow Blend uses smart partitioning techniques and compression of data for fast data transformation. Data is transformed in increments on Snowflake, leading to optimal, fast performance so data is ready to use that much faster.

Snowflake ETL with Complete Automation

BryteFlow’s Snowflake ETL automates all processes including metadata and data lineage, SCD Type2, time-series data, DDL, masking and tokenization. All data assets have automated metadata and data lineage, so you know where your data originated, what it is and where it is stored.
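For readers unfamiliar with SCD Type2 (slowly changing dimension, type 2), the sketch below shows what the technique does: instead of overwriting a changed attribute, it closes the current version of the record and appends a new one, so history is preserved. It is a generic illustration under assumed names (`CustomerVersion`, `apply_scd2`, and the `city` attribute are hypothetical), not a description of how BryteFlow implements it.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class CustomerVersion:
    customer_id: int
    city: str
    valid_from: date
    valid_to: Optional[date] = None  # None marks the current version


def apply_scd2(history: List[CustomerVersion], customer_id: int,
               city: str, change_date: date) -> List[CustomerVersion]:
    """Record a new value for a customer while keeping all earlier versions."""
    current = next(
        (v for v in history if v.customer_id == customer_id and v.valid_to is None),
        None,
    )
    if current is not None:
        if current.city == city:
            return history               # nothing changed, keep history as-is
        current.valid_to = change_date   # close the outgoing version
    history.append(CustomerVersion(customer_id, city, change_date))
    return history


history: List[CustomerVersion] = []
apply_scd2(history, 1, "Sydney", date(2023, 1, 1))
apply_scd2(history, 1, "Perth", date(2024, 6, 1))
```

After the two calls, the history holds a closed Sydney version and an open Perth version, so a query can reconstruct the customer's city as of any date.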

Create a Snowflake Data Lake or Snowflake Data Warehouse

Automated Data Reconciliation on Snowflake Cloud

BryteFlow TruData, our data reconciliation tool, continually reconciles data in your Snowflake database with data at source. It compares row counts and column checksums against the source database and can automatically serve up flexible comparisons and match source and destination datasets.
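A reconciliation check based on row counts and column checksums can be sketched as follows. This is a minimal illustration of the idea, not TruData's algorithm: the function names `column_checksums` and `reconcile`, the SHA-256 hash, and the in-memory lists of rows are all assumptions chosen for the example.

```python
import hashlib
from typing import Dict, List

Row = Dict[str, object]


def column_checksums(rows: List[Row]) -> Dict[str, str]:
    """Return an order-independent SHA-256 checksum for each column."""
    columns = sorted({col for row in rows for col in row})
    checksums: Dict[str, str] = {}
    for col in columns:
        # Sort the values so that row order does not affect the checksum.
        values = sorted(repr(row.get(col)) for row in rows)
        joined = "\x1f".join(values).encode("utf-8")
        checksums[col] = hashlib.sha256(joined).hexdigest()
    return checksums


def reconcile(source: List[Row], destination: List[Row]) -> Dict[str, object]:
    """Compare row counts and per-column checksums of two datasets."""
    src, dst = column_checksums(source), column_checksums(destination)
    mismatched = sorted(c for c in set(src) | set(dst) if src.get(c) != dst.get(c))
    return {
        "row_count_match": len(source) == len(destination),
        "mismatched_columns": mismatched,
    }


source = [{"id": 1, "amount": 10}, {"id": 2, "amount": 20}]
shuffled = [{"id": 2, "amount": 20}, {"id": 1, "amount": 10}]  # same data, new order
drifted = [{"id": 1, "amount": 10}, {"id": 2, "amount": 25}]   # one value differs
```

Here `reconcile(source, shuffled)` reports a full match, while `reconcile(source, drifted)` reports matching row counts but flags the `amount` column, which is the case a row count alone would miss.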

SQL Server to Snowflake in 4 Easy Steps

Snowflake ETL Extraction and Transformation are seamlessly integrated

Extract and load data to Snowflake in near real-time with very low latency, at an average speed of 1,000,000 rows in 30 seconds. Extraction and transformation processes for ETL in Snowflake are integrated seamlessly with BryteFlow. If configured to do so, data transformation begins automatically, in parallel with extraction.

About BryteFlow Ingest and BryteFlow XL Ingest

Snowflake ETL with built-in resiliency and automated catch-up

In the event of a system outage or lost connectivity, the ETL software has an automated catch-up mode: once normal conditions are restored, it picks up the Snowflake ETL process where it left off.
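Catch-up behaviour of this kind is commonly built on a persisted checkpoint that records the last change successfully applied. The sketch below illustrates that general technique only; whether BryteFlow uses this exact mechanism is not stated here, and the names `replicate`, `state` and `fail_at` are hypothetical, with a plain list standing in for the source change log.

```python
from typing import Dict, List, Optional


def replicate(log: List[str], target: List[str], state: Dict[str, int],
              fail_at: Optional[int] = None) -> None:
    """Apply log entries from the saved checkpoint onward.

    The checkpoint advances only after an entry is applied, so a failure
    never skips or repeats a change on the next run.
    """
    for position in range(state["checkpoint"], len(log)):
        if position == fail_at:
            raise ConnectionError("simulated outage")  # stand-in for lost connectivity
        target.append(log[position])
        state["checkpoint"] = position + 1


log = ["change-1", "change-2", "change-3", "change-4"]
target: List[str] = []
state = {"checkpoint": 0}

try:
    replicate(log, target, state, fail_at=2)  # outage after two changes
except ConnectionError:
    pass

replicate(log, target, state)  # catch-up run resumes from the checkpoint
```

In a real pipeline the checkpoint would be stored durably (for example alongside the target data) so that it survives a restart of the replication process itself.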

Zero-ETL, New Kid on the Block?